In Silicon Valley, AI executives continue to grapple with a balancing act between their unwavering optimism about AI’s potential and their growing concerns over its dangers.

James Manyika, a notable figure with a background in advising the Obama administration and now helming Google’s “tech and society” division, is emblematic of the complex attitudes held within the AI community. While he extols AI as a “remarkable, influential, and transformative technology,” he, like other luminaries in the field, such as OpenAI’s CEO Sam Altman, also conveys a sense of caution.

Manyika and several other AI insiders lent their signatures to a concise letter in May, affirming that safeguarding against AI-induced existential risks should be treated as a global imperative, equivalent in importance to other societal-scale threats like pandemics and nuclear warfare. However, Manyika’s recent conversation with The Washington Post revealed a certain ambiguity. He acknowledged that AI’s progression could lead to adverse outcomes, yet his stance appears intentionally open-ended, leaving a broad spectrum of possibilities unaddressed.

This balancing act matters because of Manyika’s influential status in the AI domain and his role in representing Google’s professed commitment to a “bold and responsible” approach to AI. In a blog post published in May, Manyika described this philosophy as striving to harness AI’s potential benefits while simultaneously addressing its challenges. He emphasized the need to embrace the inherent tension between bold innovation and conscientious responsibility.

However, some critics quickly highlighted the vague nature of Manyika’s statements. Rebecca Johnson, an AI ethics researcher at the University of Sydney who recently collaborated with Google, expressed skepticism about the substance behind the rhetoric. She questioned the real implications of Manyika’s words, noting that they appeared to be more of a slogan than a substantive proclamation.

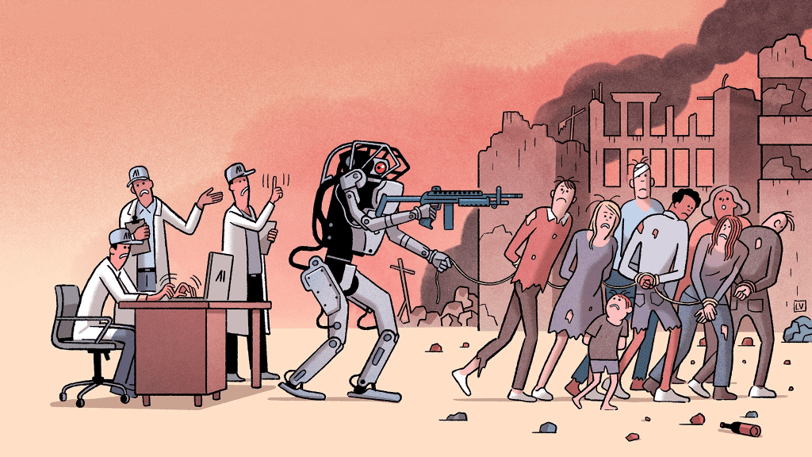

While it is understandable that AI leaders desire to preserve their credibility concerning AI’s future trajectory, forging ahead with an equivocal perspective on AI risks fails to instill confidence. Echoing empty talking points is insufficient, especially as numerous anticipated AI risks are manifesting in the real world. As AI’s impact becomes increasingly palpable, the AI community faces the challenge of balancing ambition and vigilance.

In conclusion, the dissonance within the AI community is evident through figures like James Manyika, who personify the intricacies of AI’s potential and perils. The ongoing discourse highlights the need for AI leaders to translate their rhetoric into concrete actions, particularly as AI-related risks materialize.

Only by reconciling optimism with a pragmatic assessment of potential hazards can the AI field navigate its path forward.