Facial recognition has been one of the goals of technology ever since machine learning was introduced, and a myriad of models and techniques are now available to make it work. It is a huge industry with applications in traffic, home systems, market research, emotion recognition, and security.

Every emerging technology has critics who try to debunk it. For emotion recognition, critics raise privacy concerns, and some even go as far as to say that most implementations are racially biased. We know that machine learning has to be taken with a pinch of salt when it comes to accuracy, but exciting things are still being developed.

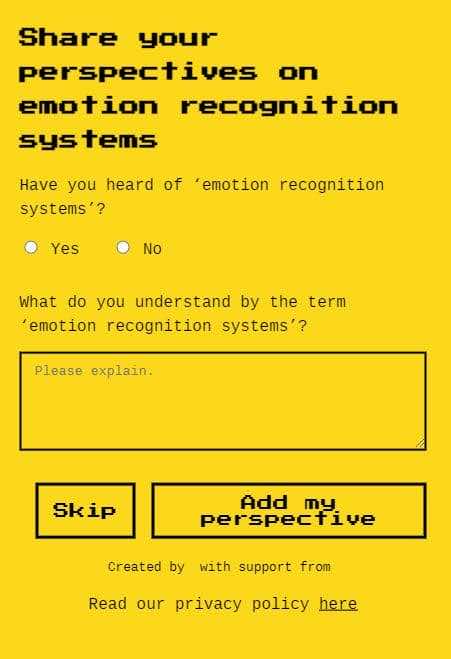

A team of researchers has created a web app called emojify.info where the public can see how emotion recognition works using their own cameras or webcams. It includes a few mini-games. In one, players make faces to trick the model as much as possible; another shows how the model can struggle to read emotions once context is involved.

Dr. Alexa Hagerty, the project lead and a researcher at the University of Cambridge Leverhulme Centre for the Future of Intelligence and the Centre for the Study of Existential Risk (kind of a hard title to swallow), said: "It is a form of facial recognition, but it goes farther because rather than just identifying people, it claims to read our emotions, our inner feelings from our faces."

She also said, "We need to be having a much wider public conversation and deliberation about these technologies." The goal is to raise awareness of how the technology actually works. For example, the system is not reading emotion in any real sense. It detects your facial expression and then assumes which movements are linked to which emotion, such as a frown meaning that a person is angry.
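To make that point concrete, here is a deliberately oversimplified Python sketch. It assumes some upstream detector has already reduced the face to a single expression label; the labels, the mapping, and the `infer_emotion` function are hypothetical and are not how emojify.info or any real product is implemented. Real systems typically use trained classifiers rather than a hand-written table, but they rest on the same assumption that certain facial movements correspond to certain emotions.

```python
# Hypothetical lookup table: the expression labels and emotion names are
# illustrative assumptions, not taken from any real emotion recognition system.
EXPRESSION_TO_EMOTION = {
    "frown": "angry",
    "smile": "happy",
    "raised_eyebrows": "surprised",
    "downturned_mouth": "sad",
}


def infer_emotion(expression: str) -> str:
    """Map a detected facial expression to an assumed emotion label.

    The function never sees how the person actually feels; it only
    looks up the label associated with the visible movement.
    """
    return EXPRESSION_TO_EMOTION.get(expression, "unknown")


# A genuine frown and a deliberately faked frown look identical to the
# lookup, so both receive the same label.
genuine = infer_emotion("frown")
faked = infer_emotion("frown")
print(genuine, faked)  # angry angry
```

Because the mapping only ever sees the movement, a faked expression gets exactly the same label as a genuine one, which is the weakness the app's mini-games let people explore.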

Vidushi Marda, senior program officer at the human rights organization Article 19, has said that it is crucial to press "pause" on the growing market for emotion recognition systems: "The use of emotion recognition technologies is deeply concerning as not only are these systems based on discriminatory and discredited science, their use is also fundamentally inconsistent with human rights."

Well, let’s just hope we don’t see a Skynet in the future. Personally, I don’t see much of an issue with emotion recognition, especially since you can fake it easily.