Could smarter algorithms alone make your laptop twice as fast? It sounds odd, but that is what new research from the University of California, Riverside (UCR) promises. The approach would make laptops not only faster but also more energy efficient.

The technique is called simultaneous and heterogeneous multithreading (SHMT). It builds on the fact that modern phones, computers, and other gadgets usually rely on more than one kind of processor to do their thinking. The system on which it was tested combined three types of processing units.

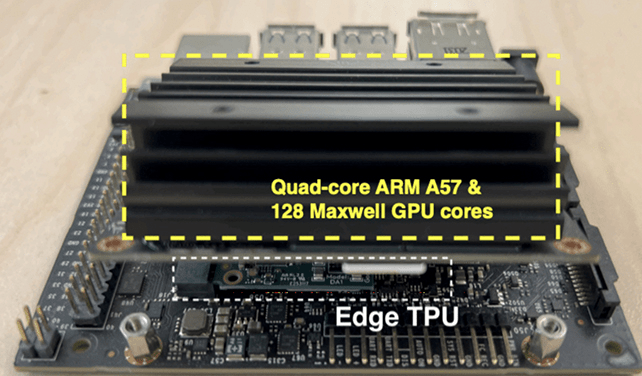

“You don’t have to add new processors because you already have them,” says computer engineer Hung-Wei Tseng from UCR. The test system had three of them: a graphics processing unit (GPU), a tensor processing unit (TPU) for machine learning, and a central processing unit (CPU) for general computing.

The idea is to split a task into subtasks and run them simultaneously on different processors instead of leaving some of those processors idle. By keeping all of them busy in parallel, the researchers hope to regain lost time and energy. For the tests, the team used an ARM Cortex-A57 CPU, an Nvidia GPU, and a Google Edge TPU. The results matched the promise above: code ran 1.95 times faster, and energy consumption fell by 51%.
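To make the idea concrete, here is a minimal Python sketch of splitting one job into subtasks and running them at the same time on different workers. It is an illustration only, not the researchers' scheduler: the three `run_on_*` functions are hypothetical stand-ins for a CPU, a GPU, and a TPU, and each one is just ordinary Python.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-ins for three processing units. In this sketch each one
# is a plain Python function, not a real CPU, GPU, or TPU backend.
def run_on_cpu(chunk):
    return [x * x for x in chunk]

def run_on_gpu(chunk):
    return [x * x for x in chunk]

def run_on_tpu(chunk):
    return [x * x for x in chunk]

DEVICES = [run_on_cpu, run_on_gpu, run_on_tpu]

def split(data, parts):
    """Split data into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def run_heterogeneous(data):
    """Give each device one subtask, run them concurrently, merge in order."""
    chunks = split(data, len(DEVICES))
    with ThreadPoolExecutor(max_workers=len(DEVICES)) as pool:
        futures = [pool.submit(dev, chunk) for dev, chunk in zip(DEVICES, chunks)]
        results = [f.result() for f in futures]
    # Flatten the per-device results back into a single list.
    return [y for part in results for y in part]

squares = run_heterogeneous(list(range(10)))
```

The sketch splits the work evenly, which is the simplest possible choice. A real system would presumably size each subtask to the speed of the processor that receives it.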

“The entrenched programming models focus on using only the most efficient processing units for each code region, underutilizing the processing power within heterogeneous computers,” write the researchers in their paper.

However promising the experiment sounds, the researchers acknowledge that significant issues must be resolved before the technique can reach the commercial level the team is aiming for.

“Conventional homogeneous simultaneous multithreading hardware does not need to cope with quality assurance,” write the researchers. “In contrast, SHMT has to ensure quality because of the potential precision mismatch of underlying architectures.”
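A small Python sketch shows what a precision mismatch can look like. It computes the same dot product twice: once in floating point, as a CPU or GPU typically would, and once on inputs rounded to 8-bit integers, the kind of reduced precision that accelerators such as the Edge TPU work with. The input values, the `SCALE` step, and the `within_tolerance` check are assumptions made for illustration, not the quality-assurance mechanism from the paper.

```python
def quantize(values, scale):
    """Round floats to 8-bit signed integers using an assumed fixed scale."""
    return [max(-128, min(127, round(v / scale))) for v in values]

def dot_float(a, b):
    """Dot product in ordinary floating point."""
    return sum(x * y for x, y in zip(a, b))

def dot_int8(a, b, scale):
    """Dot product on quantized inputs, rescaled back to a float."""
    qa, qb = quantize(a, scale), quantize(b, scale)
    return sum(x * y for x, y in zip(qa, qb)) * scale * scale

def within_tolerance(exact, approx, tol):
    """Hypothetical quality check: accept the result only if it is close enough."""
    return abs(exact - approx) <= tol

a = [0.11, 0.52, 0.93]
b = [0.27, 0.64, 0.35]
SCALE = 1 / 127  # assumed quantization step for values in [-1, 1]

exact = dot_float(a, b)
approx = dot_int8(a, b, SCALE)
error = abs(exact - approx)
```

The two results differ slightly even though both paths ran the same calculation on the same inputs. When subtasks of one program land on processors with different precision, something has to decide whether a gap like this is acceptable.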