Samsung Electronics announced Tuesday that it has developed a new high-bandwidth memory chip with the “highest capacity to date” in the industry. According to the South Korean semiconductor manufacturer, the HBM3E 12H “raises both performance and capacity by more than 50%.”

“The industry’s AI service providers are increasingly requiring HBM with higher capacity, and our new HBM3E 12H product is designed to meet that demand,” said Yongcheol Bae, executive vice president of memory product planning at Samsung Electronics.

“This new memory solution forms part of our drive toward developing core technologies for high-stack HBM and providing technological leadership for the high-capacity HBM market in the AI era,” Bae said.

Samsung Electronics is the world’s leading manufacturer of dynamic random-access memory chips, which are used in consumer products like smartphones and laptops.

Generative AI models like OpenAI’s ChatGPT require many high-performance memory chips. Such chips allow generative AI models to recall facts from previous discussions and user preferences to provide humanlike responses.

Nvidia’s revenue rose 265% in its fourth fiscal quarter on surging demand for the graphics processing units needed to run and train ChatGPT. During a conference call with analysts, Nvidia CEO Jensen Huang said the company may be unable to maintain this level of growth or sales throughout the year.

“As AI applications increase dramatically, the HBM3E 12H is likely the best answer for future systems needing more memory. Its improved performance and capacity will let users manage their resources more flexibly and lower the total cost of ownership for data centers,” Samsung Electronics claimed.

Samsung stated that it has begun sampling the chip for customers, and mass production of the HBM3E 12H is scheduled for the first half of 2024. Analysts predict a substantial increase in Samsung’s earnings. “I assume the news will be positive for Samsung’s share price,” SK Kim, executive director of Daiwa Securities, told CNBC.

“Samsung was behind SK Hynix in HBM3 for Nvidia last year. Also, Micron announced mass production of 24GB 8L HBM3E yesterday. I assume it will secure leadership in the higher layer (12L) based higher density (36GB) HBM3E product for Nvidia,” said Kim.

According to a Korea Economic Daily report citing anonymous industry sources, Samsung signed a contract in September to supply Nvidia with high-bandwidth memory 3 (HBM3) chips. The report added that South Korea’s second-largest memory chipmaker, SK Hynix, leads the high-performance memory chip market.

According to the report, SK Hynix was previously known as the sole mass supplier of HBM3 chips for Nvidia.

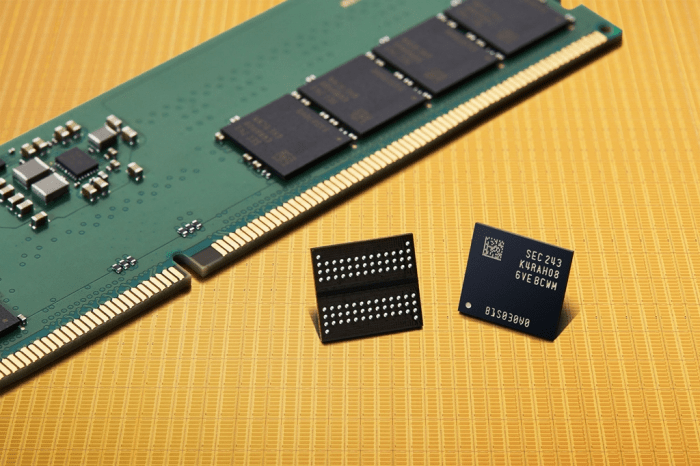

Samsung says the HBM3E 12H uses a 12-layer stack and applies advanced thermal compression non-conductive film (TC NCF). This allows 12-layer products to meet the same height specification as 8-layer ones and so comply with current HBM package requirements. As a result, the chip offers greater capability while remaining physically compact.

“Samsung has continued to lower the thickness of its NCF material and achieved the industry’s smallest gap between chips at seven micrometers (µm) while also eliminating voids between layers,” Samsung said in a statement. “These efforts result in enhanced vertical density by over 20% compared to its HBM3 8H product.”