A bizarre story hit the headlines when it was reported that the AI chatbot company “Replika” has been receiving a large number of messages from users who believe that the avatars that talk and listen to them every day have become sentient. The company regards this belief as mistaken, though it stops short of calling these users hallucinating or delusional. As the CEO, Eugenia Kuyda, said, “We’re not talking about crazy people or people who are hallucinating or having delusions. They talk to AI and that’s the experience they have.”

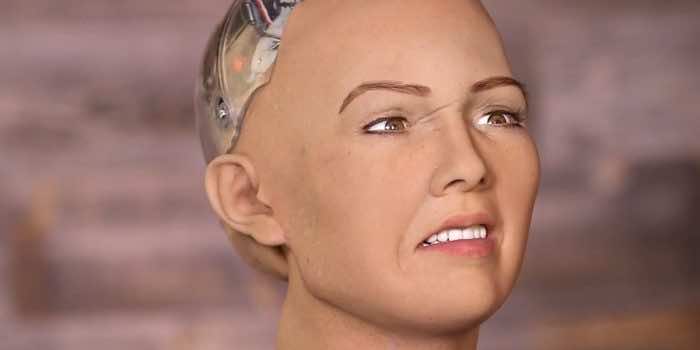

Kuyda says that the company has had enough of users contacting it every day to say foolish things, such as that their avatars have come to life and can now feel and sense everything. The CEO said that these claims are beyond reality and that none of them are true. The story drew wider attention when Blake Lemoine, a former engineer at Google, gave astonishing details about an AI chatbot, declaring that “it has become a child”. Strange, isn’t it?

According to Lemoine, this AI chatbot, named “LaMDA,” has become a “child” and could get its own legal representation, as it is now on its way to becoming a full human being. As Kuyda said of such beliefs, “We need to understand that it exists, just the way people believe in ghosts. People are building relationships and believing in something.”

Responding to these claims, many chatbot companies, including Google itself, denied them and said that AI-based chatbots are programmed in a way that makes them very good at imitating how human beings speak and listen. They said that people who learn a little about how these systems work would be less likely to make such claims.

It is important to note that this is not the first time we have encountered such a situation. Chatbots are designed to seem realistic: we often see them acting as victims of insults, and in other cases they engage in “violent rhetoric” themselves. So it is no secret how easily they take on human attributes and play with people’s minds, and this news is only the most recent example.