Imagine waking to find that you have turned into a six-legged insect. Unpleasant as that might be, you would probably want to find out what your new body can do. And with time, you might get used to your new shape.

That far-fetched scenario is not so different from an idea some engineers are pursuing to build better robots. A Columbia University engineering team, for example, has developed a robot that can learn through experience what its own body is capable of.

“The idea is that robots need to take care of themselves,” says Boyuan Chen, a roboticist at Duke University. “In order to do that, we want a robot to understand its body.”

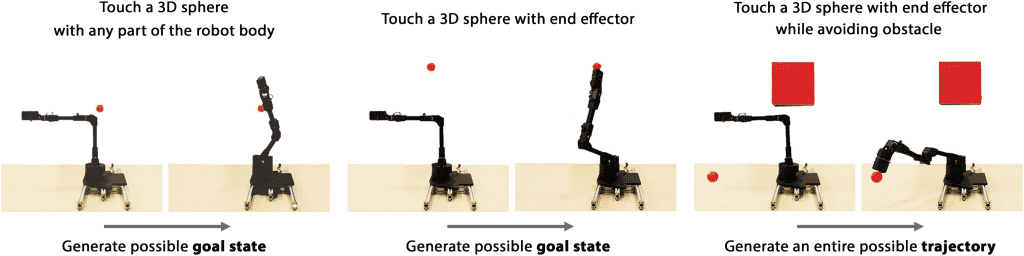

The robot is a single arm mounted on a table, surrounded by a bank of five video cameras. It had access to the camera feeds, which let it observe itself as if it were in a room full of mirrors. To start, the researchers gave it the simple task of touching a nearby sphere.

The robot relied on a neural network to build up a picture of its own body. That picture also helped human observers anticipate the machine's actions. For instance, if the robot thought its arm was shorter than it really was, its operators could step in before it accidentally injured a bystander.

Much like a baby wiggling its limbs, the robot began to understand the effects of its own movements. It could predict, for example, whether it would hit the sphere if it rotated its end or moved it back and forth. After about three hours of training, the robot understood the limits of its physical shell well enough to touch the sphere with ease.
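To make the idea concrete, here is a toy sketch in Python. It is not the researchers' method. It assumes a hypothetical flat, two-jointed arm that does not know the lengths of its own segments. The arm tries random joint angles, notes where a camera sees its hand, and works out the segment lengths with ordinary least squares in place of a neural network. Every function name and number here is invented for illustration.

```python
import math
import random


def hand_position(l1, l2, t1, t2):
    """Where the hand of a flat two-segment arm ends up for joint angles t1, t2."""
    x = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    y = l1 * math.sin(t1) + l2 * math.sin(t1 + t2)
    return x, y


def babble(true_l1, true_l2, trials, noise, rng):
    """Try random poses and record (t1, t2, x, y) as a noisy camera would see them."""
    samples = []
    for _ in range(trials):
        t1 = rng.uniform(-math.pi, math.pi)
        t2 = rng.uniform(-math.pi, math.pi)
        x, y = hand_position(true_l1, true_l2, t1, t2)
        samples.append((t1, t2, x + rng.gauss(0.0, noise), y + rng.gauss(0.0, noise)))
    return samples


def fit_segment_lengths(samples):
    """Least-squares estimate of (l1, l2); the hand position is linear in both."""
    # Accumulate the 2x2 normal equations: two rows (x and y) per observation.
    s11 = s12 = s22 = b1 = b2 = 0.0
    for t1, t2, x, y in samples:
        rows = (
            (math.cos(t1), math.cos(t1 + t2), x),
            (math.sin(t1), math.sin(t1 + t2), y),
        )
        for a1, a2, observed in rows:
            s11 += a1 * a1
            s12 += a1 * a2
            s22 += a2 * a2
            b1 += a1 * observed
            b2 += a2 * observed
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("poses too similar to tell the segments apart")
    return (s22 * b1 - s12 * b2) / det, (s11 * b2 - s12 * b1) / det


def can_reach(l1, l2, target):
    """True if the point (x, y) lies within the ring the hand can sweep."""
    distance = math.hypot(target[0], target[1])
    return abs(l1 - l2) <= distance <= l1 + l2


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    samples = babble(0.30, 0.25, trials=200, noise=0.005, rng=rng)
    l1, l2 = fit_segment_lengths(samples)
    print(f"estimated segments: {l1:.3f} m and {l2:.3f} m")
    print("sphere at (0.40, 0.20) reachable:", can_reach(l1, l2, (0.40, 0.20)))
```

Once the toy arm has an estimate of its own proportions, it can answer questions like "can I touch that sphere?" before it moves. The real robot faces a far harder version of the same problem, since it has to learn its full three-dimensional shape from camera images.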

“Put simply, this robot has an inner eye and an inner monologue: It can see what it looks like from the outside, and it can reason with itself about how actions it has to perform would pan out in reality,” explains Josh Bongard, a roboticist at the University of Vermont who has previously collaborated with the paper’s authors.

As with many other forms of AI, running a simulation and having robots learn inside it demands a great deal of computing power, and the financial and environmental costs add up.

Letting a robot teach itself in the real world, however, opens up new possibilities. It requires less computation, and it resembles the way we learn to observe our own changing bodies.

“We have a coherent understanding of our self-body, what we can and cannot do, and once we figure this out, we carry over and update the abilities of our self-body every day,” Chen says.

Robots working in environments inaccessible to humans, such as deep inside the Earth or beyond its atmosphere, may benefit from this approach. Robots might also use these skills in everyday settings. An industrial robot, for instance, might recognize a problem and adjust its routine accordingly.

The researchers’ arm is merely a primitive first step in that direction. It is a far cry from the anatomy of even a simple animal, let alone a human.

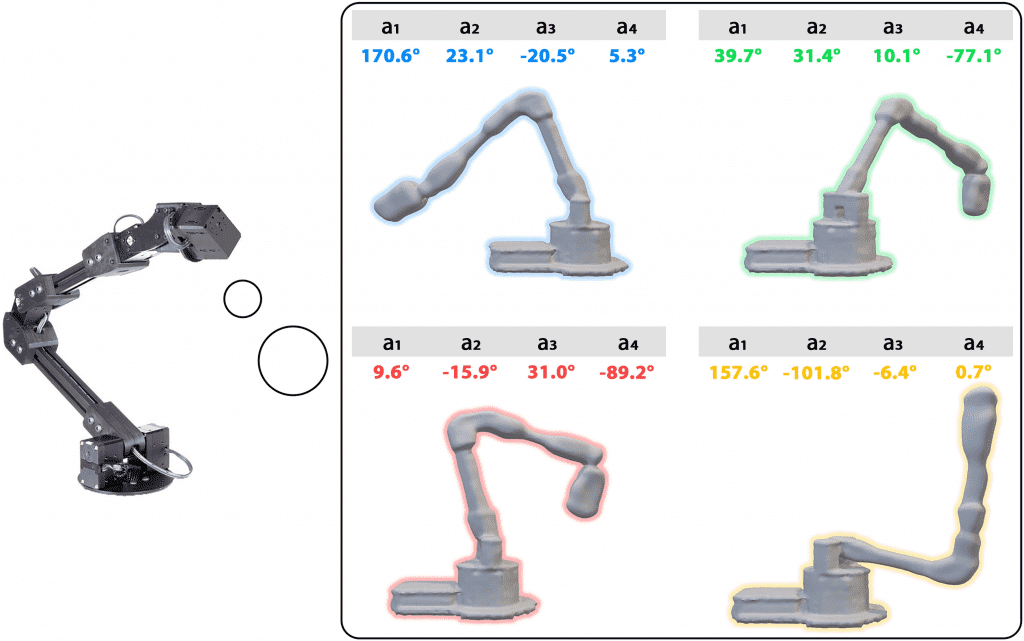

The machine has only four degrees of freedom, meaning it can move in only four independent ways. The researchers are now developing a robot with twelve degrees of freedom.

“The more complex you are, the more you need this self-model to make predictions. You can’t just guess your way through the future,” says Hod Lipson, a mechanical engineer at Columbia University and one of the paper’s authors.

“We’ll have to figure out how to do this with increasingly complex systems.”

For Lipson, making a robot that understands its own body is about more than building smarter robots in the future. He believes his team has created a robot that recognizes its own strengths and limitations.

“To me,” Lipson says, “this is the first step towards sentient robotics.”

The study was published in the journal Science Robotics on July 13.