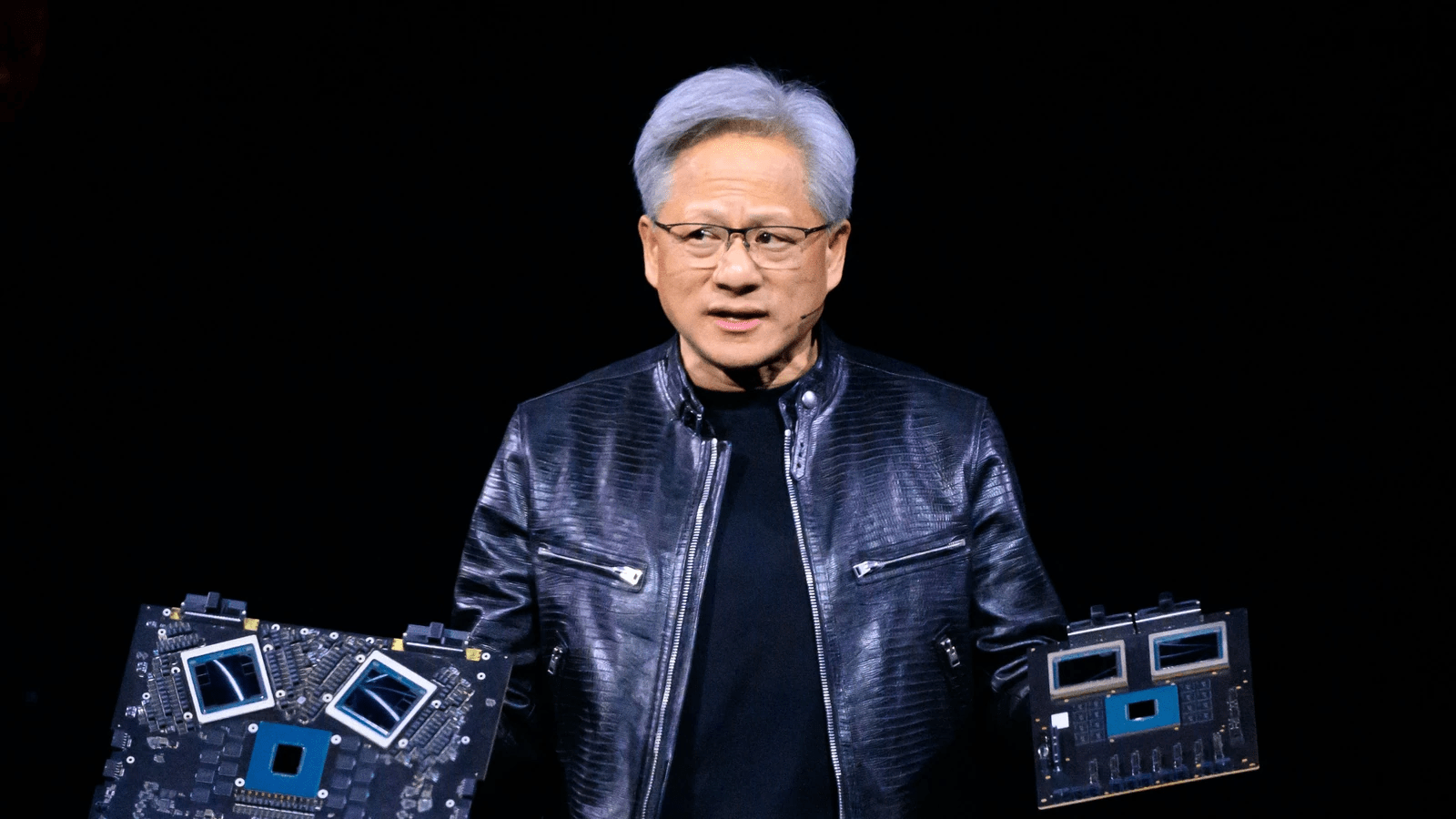

Nvidia, a leading American chipmaker, has unveiled its latest Blackwell computing platform, set to revolutionize the next generation of AI technologies for enterprises. Revealed at the firm’s annual GTC conference in San Jose, California, on March 18, the platform is named after David Harold Blackwell, a renowned mathematician specializing in statistics and game theory.

The Blackwell architecture aims to let organizations run real-time generative AI on trillion-parameter language models at what Nvidia claims is up to 25 times lower cost and energy consumption than its predecessor. Nvidia CEO and founder Jensen Huang stated, “Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution.”

The Blackwell GPU architecture introduces six new accelerated computing technologies that, according to Nvidia, open up business opportunities in sectors such as data processing, engineering simulation, electronic design automation, and generative AI. With features such as micro-tensor scaling, advanced dynamic range management, and support for encryption protocols, Blackwell is designed to improve system uptime, resilience, and security for large-scale AI deployments.

The new architecture also includes the latest NVLink interface, which enables high-speed communication among up to 576 GPUs for complex models. Nvidia also announced the Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms, designed to connect GB200-powered systems for high AI performance.

The Nvidia GB200 NVL72 is a multi-node, liquid-cooled, rack-scale system that uses Blackwell to provide computing power for trillion-parameter models. The system includes 36 Grace Blackwell Superchips interconnected by fifth-generation NVLink, along with Nvidia BlueField-3 data processing units that support GPU computing in hyperscale AI clouds.

Nvidia also announced the DGX SuperPOD, its next-generation AI supercomputer powered by the Nvidia GB200 Grace Blackwell Superchip. The DGX SuperPOD is designed to process trillion-parameter models with constant uptime for very large-scale AI training and inference workloads.

Nvidia’s Blackwell chips are expected to be adopted by cloud service providers, AI startups, consumers and local suppliers. Cloud service providers and telecommunications companies are among those advancing their artificial intelligence technology.