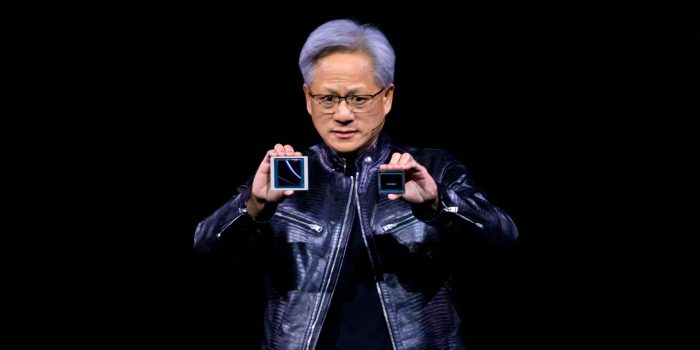

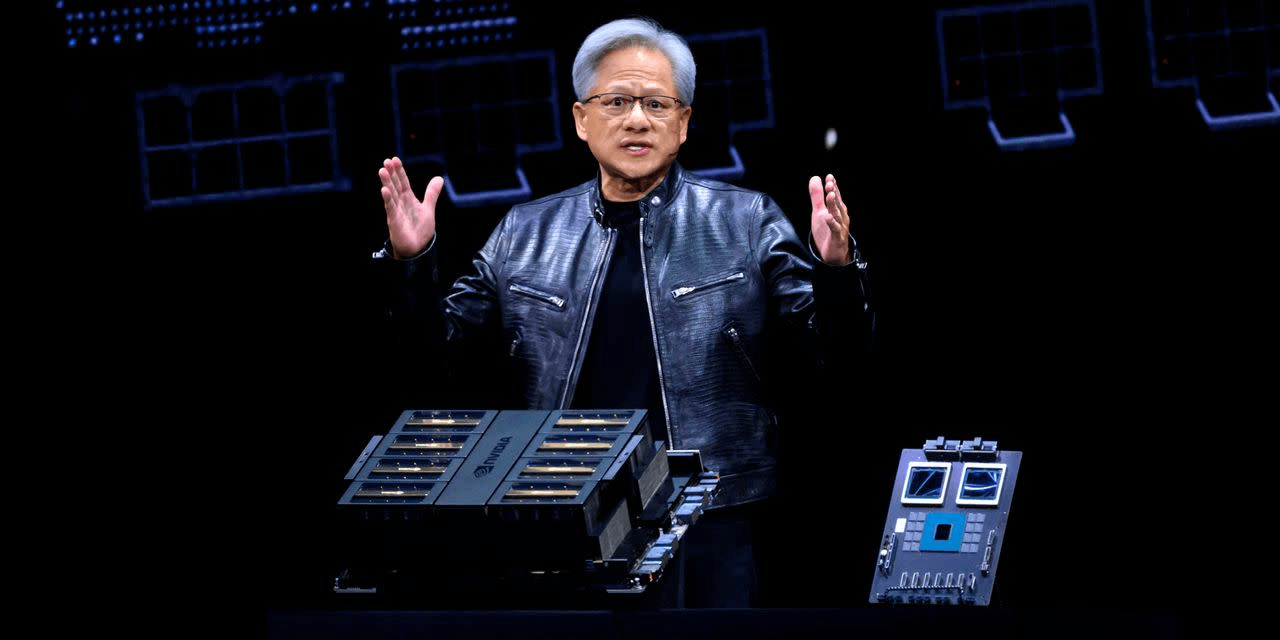

At Nvidia’s annual GTC developer conference, CEO Jensen Huang addressed the topic of artificial general intelligence (AGI), often referred to as “strong AI” or “human-level intelligence.” Unlike narrow AI, which is designed for specific tasks, AGI aims to achieve a broad range of intelligence that matches or exceeds that of humans. However, Huang noted that media coverage of the topic is often sensationalized, which leads to misquotes and misunderstandings.

One of the main concerns surrounding AGI is the unpredictability of its decision-making, which may diverge from human values. Science fiction has explored this concern for years. When asked about a timeline for AGI, Huang emphasized the importance of first defining clearly what AGI means. If AGI is defined as the ability to pass a specific set of tests, such as professional certification exams or logic tests, it could be achieved within five years, he said.

During the Q&A session, Huang was asked about AI hallucination, in which an AI gives answers that sound plausible but are not grounded in fact. Huang said this problem can be solved by making sure each answer is well researched. He pointed to a method called “retrieval-augmented generation,” in which the system, much like a diligent researcher, looks up information before giving an answer. For important answers, such as health advice, Huang recommended checking multiple sources.

Overall, Huang’s remarks point to the complexity and sophistication of AGI development. Although the potential benefits of general intelligence are great, its development also raises important ethical and philosophical questions. As the field of artificial intelligence continues to evolve, these issues must be addressed responsibly to ensure that general intelligence serves humanity.