Ever wondered who’s actually better: robots or their creators, the humans? Well, say no more. A group of researchers has developed a model that can predict how humans and robots would react to a given situation and how much each of them can be trusted to handle it. It gives us a nice look at just how good a robot can be at a human’s job.

Researchers at the University of Michigan have developed a bi-directional model that can predict how humans and robots perform in tasks that require human-robot collaboration. The study was presented in a paper published in IEEE Robotics and Automation Letters.

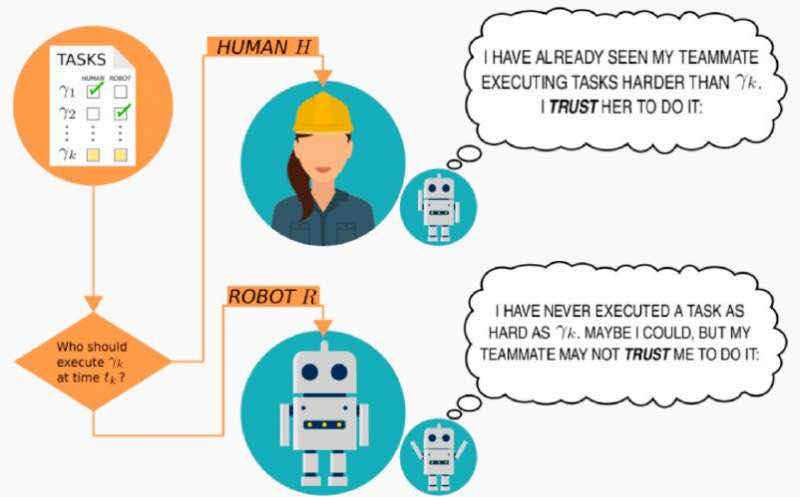

The study aims to help companies allocate tasks to robots and humans more effectively. According to Herbert Azevedo-Sa, who is part of the research team, “There has been a lot of research aimed at understanding why humans should or should not trust robots, but unfortunately, we know much less about why robots should or should not trust humans”. Collaborative tasks can be tricky for robots, since the concept of trust is unknown to them unless someone programs it in, but that’s highly unlikely. The model treats both humans and robots as agents.

Herbert gave further details on this concept, saying: “In truly collaborative work, however, trust needs to go in both directions. With this in mind, we wanted to build robots that can interact with and build trust in humans or in other agents, similarly to a pair of co-workers that collaborate”. Generally, when humans work in teams, it takes some time before the team reaches its true potential, as the members are still building trust with one another. Robots, if trained, could do this instantaneously.

The researchers tried to replicate the processes through which humans build trust and learn which tasks others can or cannot be trusted with. Because the team’s model can represent both human trust and robot trust, it can predict how much a human and a robot can trust each other, and on which tasks.

Herbert addressed this point, saying: “One of the big differences between trusting a human versus a robot is that humans can have the ability to perform a task well but lack the integrity and benevolence to perform the task. For example, a human co-worker could be capable of performing a task well, but not show up for work when they were supposed to or simply not care about doing a good job. A robot should thus incorporate this into its estimation of trust in the human, while a human only needs to consider whether the robot has the ability to perform the task well”.
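To make that asymmetry concrete, here is a minimal sketch, assuming each trust factor is a probability between 0 and 1 and that the factors are independent, so they can simply be multiplied. The names `TrustFactors` and `trust` are hypothetical and this is not the researchers’ actual formulation; it only illustrates the idea that a robot trusting a human weighs ability, integrity, and benevolence, while a human trusting a robot weighs ability alone.

```python
from dataclasses import dataclass


@dataclass
class TrustFactors:
    """Hypothetical per-task estimates, each a probability in [0, 1]."""
    ability: float            # chance the agent *can* do the task well
    integrity: float = 1.0    # chance it shows up and follows through
    benevolence: float = 1.0  # chance it actually cares about doing a good job


def trust(factors: TrustFactors, trustee_is_human: bool) -> float:
    """Estimate trust in an agent for one task (illustrative sketch only)."""
    if trustee_is_human:
        # A robot trusting a human weighs ability *and* willingness.
        # Multiplying the factors assumes they are independent (an assumption).
        return factors.ability * factors.integrity * factors.benevolence
    # A human trusting a robot only needs to consider ability.
    return factors.ability
```

With these assumptions, a highly capable but unreliable co-worker, `TrustFactors(ability=0.9, integrity=0.5)`, earns a trust of 0.45 as a human, while a robot with the same ability earns 0.9.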

The model provides a general representation of an agent’s capabilities, including information such as its aptitude, abilities, and trust factors. If the model predicts that an agent can complete a task, that agent’s trust score increases; if the prediction goes against the agent, the model’s trust in it is lowered.
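The update rule described above can be sketched as a simple additive trust score. This is a hedged illustration, not the published model: it assumes capabilities and task requirements are numbers between 0 and 1 per skill, that success is predicted only when every requirement is met, and that trust moves by a fixed, hypothetical step. `Agent`, `can_complete`, and `update_trust` are illustrative names, and the same `Agent` type is used for humans and robots alike.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical agent (human or robot) with per-skill capability levels in [0, 1]."""
    name: str
    capabilities: dict[str, float]
    trust: float = 0.5  # hypothetical starting trust score in [0, 1]


def can_complete(agent: Agent, requirements: dict[str, float]) -> bool:
    """Predict success only if every required skill meets the task's threshold."""
    return all(agent.capabilities.get(skill, 0.0) >= level
               for skill, level in requirements.items())


def update_trust(agent: Agent, requirements: dict[str, float], step: float = 0.1) -> float:
    """Raise trust when success is predicted, lower it otherwise, clamped to [0, 1]."""
    if can_complete(agent, requirements):
        agent.trust = min(1.0, agent.trust + step)
    else:
        agent.trust = max(0.0, agent.trust - step)
    return agent.trust


robot = Agent("robot", {"strength": 0.9, "dexterity": 0.4})
print(update_trust(robot, {"strength": 0.7}))   # heavy lifting: predicted success
print(update_trust(robot, {"dexterity": 0.8}))  # fine assembly: predicted failure
```

Here the lifting task nudges the robot’s score up to 0.6 and the assembly task pulls it back to 0.5. The fixed step and clamping keep the sketch simple; they are illustrative choices, not parameters from the study.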