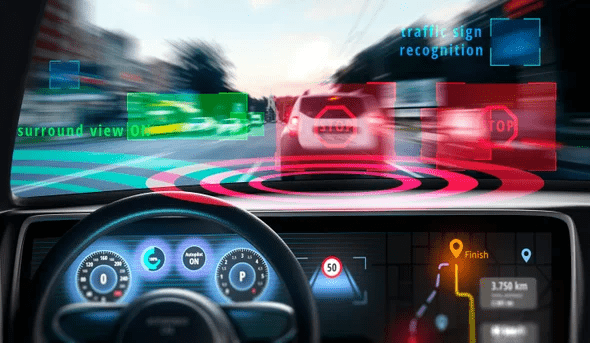

AI, for all its benefits, creates a host of problems for ordinary people and government officials alike. Consider the crashes involving Tesla’s self-driving feature: who should be held responsible and answerable in a court of law, the owner or the developer? A California jury may soon have to decide.

AI challenges conventional norms of liability. For example, how do we assign liability when a “black box” algorithm, one where no one knows what goes into the calculation or prediction, recommends a medical treatment that ultimately causes harm, or drives a car erratically before its human driver can react? Is the doctor or the driver at fault? Is the company that built the AI at fault? And what liability should everyone else (health systems, insurers, manufacturers, regulators) face if they encouraged adoption? These questions need answers, and soon, if AI is to be used responsibly in consumer products.

Like all disruptive technologies, AI is powerful. If properly built, tested, and monitored, AI algorithms can assist in diagnosis, market research, predictive analytics and any other application that requires analyzing large data sets. A McKinsey global survey found that more than half of companies worldwide already report using AI in their routine operations.

Yet liability inquiries too often focus on the easiest target: the end-user. They usually start and end with the driver of the car that crashed or the physician who made the wrong treatment decision.

Granted, end-users receive warnings and notifications meant to help them make sound and timely decisions. Even so, AI errors are often not the end-user’s fault. Who can fault an emergency room physician for an AI algorithm that misses papilledema, a swelling of the optic disc at the back of the eye? An AI’s failure to detect the condition could delay care and cause a patient to go blind. AI has immense potential, and holding end-users alone accountable will hamper its usefulness.

The key is to ensure that all stakeholders (users, developers, and everyone else in the chain from product development to use) bear enough liability to ensure AI safety and effectiveness, but not so much that they give up on AI.

We propose three ways to revamp traditional liability frameworks.

First, insurers must protect policyholders from the disproportionate costs of being sued over an AI injury. An independent safety system can provide AI investors with a predictable liability system that adjusts to new technologies and methods.

Second, some AI errors should be litigated in special courts with expertise in adjudicating AI cases. For example, in the U.S., specialist courts have protected childhood vaccine manufacturers for decades by adjudicating vaccine injuries and developing a deep knowledge of the field.

Third, regulatory standards from federal authorities like the U.S. Food and Drug Administration (FDA) or the National Highway Traffic Safety Administration (NHTSA) could offset excess liability for developers and some end-users. For example, federal regulations and legislation have replaced certain forms of liability for medical devices and pesticides. Regulators should deem some AIs too risky to introduce into the market without standards for testing, retesting or validation.

Hampering AI with an outdated liability system would be unjust. Self-driving cars are the future and will provide mobility to many people who need better access to transportation. In health care, AI will help physicians choose more effective treatments and improve patient outcomes. Industries ranging from finance to cybersecurity are on the verge of AI revolutions that could benefit billions worldwide. But 21st-century AI demands a 21st-century liability system if society is to reap its full benefits.