Capturing every edge case and feeding it into autonomous driving systems is more complicated than Elon Musk and Co. must have thought.

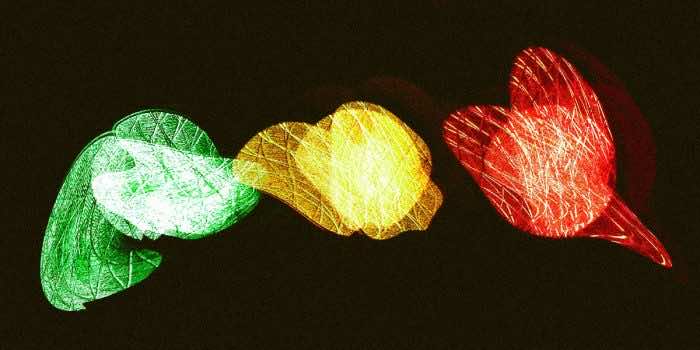

A Tesla Model 3 traveling on the highway at 130 km/h began displaying a continuous stream of traffic lights. The car, set to autonomous mode, was in fact following a truck hauling deactivated traffic lights, something the Model 3 could not make sense of given the data fed into its systems.

The traffic lights stayed at a constant distance while the Model 3 traveled at more than 80 mph because the truck hauling them was moving at a similar speed and stayed ahead of the Tesla, exposing yet another glitch in the autonomous driving mode.

People have reported many instances of Tesla’s autonomous driving systems failing, and that is because it is nearly impossible to feed in every eventuality; there is always room for improvement. The developers of autonomous driving systems have also come to realize that the real world is far different from the test track, and that a system that performs well on the track is not necessarily safe in the outside world.

Users post their stories online, describing what they found, often with a hashtag marking another Tesla glitch. The issue is not limited to exposing the shortcomings of autonomous driving tech; it goes far beyond that, because people have lost their lives after putting complete trust in the auto-drive mode.

“I guess this scenario was probably not part of the system’s training data,” University of Birmingham and MIT mathematician Max Little said on Twitter. “A good illustration of how it will likely be impossible to reach full driving autonomy just by recording ‘more data.’”

The driver who found the latest glitch said that Tesla’s and other autonomous driving systems could fail with deadly results because they cannot interpret what is going on around them and cannot match the human brain’s ability to make decisions. Thankfully, this time the system did not brake suddenly while on the highway, probably because it kept assessing the distance to the moving traffic lights.