In a significant step forward for robotics, the University of Tokyo’s latest creation, Alter3, is an advanced humanoid robot driven by artificial intelligence. Using OpenAI’s large language model (LLM) GPT-4, Alter3 generates spontaneous motions dynamically rather than replaying fixed routines.

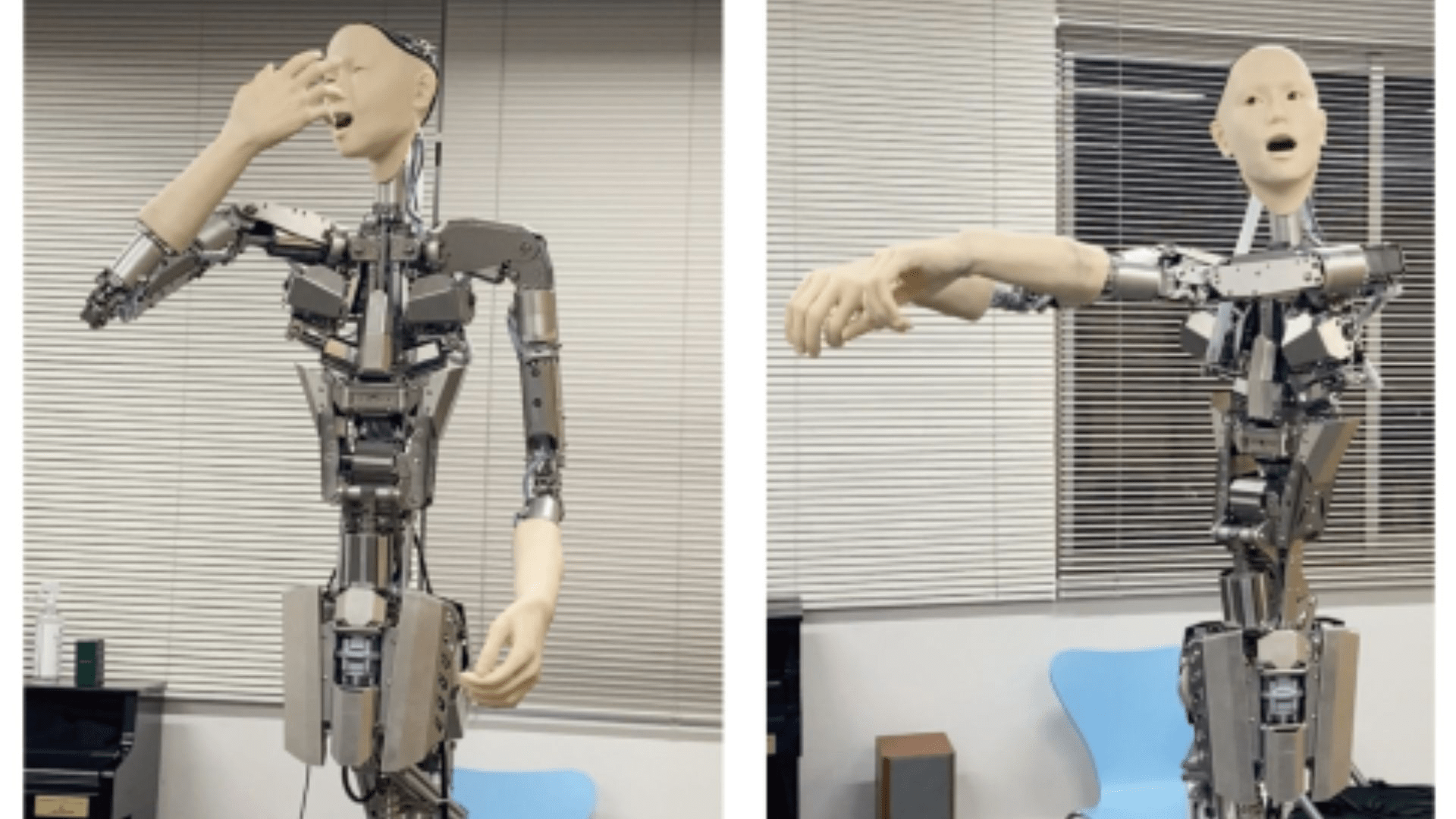

Alter3’s abilities are rooted in its integration of GPT-4, which allows it to assume diverse poses, from taking a casual selfie to playfully imitating a ghost. Unlike traditional robots that rely on pre-programmed motions, Alter3 generates its actions autonomously, responding dynamically to conversational cues. The University of Tokyo team describes this as a significant advancement and details it in a research paper posted on arXiv.

Traditionally, work on merging large language models (LLMs) with robots has focused on refining communication and simulating lifelike responses. The Japanese team takes this a step further by enabling an LLM to comprehend and execute complex instructions, enhancing the robot’s autonomy and functionality. To overcome the challenges of low-level robot control and hardware limitations, the team devised a method that translates descriptions of human movement into executable code for the android.

During interactions, Alter3 can receive a human command such as “Take a selfie with your iPhone” and, through queries to GPT-4, autonomously translate it into Python code for execution. This approach frees developers from manually programming each body part and lets users modify a pose or specify finer distinctions with little effort.
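The command-to-code flow described above might be sketched roughly as follows. This is a minimal illustration, not the team’s implementation: `FakeAndroid`, `set_axis`, the 0 to 255 value range, the axis numbers, and `fake_llm` (a canned stand-in for a GPT-4 query) are all assumptions made for the example. Only the count of 43 actuators comes from the article.

```python
from typing import Callable, Dict

NUM_AXES = 43  # actuator count reported in the article


class FakeAndroid:
    """Hypothetical stand-in for the robot: it only records axis values."""

    def __init__(self) -> None:
        self.axes: Dict[int, int] = {}

    def set_axis(self, axis: int, value: int) -> None:
        # The 0..255 range is an assumption made for this sketch.
        if not 1 <= axis <= NUM_AXES:
            raise ValueError(f"axis {axis} out of range")
        if not 0 <= value <= 255:
            raise ValueError(f"value {value} out of range")
        self.axes[axis] = value


def fake_llm(prompt: str) -> str:
    """Canned reply standing in for a GPT-4 query (no network call)."""
    return "set_axis(1, 200)\nset_axis(17, 64)\n"


def run_instruction(
    instruction: str,
    llm: Callable[[str], str],
    android: FakeAndroid,
) -> str:
    """Ask the LLM for motion code, run it against the android, return the code."""
    prompt = (
        "Write Python that calls set_axis(axis, value) to make the android "
        "perform this action: " + instruction
    )
    code = llm(prompt)
    # Expose only set_axis to the generated code. This restricts the
    # namespace but is NOT a security sandbox.
    exec(code, {"__builtins__": {}, "set_axis": android.set_axis})
    return code


android = FakeAndroid()
generated = run_instruction("Take a selfie with your iPhone", fake_llm, android)
print(generated)
print(android.axes)
```

In a real system the `llm` argument would wrap an actual model call, and generated code would need validation before it reaches hardware. Here the range checks in `set_axis` are the only safeguard.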

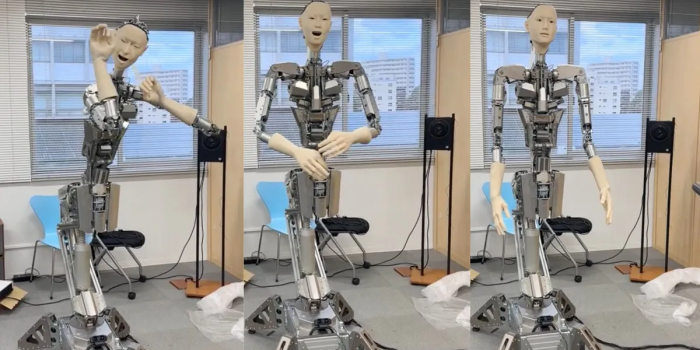

Alter3’s lower body is fixed to a stand, which limits its current capabilities, but the innovation in upper-body motion is noteworthy. Equipped with 43 actuators for facial expressions and limb movements, the robot mimics human poses and emotions with notable fluidity. Integrating GPT-4 removes the need for meticulous manual control of each actuator, fostering more nuanced and contextually relevant interactions.

The team envisions Alter3 effectively manifesting contextually relevant facial expressions and gestures, showcasing the potential for emotionally resonant interactions in humanoid robotics.