The emergence of AI-generated true crime victim videos on platforms like TikTok has raised ethical concerns and sparked a debate about the boundaries of entertainment and respect for the victims and their families. These videos feature AI-generated personas of murder victims recounting their gruesome deaths, often without content warnings or the consent of the families involved.

The videos, which receive millions of views, are designed to provoke strong emotional reactions and capitalize on the public’s interest in true crime. They have also drawn criticism for being disturbing and for their potential to retraumatize victims’ loved ones. Paul Bleakley, an assistant professor of criminal justice, says the videos are uncomfortable to watch and speculates that the discomfort may be deliberate, a way to draw attention.

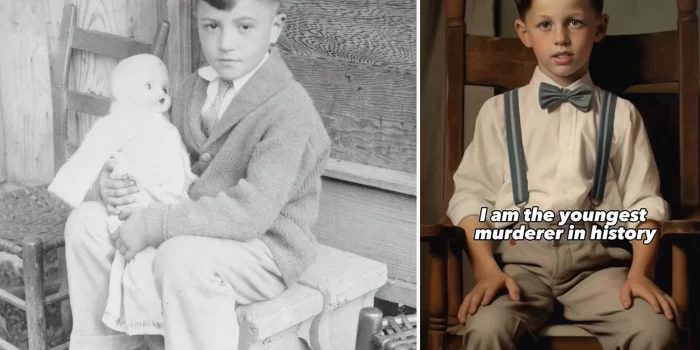

To circumvent potential legal issues and comply with TikTok’s guidelines, the accounts producing these videos use AI-generated images that do not resemble the actual victims. While this may seem like a way to “respect the family,” it also raises questions about the platform’s role in regulating such content and the potential harm it may cause.

The proliferation of AI true crime victim videos on TikTok adds to the ongoing debate surrounding the ethics of the true crime genre as a whole. Critics argue that consuming real-life stories of assaults and murders for entertainment purposes may trivialize the gravity of these crimes and retraumatize the families of the victims.

Furthermore, the creation of deepfake videos raises legal concerns. Although no federal laws explicitly address nonconsensual deepfake videos, some states have banned deepfake pornography, and there are calls for legislation to criminalize their dissemination. In the case of AI true crime videos, grieving families might consider pursuing civil litigation, particularly if the videos are monetized.

The future of true crime material remains uncertain given the rapid advancement of AI technology. As AI capabilities improve, creators might be able to replicate not just the voices of criminals but also graphic elements of the crimes themselves. It is hard to foresee how far this trend will go because the technology remains largely unregulated.

In the end, it is crucial to strike a balance between the public’s interest in true crime and the rights and well-being of the victims and their families. The ethical implications and potential harm caused by AI-generated true crime victim videos highlight the need for responsible content creation and a thoughtful approach to the evolving world of AI technology.