Researchers from Universidad Complutense de Madrid (UCM) and Universidad Carlos III de Madrid (UC3M) have developed a deep learning-based model that lets a humanoid robot sketch in real time, mirroring the creative process of a human artist.

Unlike conventional AI-generated art, which typically exists only as a digital image, this humanoid robot uses deep reinforcement learning to build sketches stroke by stroke, replicating the nuanced approach of human drawing. Raúl Fernandez-Fernandez, a co-author of the paper, clarified that the objective was not to create a robot capable of intricate paintings but rather to establish a physically adept robot painter.

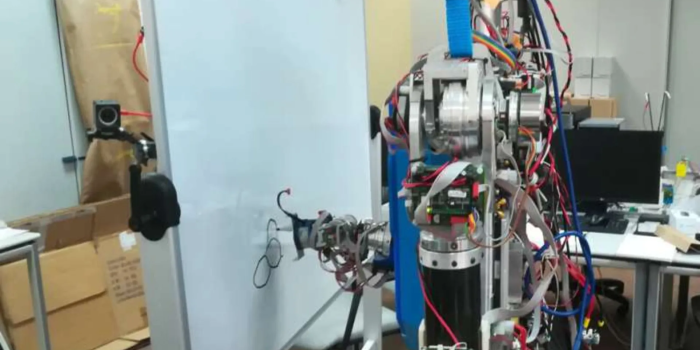

Inspired by previous works, the researchers drew on the Quick, Draw! dataset to train robotic painters and on deep Q-learning to execute complex trajectories that can incorporate emotional features. The resulting robotic sketching system is built on a deep Q-learning framework that plans the robot's actions for precise manual tasks in diverse environments.

The architectural framework comprises three interconnected networks: a global network extracting high-level features, a local network capturing low-level features around the painting position, and an output network generating subsequent painting positions. Additional channels, providing distance and tool information, guide the training process and enhance the robot’s sketching capabilities.
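
The split between global and local inputs can be illustrated with a small sketch. The Python below only prepares the inputs such networks might receive; it is not the authors' implementation. The function names, the 5x5 patch size, the normalized Euclidean distance channel and the pen-up/pen-down tool channel are all assumptions made for illustration.

```python
import math

def local_patch(canvas, pos, size=5):
    """Crop a size x size window centred on pos, zero-padding past the canvas edge.

    Hypothetical helper: the patch size is an assumption, not taken from the paper.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the patch has a centre cell")
    half = size // 2
    rows, cols = len(canvas), len(canvas[0])
    patch = []
    for r in range(pos[0] - half, pos[0] + half + 1):
        row = []
        for c in range(pos[1] - half, pos[1] + half + 1):
            inside = 0 <= r < rows and 0 <= c < cols
            row.append(canvas[r][c] if inside else 0.0)
        patch.append(row)
    return patch

def distance_channel(shape, pos):
    """Per-cell Euclidean distance to pos, scaled to [0, 1] by the canvas diagonal."""
    rows, cols = shape
    diag = math.hypot(rows - 1, cols - 1) or 1.0  # avoid dividing by zero on a 1x1 canvas
    return [[math.hypot(r - pos[0], c - pos[1]) / diag for c in range(cols)]
            for r in range(rows)]

def tool_channel(shape, pen_down):
    """Constant plane: 1.0 while the tool touches the canvas, 0.0 otherwise (assumed encoding)."""
    rows, cols = shape
    value = 1.0 if pen_down else 0.0
    return [[value] * cols for _ in range(rows)]

def build_observation(canvas, pos, pen_down, patch_size=5):
    """Bundle the inputs for a global branch (full canvas plus extra channels)
    and a local branch (patch around the current painting position)."""
    shape = (len(canvas), len(canvas[0]))
    return {
        "global": [canvas, distance_channel(shape, pos), tool_channel(shape, pen_down)],
        "local": local_patch(canvas, pos, patch_size),
    }
```

In a full system, an output network would take the features extracted from both branches and score candidate next painting positions; that part is omitted here.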

To emulate human-like painting skills, the researchers introduced a pre-training step using a random stroke generator, double Q-learning to address overestimation issues, and a custom reward function. They also incorporated a sketch classification network for flexibility in the painting process.
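
To see why double Q-learning helps with overestimation, it is useful to compare its training target with the standard one. The sketch below uses the textbook formulation, in which the online network picks the next action and a separate target network evaluates it. The function names, the discount factor and the example Q-values are illustrative assumptions, not details from the paper.

```python
def single_q_target(reward, next_q_target, gamma=0.99, done=False):
    """Standard Q-learning target: the same estimates both select and evaluate
    the next action, which tends to bias the target upward."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)

def double_q_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double Q-learning target: the online estimates select the action,
    the target estimates evaluate it."""
    if done:
        return reward
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best_action]

# Made-up Q-values for three candidate actions in the next state.
next_q_online = [1.0, 2.0, 0.5]   # online network prefers action 1
next_q_target = [0.8, 1.5, 3.0]   # target network has a noisy spike on action 2

print(single_q_target(1.0, next_q_target, gamma=0.5))                  # 2.5
print(double_q_target(1.0, next_q_online, next_q_target, gamma=0.5))   # 1.75
```

In this toy case the standard target latches onto the noisy spike, while the double Q-learning target uses the value of the action the online network actually prefers.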

To overcome the challenge of transferring AI-generated images to a real-world canvas, the team created a discretized virtual space within the physical canvas. The robot moves within this space, translating the painting positions provided by the model into positions on the canvas.
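
One way to picture such a discretized space is as a grid laid over the canvas, with each grid cell mapped to a physical point the robot can reach. The sketch below is a minimal illustration under assumed conventions: a rectangular canvas, cell centres as targets, and hypothetical names and units (`cell_to_canvas`, `canvas_origin`, centimetres). The actual calibration used by the team is not described here.

```python
def cell_to_canvas(cell, grid_shape, canvas_origin, canvas_size):
    """Map a (row, col) grid cell to the (x, y) centre of that cell on the canvas.

    canvas_origin is the physical position of the grid's top-left corner and
    canvas_size is (width, height), both in the same unit (assumed convention).
    """
    row, col = cell
    rows, cols = grid_shape
    if not (0 <= row < rows and 0 <= col < cols):
        raise ValueError(f"cell {cell} lies outside a {rows}x{cols} grid")
    x0, y0 = canvas_origin
    width, height = canvas_size
    x = x0 + (col + 0.5) * width / cols
    y = y0 + (row + 0.5) * height / rows
    return (x, y)

def stroke_to_waypoints(cells, grid_shape, canvas_origin, canvas_size):
    """Convert a sequence of grid cells chosen by the model into canvas waypoints."""
    return [cell_to_canvas(c, grid_shape, canvas_origin, canvas_size) for c in cells]

# Example: a 2x4 grid over a 40 cm x 20 cm canvas whose corner sits at (10, 5).
waypoints = stroke_to_waypoints([(0, 0), (1, 3)], (2, 4), (10.0, 5.0), (40.0, 20.0))
print(waypoints)  # [(15.0, 10.0), (45.0, 20.0)]
```

A real robot would still need inverse kinematics and motion planning to reach each waypoint; the mapping only bridges the model's discrete output and the physical canvas.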

Fernandez-Fernandez emphasized the significance of introducing advanced control algorithms into real robot painting applications. He expressed confidence in the potential of deep Q-learning frameworks for original, high-level applications beyond classical problems.

The researchers hope their work will inspire further studies, and they plan to explore integrating emotions into robot control applications. Published in the journal Cognitive Systems Research, this development marks a significant stride in the convergence of AI and robotics, opening possibilities for robots to take part in creative processes resembling human artistic endeavors.

As technology advances, the synergy between artificial intelligence and physical robotics is poised to yield innovative applications beyond conventional problem-solving.