The article discusses a significant development in humanoid robotics: the new capabilities of the “01 robot” built by Figure, the company led by Brett Adcock. According to Adcock, the robot has achieved a “ChatGPT moment,” meaning it can now observe humans performing tasks, build its own understanding of how to perform them, and then execute those tasks autonomously.

The demand for general-purpose humanoid robots capable of handling various jobs is emphasized. These robots need to comprehend tools, devices, objects, techniques, and objectives that humans use in diverse dynamic working environments. The key is their ability to be flexible, adaptable, and capable of learning new tasks without constant programming assistance.

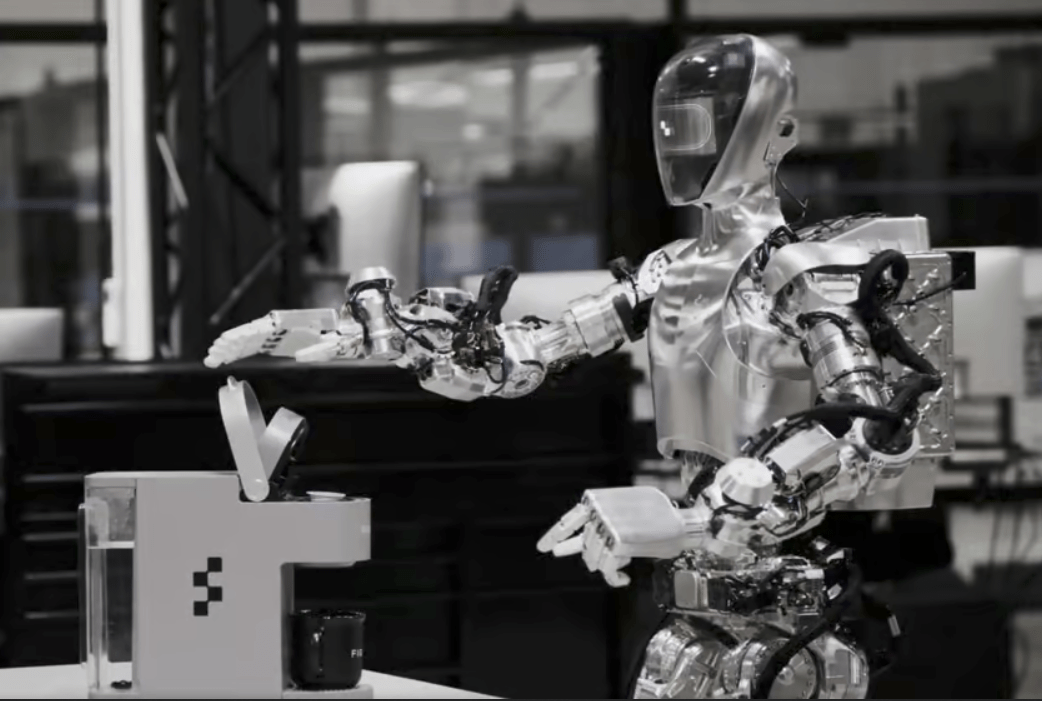

The article highlights the distinction between Figure and other companies, like Toyota, that use bench-based robot arms for research purposes. In contrast, Figure, along with companies like Tesla and Agility, focuses on developing self-sufficient full-body humanoids intended for practical use in any workplace. Adcock says he aims to have the 01 robot deployed and performing useful tasks around Figure’s premises by the end of 2023, which signals a commitment to practical applications rather than research alone.

The breakthrough demonstrated by Figure’s robot lies in its watch-and-learn capability. After 10 hours of studying video footage, the robot successfully operates a Keurig coffee machine in response to a verbal command. While the task may seem simple, its significance lies in the autonomy the robot has achieved: it can now add new actions to its repertoire, and those actions can be shared with other Figure robots through swarm learning.

The article anticipates that, if the learning process proves effective across a range of tasks, similar demonstrations could become frequent. Tasks could range from peeling bananas to using tools like spanners, drills, angle grinders, and screwdrivers. The ultimate vision is for these humanoid robots to perform complex, multi-step tasks, such as making coffee and delivering it without spilling, by combining their physical capabilities with large language model AI.

The author encourages readers not to focus solely on the coffee-making demonstration itself but to watch this space for further advancements. The expectation is that, if Figure’s robot truly can watch and learn, 2024 could see a significant acceleration in the commercial deployment of humanoid robots.

The article concludes by reflecting on the revolutionary nature of humanoid robots. It suggests that, with the rise of language model AIs like GPT, human intelligence may not be as special as it once was. The integration of humanoid robots into society could lead to a profound technological and societal upheaval, potentially on par with historical revolutions. The author expresses a sense of unease about the implications of this rapid technological advancement, contemplating the potential for a future where human labor is significantly replaced by these advanced robotic entities.