A millimeter may not seem like much. Yet even a distance that small is enough to change how fast time passes.

According to Einstein’s theory of gravity, general relativity, clocks tick faster the farther they are from Earth or any other massive object. In theory, this should hold even for tiny differences in clock height. Now, an exceptionally sensitive atomic clock has detected the speedup across a millimeter-sized sample of atoms, revealing the effect over a smaller height difference than ever observed before. The study found that time flowed slightly faster at the top of the sample than at the bottom.

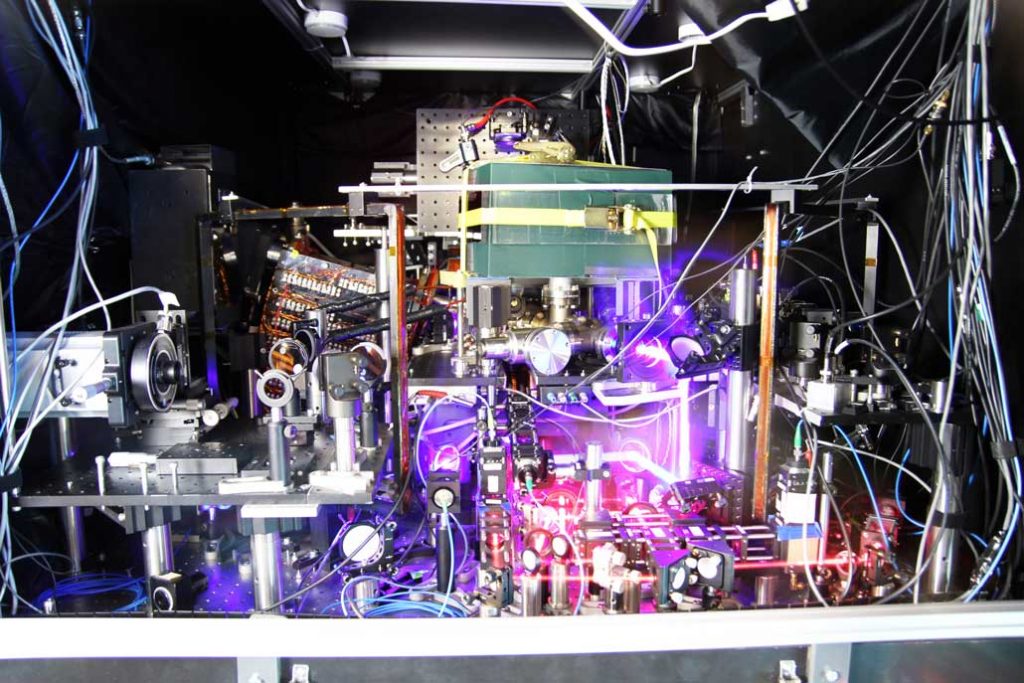

In the study, physicist Jun Ye of JILA in Boulder, Colorado, and colleagues used a clock made of 100,000 ultracold strontium atoms. The atoms were held in a lattice, so they sat at different heights, as if standing on the rungs of a ladder. By mapping how the clock’s frequency shifted across those heights, the team found a difference between the top and bottom of the sample.

This difference is the gravitational redshift: a clock sitting deeper in a gravitational field ticks more slowly, so when two identical clocks at different heights are compared, the lower one runs at a slightly lower frequency. Because the effect is exactly what general relativity predicts, the researchers were not surprised by the figure, however small it was.
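For small heights near Earth’s surface, the standard weak-field estimate of this shift, assuming a uniform gravitational acceleration g, relates the fractional frequency difference Δf/f between two identical clocks to their height difference Δh and the speed of light c. Plugging in a 1 mm separation gives a rough sense of the scale:

$$
\frac{\Delta f}{f} \approx \frac{g\,\Delta h}{c^{2}} \approx \frac{(9.81\ \mathrm{m\,s^{-2}})(1\times10^{-3}\ \mathrm{m})}{(3.00\times10^{8}\ \mathrm{m\,s^{-1}})^{2}} \approx 1.1\times10^{-19}
$$

That is a difference of roughly one part in 10¹⁹.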

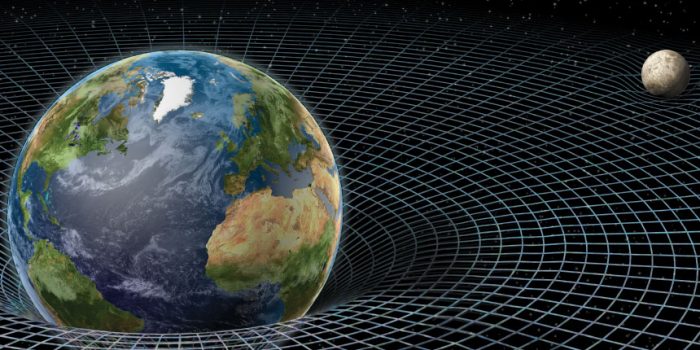

In general relativity, space and time are not separate: the Universe is described as a single four-dimensional fabric called spacetime. Anything with mass curves that fabric, changing its shape.

As a result, a second ticked off by a clock near a massive object such as Earth is not the same length as a second ticked off farther away. Even for warping as slight as Earth’s, the theory’s equations are precise and well tested enough to predict this difference across extremely short distances.
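To put numbers on this, the short Python sketch below uses the standard first-order approximation Δf/f ≈ gΔh/c² to estimate how much extra time a higher clock accumulates over a year. The constant `G_EARTH` (a typical 9.81 m/s²) and the helper names `fractional_shift` and `time_gained` are illustrative assumptions rather than anything from the study, and the approximation ignores local variations in gravity.

```python
# Minimal sketch of weak-field gravitational time dilation near Earth's surface.
# Assumptions: uniform local gravity and the first-order approximation
#   delta_f / f ~= g * delta_h / c^2, valid for small height differences.

G_EARTH = 9.81                          # m/s^2, assumed typical surface gravity
C = 299_792_458.0                       # m/s, speed of light (exact by definition)
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year, in seconds


def fractional_shift(delta_h_m: float, g: float = G_EARTH) -> float:
    """Fractional rate difference between two clocks separated vertically by delta_h_m metres."""
    return g * delta_h_m / C**2


def time_gained(delta_h_m: float, duration_s: float) -> float:
    """Extra time (in seconds) the higher clock accumulates over duration_s."""
    return fractional_shift(delta_h_m) * duration_s


if __name__ == "__main__":
    for label, h in [("1 mm", 1e-3), ("1 m", 1.0)]:
        extra = time_gained(h, SECONDS_PER_YEAR)
        print(f"{label}: rate shift {fractional_shift(h):.2e}, gain per year {extra:.2e} s")
```

Under these assumptions, a 1 mm separation gives a rate shift of about 1.1 × 10⁻¹⁹, which adds up to roughly 3.4 picoseconds per year; a 1 m separation gives about 3.4 nanoseconds per year.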

However, there is a chance that general relativity is wrong. Quantum mechanics, another rigorously tested field of physics, holds that measuring one property of a system more precisely limits how precisely certain other properties can be known. As reliable as these two monolithic disciplines are, they do not get along. For one thing, they treat time very differently: in quantum mechanics time is a fixed background parameter, while in general relativity it is woven into the dynamic geometry of spacetime.
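The best-known version of this trade-off is Heisenberg’s uncertainty relation for position and momentum, where σ_x and σ_p are the standard deviations of a particle’s position and momentum and ħ is the reduced Planck constant:

$$
\sigma_x \, \sigma_p \ge \frac{\hbar}{2}
$$

Squeezing σ_x smaller forces σ_p to grow, so the two can never both be pinned down exactly.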

At a fundamental level, the smooth, gently curving spacetime of general relativity would turn into a mess under a quantum microscope, because of the fuzziness in properties described above. Anyone looking to merge the two theories therefore faces a dilemma. What physicists need is evidence that one theory or the other fails, which could mean finding exactly where their predictions go wrong.

A few years ago, researchers recorded a shift in the relative frequency of light emitted by atoms separated by a vertical distance of slightly more than 30 centimeters.

To push the measurement further, the researchers used a specially designed cavity that lowered the density of the atoms by an order of magnitude, and shrank the height difference being probed from centimeters to a few millimeters. They then loaded 100,000 strontium atoms into the chamber and slowed them almost to a standstill by removing as much heat as possible.
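Shrinking the height makes the measurement much harder, because in the weak-field approximation the expected shift scales linearly with height. This hypothetical sketch compares 0.30 m (a round figure for the earlier “slightly more than 30 centimeters”) with a 1 mm span; the constants and the `fractional_shift` helper are illustrative assumptions, not values from the study.

```python
# Hypothetical comparison of expected gravitational redshifts at two heights,
# assuming uniform gravity and delta_f / f ~= g * delta_h / c^2.

G_EARTH = 9.81            # m/s^2, assumed typical surface gravity
C = 299_792_458.0         # m/s, speed of light


def fractional_shift(delta_h_m: float, g: float = G_EARTH) -> float:
    """First-order fractional frequency shift across a height difference (metres)."""
    return g * delta_h_m / C**2


earlier_shift = fractional_shift(0.30)   # round figure for the ~30 cm experiment
mm_shift = fractional_shift(1e-3)        # millimeter-scale atom sample
ratio = earlier_shift / mm_shift         # how much smaller the new signal is

print(f"30 cm: {earlier_shift:.1e}  1 mm: {mm_shift:.1e}  ratio: {ratio:.0f}")
```

Under these assumptions the millimeter-scale signal is about 300 times smaller, so the clock has to resolve correspondingly finer frequency differences.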

Next, by comparing the light emitted from the top and bottom of the atom stack, they corrected for effects unrelated to gravity. After 92 hours of watching these miniature clocks tick, their averaged result came close to the outcome general relativity predicts.
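Long averaging matters because, if each top-versus-bottom comparison carries independent random noise, the uncertainty of the mean falls as σ/√N. The simulation below is a toy illustration of that statistical principle only, not the team’s analysis: `TRUE_SHIFT` is set to the roughly 1.1 × 10⁻¹⁹ expected for 1 mm, and `NOISE_PER_RUN` is an invented per-measurement noise level, not a figure from the study.

```python
# Toy illustration: averaging many noisy comparisons to resolve a tiny shift.
# Assumptions (hypothetical): each comparison has independent Gaussian noise
# of fixed size; real clock noise is more complicated than this.
import math
import random
import statistics

TRUE_SHIFT = 1.09e-19     # hypothetical top-minus-bottom fractional shift (~1 mm)
NOISE_PER_RUN = 1e-17     # hypothetical noise on a single comparison (invented)


def standard_error(sigma: float, n: int) -> float:
    """Expected uncertainty of the mean of n independent samples with spread sigma."""
    return sigma / math.sqrt(n)


def simulate(n_runs: int, seed: int = 0) -> float:
    """Average n_runs simulated noisy measurements of TRUE_SHIFT."""
    rng = random.Random(seed)
    samples = [rng.gauss(TRUE_SHIFT, NOISE_PER_RUN) for _ in range(n_runs)]
    return statistics.fmean(samples)


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        est = simulate(n)
        err = standard_error(NOISE_PER_RUN, n)
        print(f"N={n:>9,}: mean={est:.2e}, expected error ~{err:.1e}")
```

With these made-up numbers, a million simulated comparisons bring the expected error down to about 1 × 10⁻²⁰, small enough to resolve the shift; a single comparison, by contrast, is swamped by noise.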

The difference between the gravitationally redshifted emissions was so small that it set a record for the smallest such shift ever detected, and the measurement was 100 times more precise than any previous one.

It’s not a theory-defying result, but it does show how the technology can be shrunk to the scale needed to iron out weaknesses in two of physics’ most fundamental theories.

The study has not yet been peer reviewed, but the results are available on arXiv.