You have probably heard the phrase “Every coin has two faces.” Technology is no different: it is not inherently good or bad, and it is how we use it that makes it the best or the worst. With the recent surge in AI and machine learning, however, many people have started using these skills for malicious purposes, especially to land remote jobs. The COVID-19 pandemic accelerated the shift to remote work and made remote jobs far more common. According to the FBI, this shift has been accompanied by a sharp rise in AI-enabled fraud targeting remote job openings.

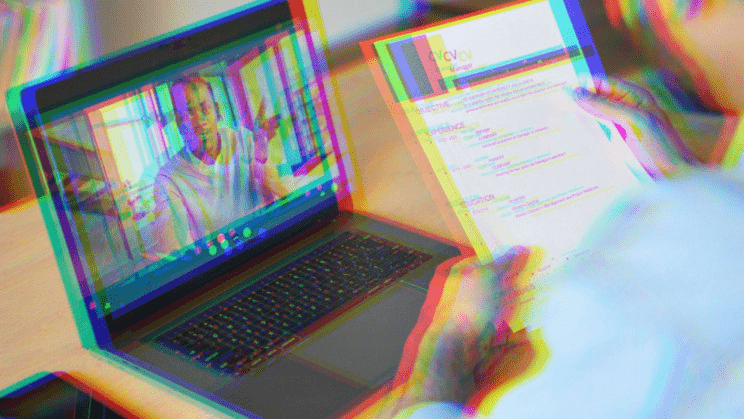

Companies offering remote jobs are struggling to select the right candidates because some applicants use fake audio or video during their online interviews. On June 28th, the FBI's Internet Crime Complaint Center (IC3) reported an increase in complaints about applicants who use another person's stolen “Personally Identifiable Information (PII)” to apply and interview, deceiving the interviewers. Some go further and manipulate audio, video, or recordings of a real, credible person to impersonate them.

Information technology, computer programming, database, and software-related jobs have become the main targets of these fraudsters. Some of these positions grant access to personally identifiable information, financial credentials, and company databases, so a fraudulent hire could ultimately harm the company, its customers, or both. The forgeries are not always convincing, though. The FBI noted that the lip movements and actions of the person on camera often did not match the voice being heard, and that coughing, sneezing, and similar sounds were not synchronized with what appeared on screen during the interviews.

According to Dr. Matthew Caldwell, “Unlike many traditional crimes, crimes in the digital realm can be easily shared, repeated, and even sold, allowing criminal techniques to be marketed and for crime to be provided as a service. This means criminals may be able to outsource the more challenging aspects of their AI-based crime.” A 2020 study published in the journal Crime Science likewise identified deepfakes as one of the most serious AI-enabled crime threats.