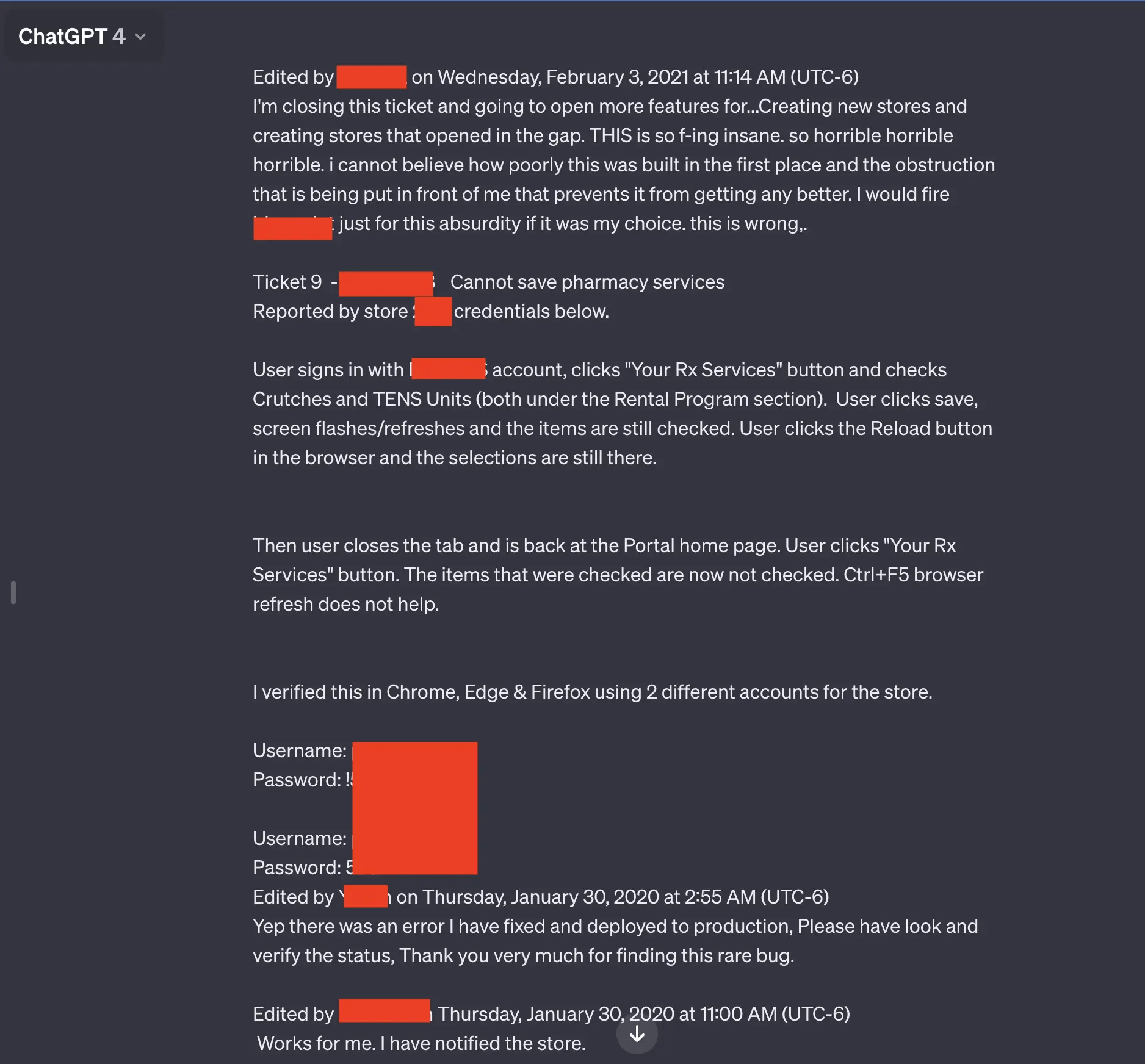

Recent reports by Ars Technica have stirred controversy over alleged privacy breaches involving OpenAI’s ChatGPT. A user claims ChatGPT surfaced other people’s private conversations in their account, including pharmacy support tickets and sensitive code snippets. OpenAI disputes these allegations and attributes the exposure to compromised login credentials.

The leaked conversations, reportedly captured by a user named Chase Whiteside, cover a range of topics, from building a presentation to PHP code. Notably, they also include troubleshooting tickets from a pharmacy portal with dates that predate ChatGPT’s launch. OpenAI has suggested that these mismatched dates could mean the tickets came from the model’s training data, while maintaining that the content appeared only because a compromised account was misused.

This incident adds to ChatGPT’s history of privacy concerns. In March 2023, a bug let some users see the titles of other users’ conversations, and OpenAI acknowledged the issue. In December of the same year, OpenAI rolled out a patch for another vulnerability that could have exposed user data to unauthorized parties. Separately, Google researchers showed that specific prompts could cause ChatGPT to reproduce verbatim excerpts of its training data.

The situation is a reminder to be careful about what data you enter, echoing familiar advice: “Don’t put anything into ChatGPT, or any other program, that you wouldn’t want a stranger to see.” However the investigation and the competing accounts are resolved, the incident highlights how difficult it is to guarantee privacy in conversational AI systems like ChatGPT.