Nvidia CEO Jensen Huang said on Tuesday that Blackwell, the company’s next-generation graphics processor for artificial intelligence, will cost between $30,000 and $40,000 per unit.

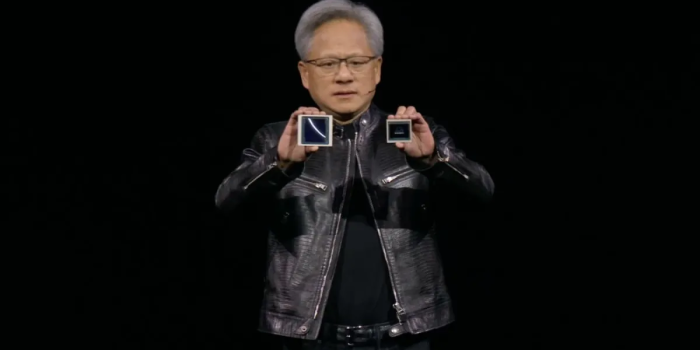

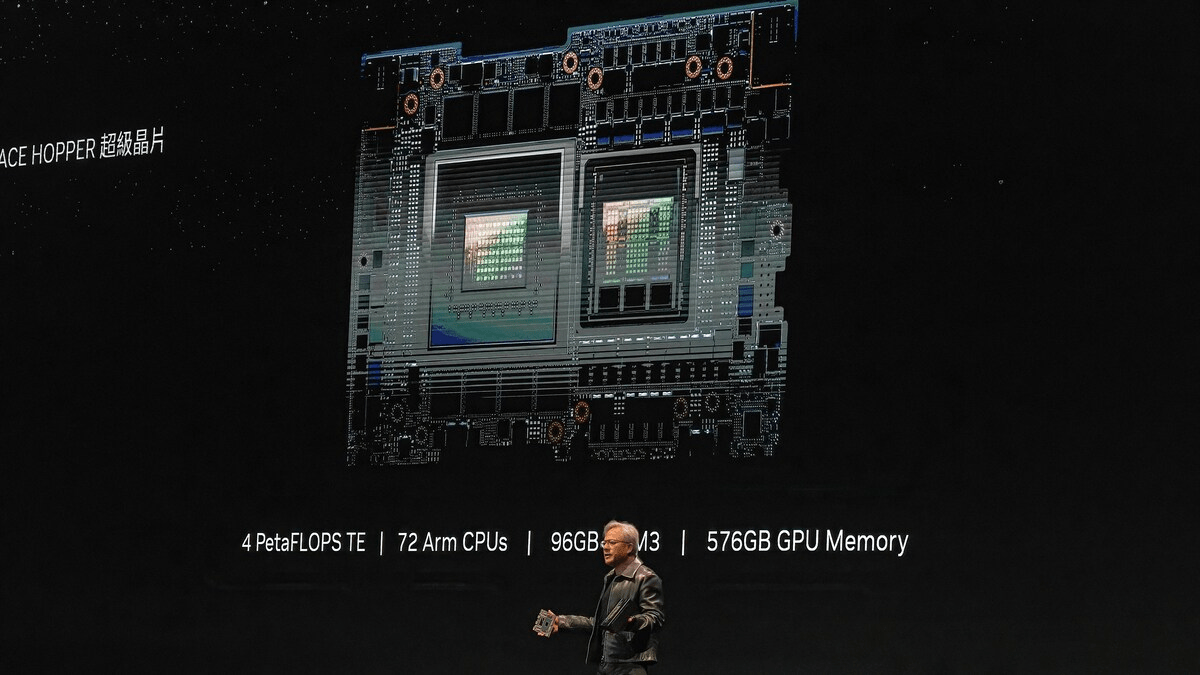

Holding up a Blackwell chip, Huang remarked, “We had to invent some new technology to make it possible.” He estimated that Nvidia spent almost $10 billion on research and development.

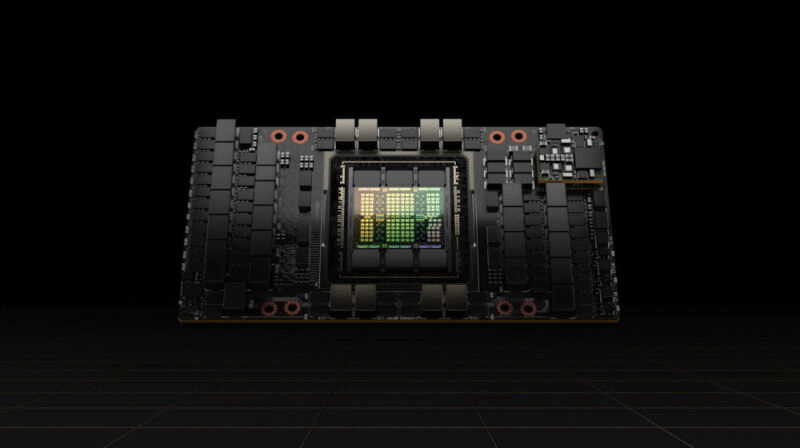

The pricing suggests that the chip, which is expected to be in high demand for training and deploying AI software such as ChatGPT, will fall in the same range as its predecessor, the H100, also known as Hopper, which costs between $25,000 and $40,000 per chip, according to analyst estimates. When the Hopper generation of Nvidia’s AI processors was released in 2022, it represented a significant price increase over the preceding generation.

Huang later clarified to CNBC’s Kristina Partsinevelos that the price covers not just the chip itself but also building data centers and integrating them with those of other businesses.

Nvidia announces a new generation of AI processors every two years. Newer models, like Blackwell, are generally faster and more energy efficient, and Nvidia takes advantage of the publicity around each new generation to increase GPU sales. Blackwell combines two chips and is physically larger than the previous generation.

Since OpenAI’s ChatGPT was unveiled in late 2022, Nvidia’s quarterly sales have tripled thanks to its AI processors. Over the past year, the majority of the leading AI developers and firms have been training their AI models with Nvidia’s H100.

Nvidia does not disclose the list price of its chips, which are available in multiple configurations. The price that an end user such as Microsoft or Meta pays depends on several factors, including the volume of chips purchased and whether the customer buys the chips directly from Nvidia as part of a complete system or through a vendor such as Supermicro, Dell, or HP that builds AI servers. Some servers are built with up to eight AI GPUs.