NVIDIA has once again raised the bar in artificial intelligence (AI) with the announcement of its latest supercomputer, the NVIDIA DGX GH200. The system is built to speed up AI model training, and its effects could eventually reach the job market. With a claimed performance of 1 exaflop, it is the latest product of NVIDIA’s push toward cutting-edge hardware.

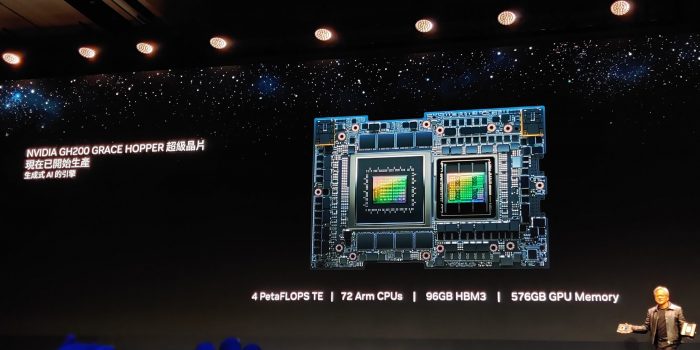

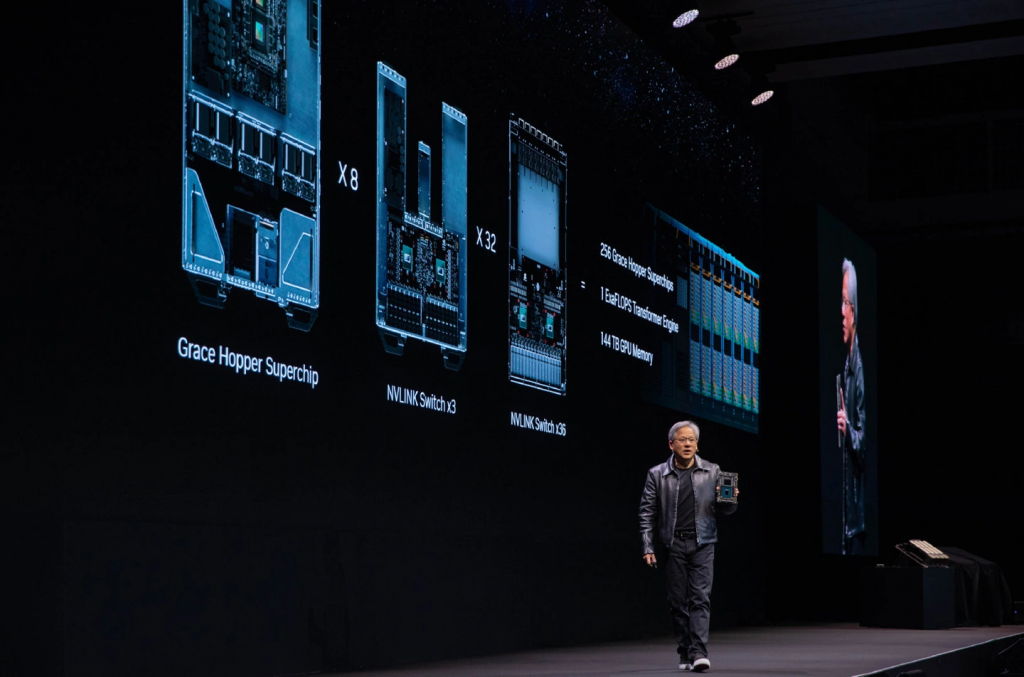

The DGX GH200 is built from 256 GH200 “superchips” and provides 144 TB of shared memory. That is roughly 500 times the memory of its predecessor, the DGX A100, which was unveiled just three years earlier. Each GH200 superchip pairs an NVIDIA Grace CPU with an H100 Tensor Core GPU, connected by NVIDIA’s NVLink-C2C interconnect. According to NVIDIA, this link lets the CPU and GPU communicate seven times faster than a conventional PCIe connection while using about one-fifth of the power.

NVIDIA links all 256 superchips through its NVLink Switch System so that they work together as a single, giant GPU. NVIDIA positions the resulting supercomputer for training the next generation of AI models, including successors to ChatGPT and other large language models. Microsoft, Meta, and Google Cloud are expected to be among the first customers. NVIDIA also plans to build its own supercomputer, named Helios, based on the DGX GH200. Helios will combine four DGX GH200 systems, for a total of 1,024 GH200 superchips and a claimed 4 exaflops of AI performance.

Comparisons between the DGX GH200 and existing supercomputers should, however, be made with care. NVIDIA’s 1-exaflop figure is measured in FP8, a low-precision 8-bit floating-point format used for AI workloads. Supercomputers, by contrast, are normally ranked by their double-precision (FP64) performance. Measured in FP64, Helios would deliver only about 36 petaflops, or 0.036 exaflops.
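To show how far apart the two headline numbers are, here is a short Python sketch that redoes the arithmetic. It uses only the figures quoted in this article: four DGX GH200 systems of 256 superchips each, 1 exaflop (FP8) per system, and roughly 36 petaflops for Helios in FP64. The constant names are illustrative. The 36-petaflop value is the approximate figure quoted here, not a benchmark result.

```python
# Unit conversion: 1 exaflop = 1,000 petaflops.
PFLOPS_PER_EFLOPS = 1_000

# Figures quoted in the article (names are illustrative).
SUPERCHIPS_PER_DGX_GH200 = 256
DGX_GH200_FP8_EFLOPS = 1.0   # NVIDIA's headline figure, measured in FP8
HELIOS_SYSTEMS = 4
HELIOS_FP64_PFLOPS = 36.0    # approximate FP64 figure quoted for Helios


def helios_summary():
    """Return Helios's superchip count, FP8 and FP64 totals (in exaflops), and their ratio."""
    superchips = HELIOS_SYSTEMS * SUPERCHIPS_PER_DGX_GH200
    fp8_eflops = HELIOS_SYSTEMS * DGX_GH200_FP8_EFLOPS
    fp64_eflops = HELIOS_FP64_PFLOPS / PFLOPS_PER_EFLOPS
    ratio = fp8_eflops / fp64_eflops
    return superchips, fp8_eflops, fp64_eflops, ratio


superchips, fp8, fp64, ratio = helios_summary()
print(f"Helios: {superchips} superchips")
print(f"FP8:  {fp8:.1f} exaflops")
print(f"FP64: {fp64:.3f} exaflops ({HELIOS_FP64_PFLOPS:.0f} petaflops)")
print(f"FP8 headline is about {ratio:.0f}x the FP64 figure")
```

Running it gives 1,024 superchips, 4.0 exaflops in FP8, 0.036 exaflops in FP64, and a ratio of about 111. The ratio only shows the size of the gap between the two published numbers. FP8 and FP64 measure different kinds of arithmetic, so it does not mean Helios is 111 times "slower" at any particular task.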

Nevertheless, for AI workloads, Helios and the DGX GH200 represent a major jump in capability. NVIDIA expects them to cut the time needed to train large AI models from months to weeks. Faster training could speed up the arrival of more capable AI systems and, in turn, bring substantial changes to the job market, pushing workers to adapt and upgrade their skills to stay competitive.

NVIDIA’s ongoing work in AI hardware marks a major leap in the power of computer systems. As these supercomputers come online, AI will continue to shape our future, and keeping pace with the rapid advance of the technology will only become harder.