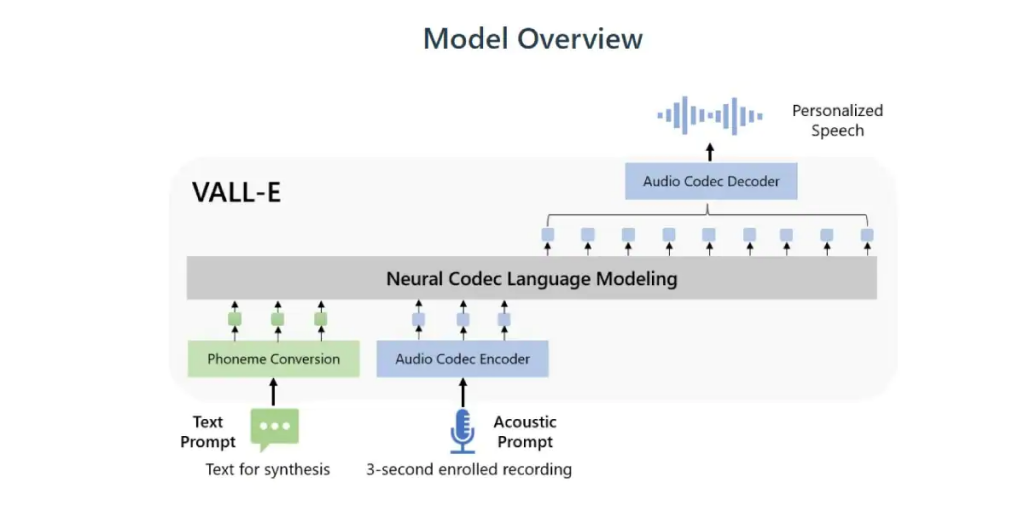

On Thursday, Microsoft researchers unveiled VALL-E, a text-to-speech AI model that can closely replicate a person’s voice from a three-second audio sample.

The VALL-E language model was trained on 60,000 hours of English speech from 7,000 distinct speakers so that it can synthesize “high-quality personalized speech” for any speaker it has not encountered before.

Once the system has a recording of a person’s voice, it can generate speech that sounds like that person saying any given text. It can even mimic the original speaker’s emotional tone and acoustic surroundings.

“Experiment results show that VALL-E significantly outperforms the state-of-the-art zero-shot text-to-speech synthesis (TTS) system in terms of speech naturalness and speaker similarity,” a paper describing the system stated.

“In addition, we find VALL-E could preserve the speaker’s emotion and acoustic environment of the acoustic prompt in synthesis.”

Potential applications include producing whole audiobooks in an author’s voice from a single sample recording, adding natural language voiceovers to videos, and replacing an actor’s dialogue in a film when the original recording is damaged.

According to Microsoft, the VALL-E software used to generate the fake speech is not currently available for public use due to “potential risks in abuse of the software, such as spoofing voice identification or impersonating a specific speaker.”

To reduce these risks, Microsoft said it would develop VALL-E in accordance with its Responsible AI Principles and explore methods for detecting synthetic speech.

Microsoft trained VALL-E on public domain voice recordings, primarily LibriVox audiobooks. The speakers whose voices were mimicked took part in the study voluntarily.

“When the model is generalized to unseen speakers, relevant components should be accompanied by speech editing models, including the protocol to ensure that the speaker agrees to execute the modification and the system to detect the edited speech,” Microsoft researchers said in a statement.