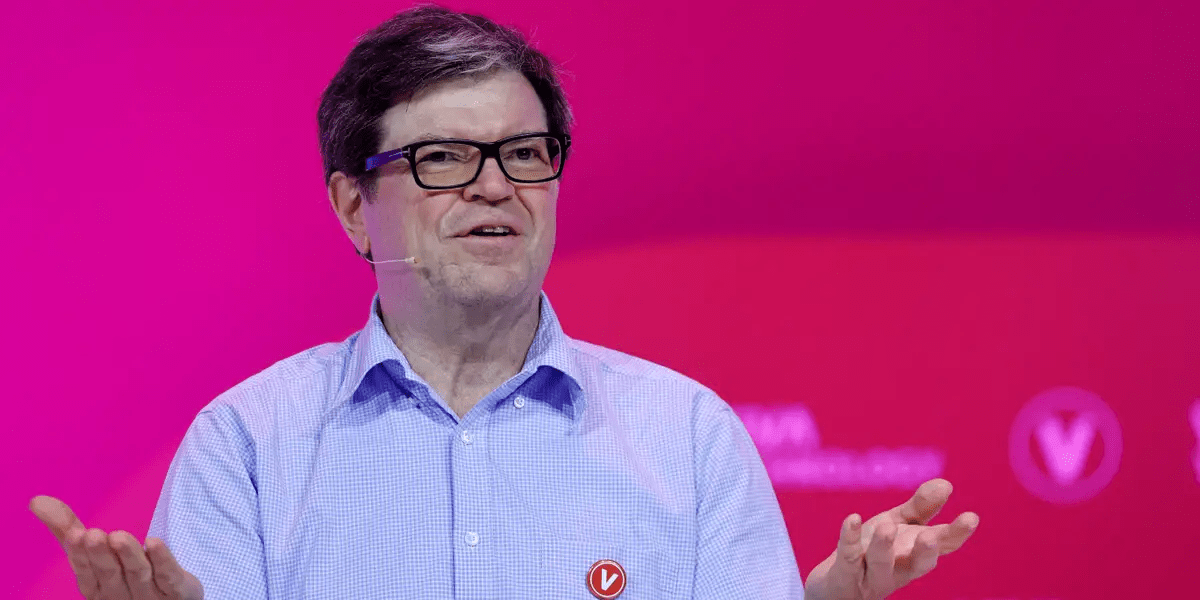

In a recent interview, Meta AI chief Yann LeCun expressed confidence that terrorists and criminals won’t be able to exploit open-source AI systems to wreak havoc on the world. LeCun argued that the sheer magnitude of resources required for such an endeavor makes it highly implausible.

According to LeCun, executing such a nefarious plan would demand access to 2,000 Graphics Processing Units (GPUs) in a location undetectable by authorities, substantial funding, and a team of highly skilled individuals capable of carrying out the task. In his view, these requirements are substantial barriers that would prevent malicious actors from taking control of open-source AI systems.

LeCun extended his skepticism even to wealthy states, asserting that they too would likely face insurmountable challenges in attempting to control open-source AI. He noted that even a technologically advanced nation like China might encounter difficulties due to existing embargoes, such as the US export bans on AI chips.

Meta has been a fervent advocate for an open-source approach to AI development, releasing Llama 2, a predominantly open-source AI model, in July. The company strengthened its commitment to open innovation by forming an alliance with IBM on December 5, with the shared goal of supporting open science and innovation in AI. This strategy distinguishes Meta from competitors like OpenAI and Google, which have embraced closed models.

LeCun’s dismissive stance on AI as an existential threat echoes his previous statements. In June, he labeled claims of AI taking over the world as “preposterously ridiculous,” emphasizing that such concerns are projections of human nature onto machines.

While Meta’s approach to open-source AI development has received both support and skepticism from the tech community, Yann LeCun remains steadfast in his belief that the formidable barriers in place make it unlikely that terrorists or criminals could exploit open-source AI systems on a global scale.