A team of researchers from Cambridge University’s Department of Computer Science and Technology has created a robot that can copy human emotions, a step toward what is often seen as the final frontier of artificial intelligence (AI) research and development (R&D). The robot, named Charles, can scan and interpret a range of expressions on a person’s face. The process takes only a few seconds and starts with a camera capturing images of the person’s face.

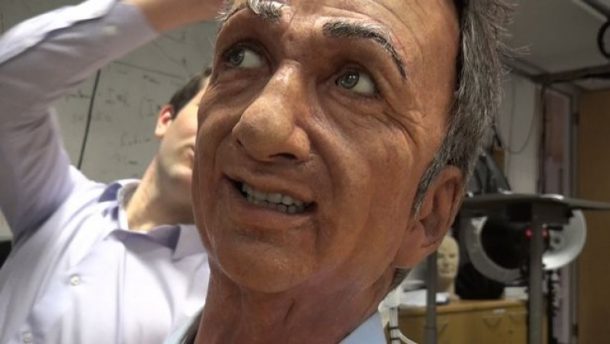

Once the images are captured, the data is transferred to a computer that analyzes the facial expressions. Charles then closely mimics the subject’s expressions. The robot looks like a very friendly creation from a Hollywood special effects studio: it has a large, expressive humanoid face and eyes, thanks to the high-quality prosthetics that went into its design.

Cambridge University Professor Peter Robinson explained the thinking behind the robot: “We’ve been interested in seeing if we can give computers the ability to understand social signals, to understand facial expressions, the tone of voice, body posture and gesture. We thought it would also be interesting to see if the computer system, the machine, could actually exhibit those same characteristics, and see if people engage with it more because it is showing the sort of responses in its facial expressions that a person would show. So we had Charles made.”

The researchers built Charles as a tool for studying how the public perceives robots and robotics. Robinson said, “The more interesting question that this work has promoted is the social and theological understanding of robots that people have. Why do [we], when we talk of robots, always think about things that look like humans, rather than abstract machines, and why are they usually malicious?”

Charles can project a wide range of emotions, including shock, fear, and anger. It can also capture subtle and complex expressions such as arrogance and grumpiness, and it detects the intended emotions without any prompting. The work also shows that emotional-robot R&D still faces the larger task of creating a platform that understands not only the emotions people show on the surface but also those hidden deep within. For now, however, we are content with the capabilities and potential of Charles.