Artificial Intelligence (AI) has revolutionized how we live and work, from smartphones to autonomous vehicles. However, while current AI systems can perform complex tasks, they are limited in comprehending the world as humans do. Artificial General Intelligence (AGI), a machine intelligence that can understand and learn any intellectual task a human can, is the next step in AI’s evolution.

In this context, the recent comments by Demis Hassabis, CEO of Google’s DeepMind, that AGI might be developed within a decade have sparked considerable interest and debate in the technology industry. However, some experts are worried that AGI could pose a severe threat to society if not controlled and guided by human values.

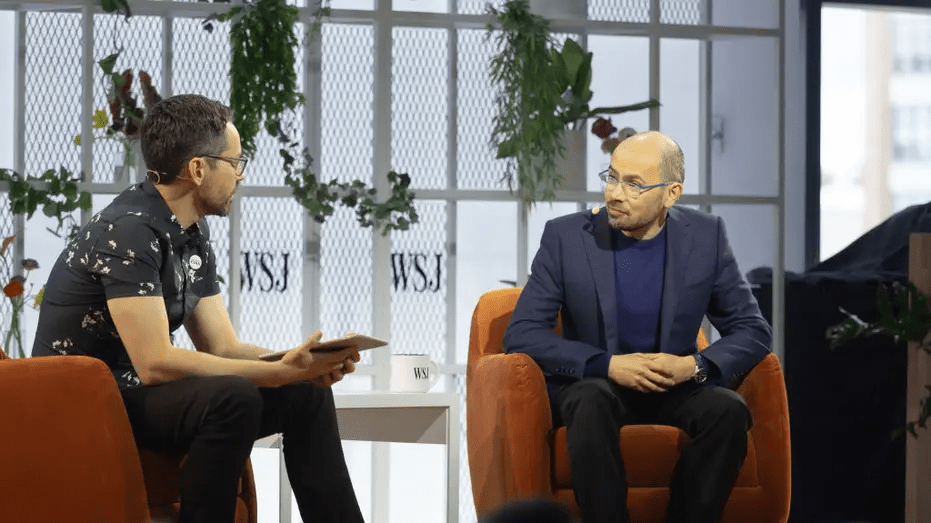

Hassabis made the remarks while speaking at a conference organized by the Wall Street Journal. He believes that AGI advancement might accelerate, and that we could be just a few years away from developing competent, very general systems.

Hassabis described the progress of the last few years as phenomenal and said there is no reason to believe it will slow down.

In contrast to generative AI, which focuses on producing text or images based on specific prompts, AGI would in principle understand what it says and does, reducing the likelihood of incorrect or ambiguous statements. Even so, almost 58% of AI professionals in a study conducted by Stanford University consider AGI an “important concern,” and 36% said it may result in a “nuclear-level catastrophe.”

Elon Musk and Apple co-founder Steve Wozniak were among those who signed an open letter calling for a six-month moratorium on developing advanced AI, so that more attention could be given to ethics research.

Despite these concerns, Hassabis emphasized that DeepMind’s AGI research would not endanger society anytime soon. The company’s current goal is to incorporate AI into more products, a shift he suggested would be as revolutionary as the first iPhone.

Hassabis also stated that Google would create AGI responsibly and apply the scientific method to “do carefully controlled experiments to understand what the underlying system does.”

DeepMind, which Google purchased in 2014, has already released over 200 million protein structure predictions, covering nearly every protein known at the time, in a free-to-access database. In addition, the company created technologies that reduced the energy used to cool Google’s data centers by up to 40%, as well as AI capable of identifying complicated eye disorders.

AlphaFold, an AI system developed by DeepMind, predicted the structure of nearly every protein encoded by the human genome, which has far-reaching implications for studying illness and therapeutics.

The development of AGI has the potential to change the world as we know it, but it also comes with significant ethical concerns. While experts predict that AGI may be developed in the coming years, it is essential that the technology industry addresses these concerns and ensures that human values guide AGI. Transparency, accountability, and ethical standards are paramount in developing AGI to mitigate the potential risks associated with this technology.

Therefore, it is imperative that stakeholders from various fields, including technology, academia, and government, work together to establish guidelines for the development and deployment of AGI. Only by addressing these concerns can we ensure that AGI benefits humanity while minimizing its potential negative impact.