In a bid to address concerns about the misuse of AI-generated content, Google is embedding watermarks in audio created with Lyria, the AI music model developed by its DeepMind lab. The watermark, called SynthID, acts as an identifier that lets a track be recognized as AI-generated after the fact.

The move fits a broader industry trend of building safeguards against the harms of generative AI. The growing volume of AI-generated content, including music, has raised concerns about misinformation, copyright infringement, and other malicious uses. President Joe Biden’s executive order on artificial intelligence specifically calls for government-led standards for watermarking AI-generated content, emphasizing accountability and traceability.

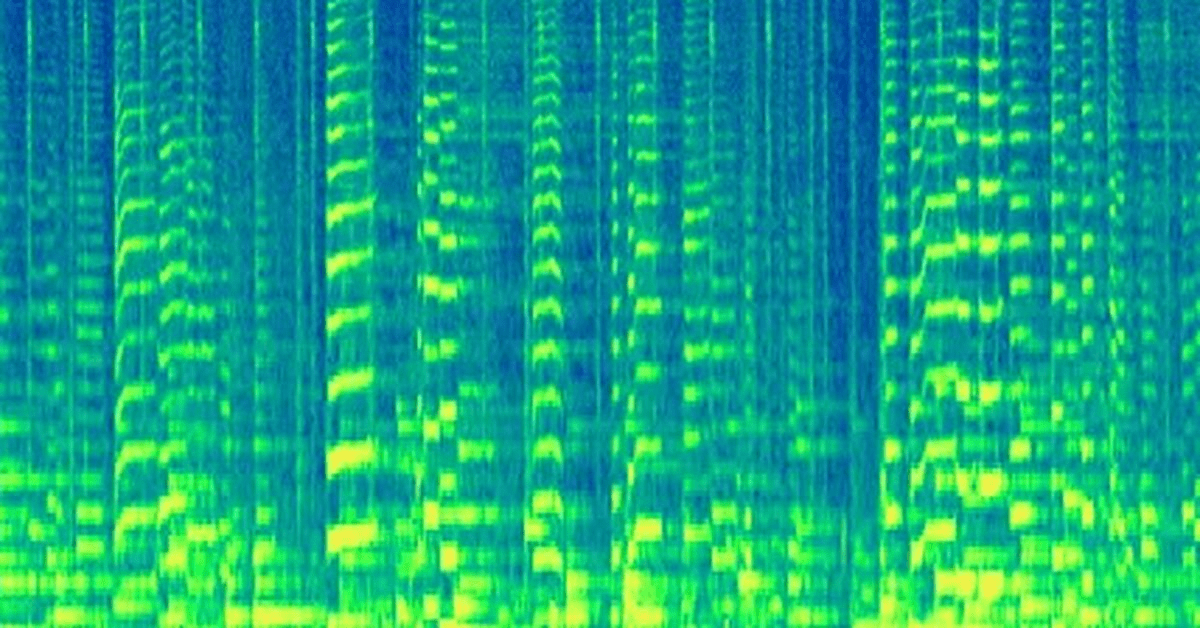

According to DeepMind, SynthID’s audio implementation works by converting the audio waveform into a two-dimensional visualization (a spectrogram) that shows how the spectrum of frequencies in a sound evolves over time. DeepMind says this approach differs from existing methods, offering a new way to watermark AI-generated audio.
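A spectrogram like the one described above is commonly computed with a short-time Fourier transform (STFT): the waveform is cut into short overlapping frames, and each frame is converted into its frequency content. The sketch below illustrates only that generic transform, assuming NumPy; it is not DeepMind’s implementation, and the function name `spectrogram`, the frame size, and the hop length are illustrative choices.

```python
import numpy as np

def spectrogram(signal, frame_size=512, hop=256):
    """Magnitude spectrogram via a basic short-time Fourier transform (STFT).

    Returns an array of shape (time_frames, frequency_bins).
    """
    if len(signal) < frame_size:
        raise ValueError("signal is shorter than one frame")
    window = np.hanning(frame_size)  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(signal) - frame_size) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_size] * window
        for i in range(n_frames)
    ])
    # Real FFT of each frame: one row per time step, one column per frequency bin
    return np.abs(np.fft.rfft(frames, axis=1))

# Illustrative input: one second of a 1 kHz sine tone sampled at 16 kHz
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)

spec = spectrogram(tone)
print(spec.shape)  # (61, 257)

# The strongest frequency bin, averaged over time, should sit at 1 kHz
peak_bin = spec.mean(axis=0).argmax()
print(peak_bin * sample_rate / 512)  # 1000.0
```

Each row of the result is a moment in time and each column a frequency band, which is the time-frequency picture the article refers to. The article does not describe how SynthID modifies this representation to carry its watermark.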

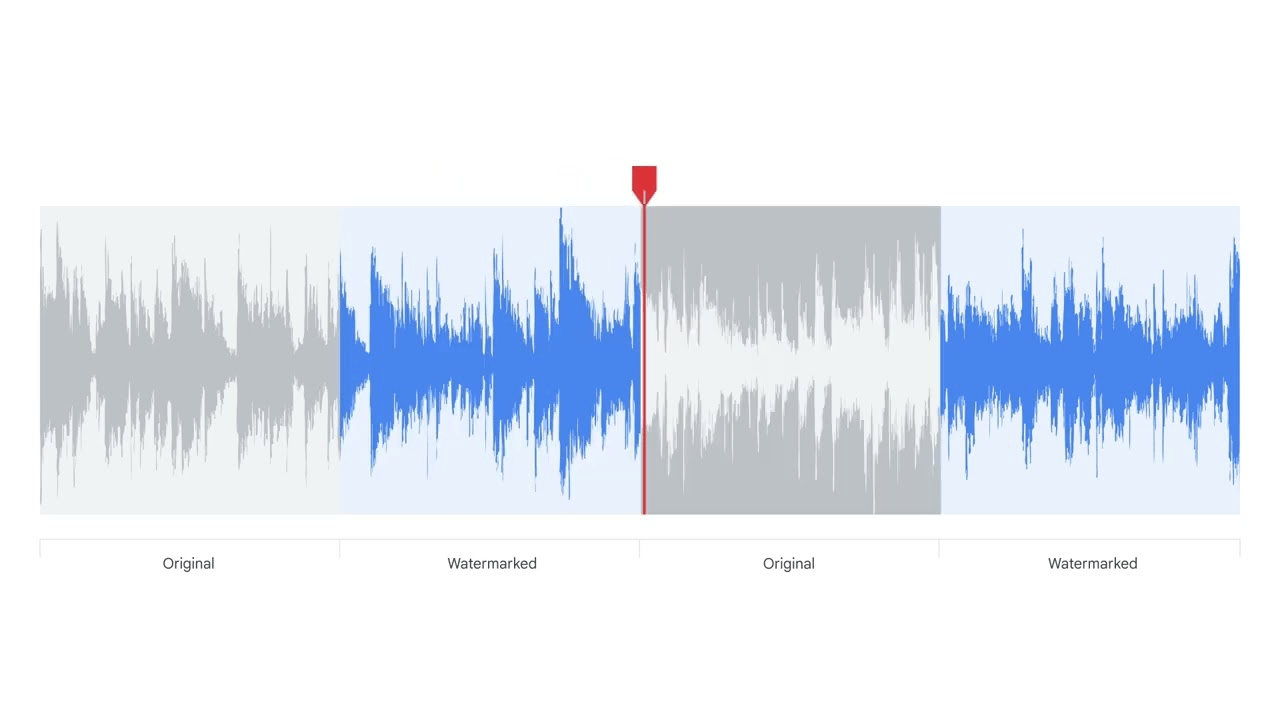

DeepMind also says the watermark does not degrade the listening experience: it is inaudible to the human ear and remains detectable even after common alterations such as compression, speed changes, or added noise.

Bringing SynthID to AI-generated music follows the recent beta release of SynthID for images created by Imagen on Google Cloud’s Vertex AI. The image watermark is designed to survive edits such as cropping or resizing, although DeepMind acknowledges that it may not withstand extreme image manipulation.

Watermarking tools like SynthID are a promising safeguard against the misuse of AI-generated content, but current techniques can still be defeated. As generative AI evolves, these safeguards will need continued refinement to keep up with new challenges to content authenticity and integrity.