Google has announced its latest artificial intelligence (AI) supercomputer, which the company claims is faster and more efficient than the competing systems offered by Nvidia. While Nvidia dominates the market for AI model training and deployment, with a share of over 90%, Google has been designing and deploying its own AI chips, called Tensor Processing Units, or TPUs, since 2016.

Google has been a major AI pioneer, with its employees developing some of the most important advancements in the field over the last decade. However, the company has been racing to release products and prove it hasn’t fallen behind in commercializing its inventions, which CNBC previously reported as a “code red” situation.

Training AI models such as Google’s Bard or OpenAI’s ChatGPT requires many computers and hundreds or thousands of chips working together. These computers run around the clock for weeks or even months, which makes the computing power needed for AI expensive.

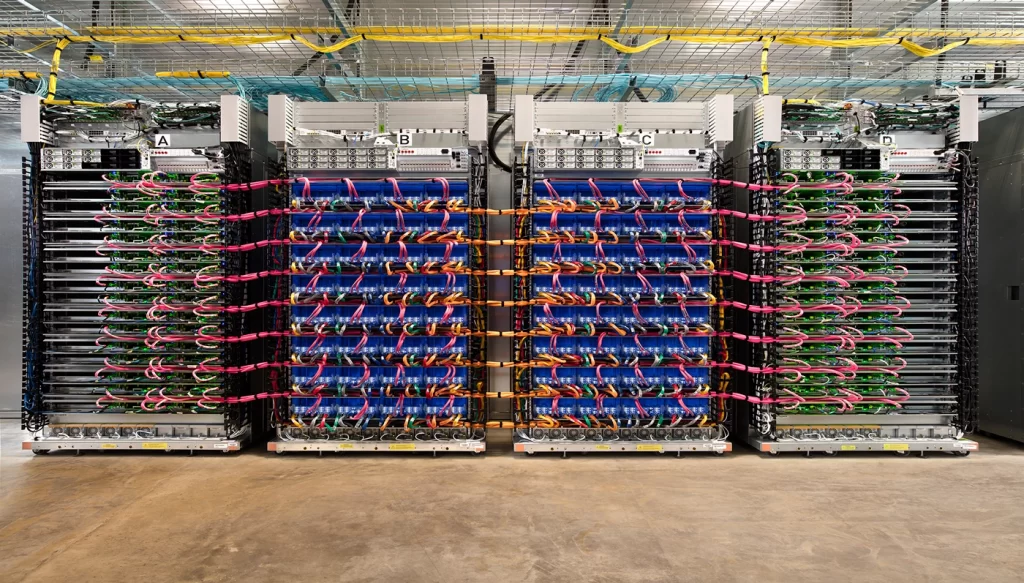

On Tuesday, Google announced that it had built a system with over 4,000 TPUs joined with custom components designed to run and train AI models. Its TPU-based supercomputer, called TPU v4, is “1.2x–1.7x faster and uses 1.3x–1.9x less power than the Nvidia A100,” according to Google researchers.
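A phrase like “1.3x–1.9x less power” is easy to misread, so here is a minimal sketch of the arithmetic. It assumes the figure means the A100's power draw divided by that factor, which is an interpretation and not something the researchers' quote spells out. The function name `relative_power` is hypothetical and used only for illustration.

```python
def relative_power(times_less: float) -> float:
    """Fraction of the baseline's power draw.

    Assumption: "N x less power" is read as baseline power divided by N.
    """
    if times_less <= 0:
        raise ValueError("factor must be positive")
    return 1.0 / times_less


# The two ends of the range quoted by the Google researchers.
for factor in (1.3, 1.9):
    share = relative_power(factor)
    print(f"{factor}x less power -> about {share:.0%} of the A100's draw")
```

Under that reading, the quoted range would put TPU v4 at roughly 53% to 77% of the A100's power draw.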

Google’s TPU results were not compared with Nvidia’s latest AI chip, the H100, because it is more recent and was made with more advanced manufacturing technology, the Google researchers said. Results and rankings from an industrywide AI chip test called MLPerf were released on Wednesday, with Nvidia CEO Jensen Huang claiming that the results for the most recent Nvidia chip, the H100, were significantly faster than the previous generation.

Because the computing power needed for AI is so expensive, many in the industry are focused on developing new chips, components such as optical connections, or software techniques that reduce the amount required. AI’s computing demands are also a boon to cloud providers such as Google, Microsoft and Amazon, which can rent out computer processing by the hour and provide credits or computing time to startups to build relationships.

In conclusion, Google’s latest AI supercomputer represents a major step forward for the company, which is seeking to prove it hasn’t fallen behind in commercializing its AI inventions. However, Nvidia still dominates the market for AI model training and deployment, and its CEO says its latest chip, the H100, is significantly faster than the previous generation. Meanwhile, the cost of AI computing is driving development of new chips and software techniques that reduce the computing power required, and cloud providers are benefiting from the growing demand for AI processing.