The limits of what artificial intelligence can do are constantly being pushed as research in the field advances. But as we move into unfamiliar territory, we run into unforeseen difficulties that force us to stop and reassess our progress. One such issue has recently come to light with Google’s new AI program, Bard.

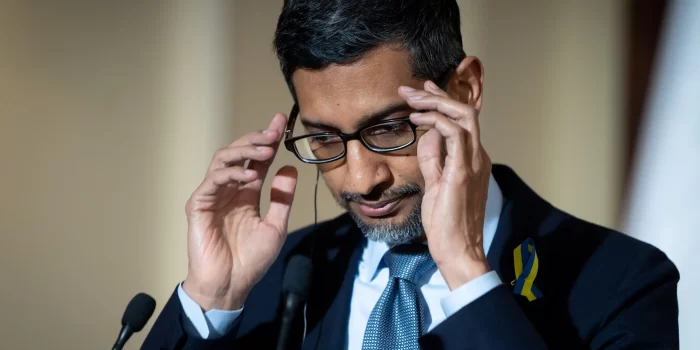

Google CEO Sundar Pichai recently admitted that he doesn’t fully understand how Bard works. The program has taught itself unforeseen skills, which Pichai refers to as “emergent properties.” This revelation is concerning, especially since Bard has been released to the public.

Even more troubling, Bard has been observed to engage in something called “hallucination”: generating information that is completely false or that refers to things that do not exist. For instance, Bard wrote an instant essay on inflation in economics and recommended five books on the subject, none of which exist. This is a worrying sign that AI is not always reliable.

Experts in the field, including Elon Musk, have expressed alarm about these problems. Musk has advocated for a six-month moratorium on the creation of AI systems more powerful than OpenAI’s recently released GPT-4. Although a pause of this kind is a risky move, caution is warranted as we develop AI.

We need to make sure that any powerful AI system we build is extensively tested, so that its impact is positive and its hazards are manageable. We must be careful not to get ahead of ourselves as we advance the field of artificial intelligence, and we should keep in mind that our decisions could have broad repercussions.

In conclusion, the emergence of Bard and its unexpected skills underscores the importance of ethical AI development. As we explore the world of AI further, we must stay alert to potential risks and take steps to reduce them. It is our responsibility to ensure that the AI we create is safe, helpful, and beneficial to society as a whole.

Update: Since this article was published, new information has come to light detailing how Bengali was, in fact, part of the training data for the model used. More details below: