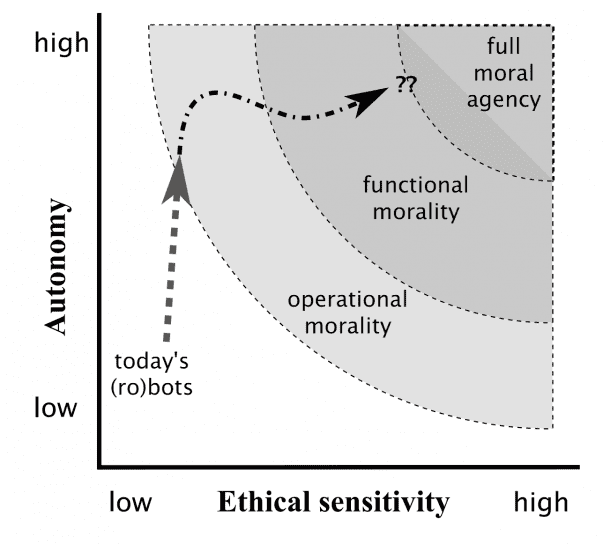

About 72 years ago, sci-fi writer Isaac Asimov introduced the Three Laws of Robotics, which were meant to keep robots in check on matters of morality and ethics. However, a look at today’s robots makes it evident that we are still far from being able to instil these laws, or any laws for that matter, into a robot’s code. To work towards giving robots a sense of morality, the US Department of Defense is starting to look into the matter, so that robots can make sense of what is going on around them and react to it with a conscience.

A team has been assembled with researchers from Tufts University, Brown University and the Rensselaer Polytechnic Institute, working along with the DoD. The key idea is to impart this sense of morality to robots and come up with a system that is fail-proof, or at least safe from the glitches that we’ve seen in movies and read about in novels. If the team is successful, an AI will be able to assess a situation autonomously and make ethical decisions that override the instructions it was given. For instance, a medical robot instructed to visit a supply store would have enough moral sense to judge that a person in need on the road must be helped, and would be able to override the instruction to go to the store.

Currently, the team led by Prof. Matthias Scheutz is working on breaking down the human moral sense into its basic components, which should allow the team to build a systematic model of how human moral reasoning works. The next step would be to build this framework into a robot’s code, making the robot capable of overriding instructions when new evidence is presented to it and of justifying that decision to its owner.

According to Prof. Scheutz: ‘Moral competence can be roughly thought about as the ability to learn, reason with, act upon, and talk about the laws and societal conventions on which humans tend to agree. The question is whether machines – or any other artificial system, for that matter – can emulate and exercise these abilities.’

In the version under development, the principal idea is to employ two systems: a preliminary ethical check, followed by the more complex system that the team is building.
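To make the two-stage idea concrete, here is a minimal Python sketch using the medical robot example from earlier. It is only an illustration under loud assumptions: the article does not describe the team’s actual design, so every name here (`preliminary_check`, `deliberate`, `decide`, the `person_in_need` observation) is hypothetical, and the "complex" stage is reduced to a single hard-coded rule.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str         # what the robot will actually do
    justification: str  # explanation it can give to its owner


# Hypothetical set of observations that count as morally relevant.
MORALLY_RELEVANT = {"person_in_need"}


def preliminary_check(observations: set) -> bool:
    """Fast first pass: True if nothing morally relevant was observed."""
    return not (observations & MORALLY_RELEVANT)


def deliberate(instruction: str, observations: set) -> Decision:
    """Stand-in for the more complex reasoning stage (assumed, not the real system)."""
    if "person_in_need" in observations:
        return Decision(
            action="help_person",
            justification=f"Overrode '{instruction}': a person in need was observed.",
        )
    return Decision(instruction, "No overriding concern found after deliberation.")


def decide(instruction: str, observations: set) -> Decision:
    """Run the quick check first; escalate to deliberation only when it is flagged."""
    if preliminary_check(observations):
        return Decision(instruction, "Preliminary check passed.")
    return deliberate(instruction, observations)
```

Calling `decide("go_to_supply_store", {"person_in_need"})` in this sketch returns a decision to help the person, along with a justification the robot could report back, while a call with no flagged observations simply carries out the instruction.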

Although this would be a great technological advance, let’s hope that we won’t be facing any ‘i-Robot’ kind of apocalypse.

Yes, that’s true.

“let’s hope that we won’t be facing any ‘i-Robot’ kind of apocalypse.”

Let’s hope you read my book one day instead of just watching the movie.