A human player has utterly crushed a top-ranked AI system in the board game Go, marking an unexpected reversal of the 2016 computer victory that was regarded as a turning point in the evolution of artificial intelligence.

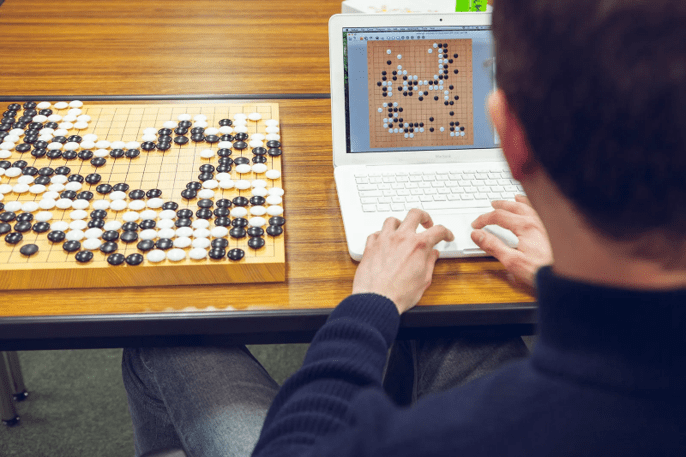

American player Kellin Pelrine defeated the computer by exploiting a previously unknown weakness that had been identified by another computer. However, he received no direct computer help during the head-to-head contest, in which he won 14 out of 15 games.

The win exposed a weakness in the top Go-playing programs that is shared by most of today’s widely used AI systems, including the ChatGPT chatbot developed by San Francisco-based OpenAI.

The tactics that restored human dominance on the Go board were recommended by a computer program that had examined the AI systems in search of weaknesses. Pelrine then mercilessly executed the suggested plan.

“It was surprisingly easy for us to exploit this system,” said Adam Gleave, chief executive of FAR AI, the Californian research firm that designed the program. To uncover a “blind spot” that a human player may exploit, the software played over 1 million games against KataGo, one of the best Go-playing computers.

According to Pelrine, the winning strategy revealed by the program “is not extremely trivial, but it’s not super-difficult” for a human to master, so a player of intermediate skill could use it to beat the computers. He also used the method to defeat another leading Go system, Leela Zero.

According to Stuart Russell, a computer science professor at the University of California, Berkeley, the discovery of a flaw in some of the most advanced Go-playing machines points to a fundamental weakness in current deep-learning systems.

He said the algorithms can “understand” only the specific situations they have been exposed to in the past, and are unable to generalize in the way that humans find easy.

“It shows once again we’ve been far too hasty to ascribe superhuman levels of intelligence to machines,” Russell said.

According to the researchers, the exact reason the Go-playing systems failed is not yet known. One possible explanation, Gleave said, is that the tactic Pelrine exploited is rarely played, so the AI systems had not been trained on enough similar games to recognize their vulnerability.

He added that flaws are common when AI systems are subjected to the same kind of “adversarial attack” that was used against the Go-playing computers.

Despite this, he said, “we’re seeing extensive AI systems deployed at scale with little verification.”