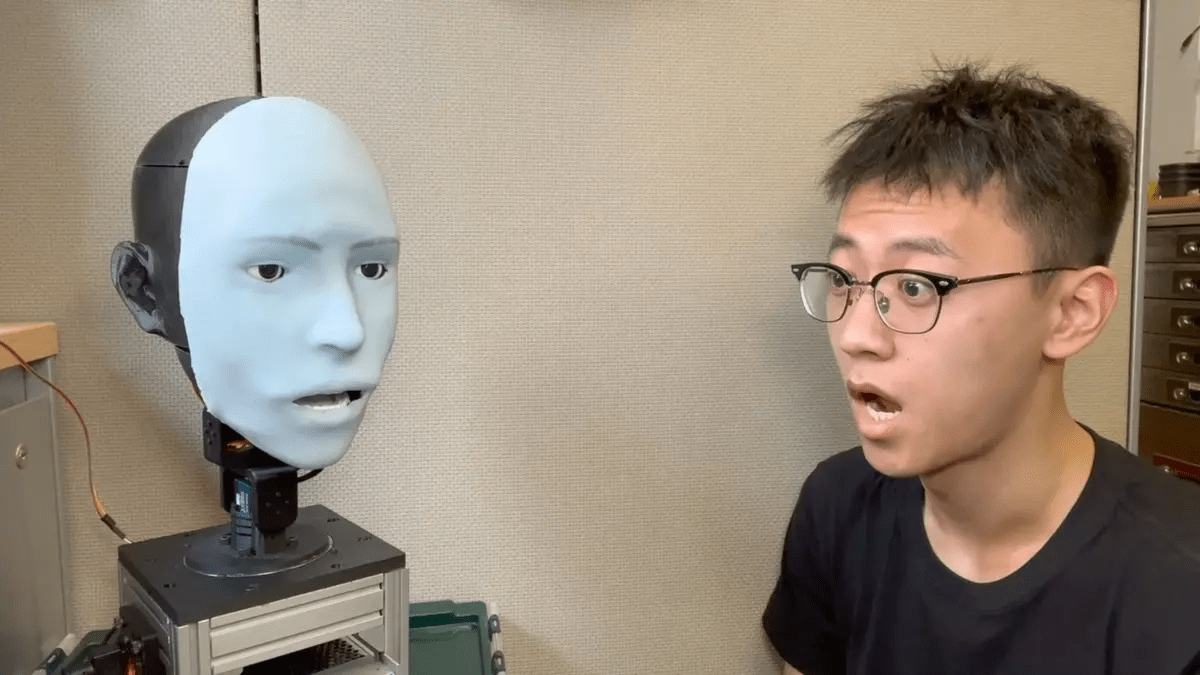

A smile is one of the most charming gestures there is. In robotics, anticipating facial expressions is highly important for human-robot interaction. Most robots can only recognize and react to human emotions after the human has finished expressing them. Many robotic faces can mimic human facial expressions during an interaction, but their expressions frequently lag behind.

Aiming to advance such robotic technologies and make them more user-friendly, a team of researchers from Columbia University has created a robot named Emo. This anthropomorphic facial robot can predict a human smile about 839 milliseconds in advance and animate its own face into a smile at the same time as the person. This simultaneous smiling behavior, according to the researchers, is a step toward making physical humanoid robots seem more authentic and natural to people. Large language models have enabled rapid advances in robotic verbal communication, but nonverbal communication has not kept up.

Rather than arising organically, robot facial expressions are typically meticulously preprogrammed, calibrated, and planned. Recent advances in face robotics have concentrated on expanding and enhancing dynamic facial displays of emotion, a step toward more humanlike interactions.

Researchers highlight that complex hardware and software designs are needed for face animatronics. While previous research has produced remarkable face robots that resemble humans, these primarily rely on preprogrammed facial movements. “The challenge is twofold: First, the actuation of an expressively versatile robotic face is mechanically challenging. A second challenge is knowing what expression to generate so that the robot appears natural, timely, and genuine,” said the study.

Eva, the group’s previous robot platform, was one of the first to be able to self-model its facial expressions. However, to achieve more believable social interaction, the robot needed to be able to anticipate its conversation partner’s facial expressions in addition to its own.

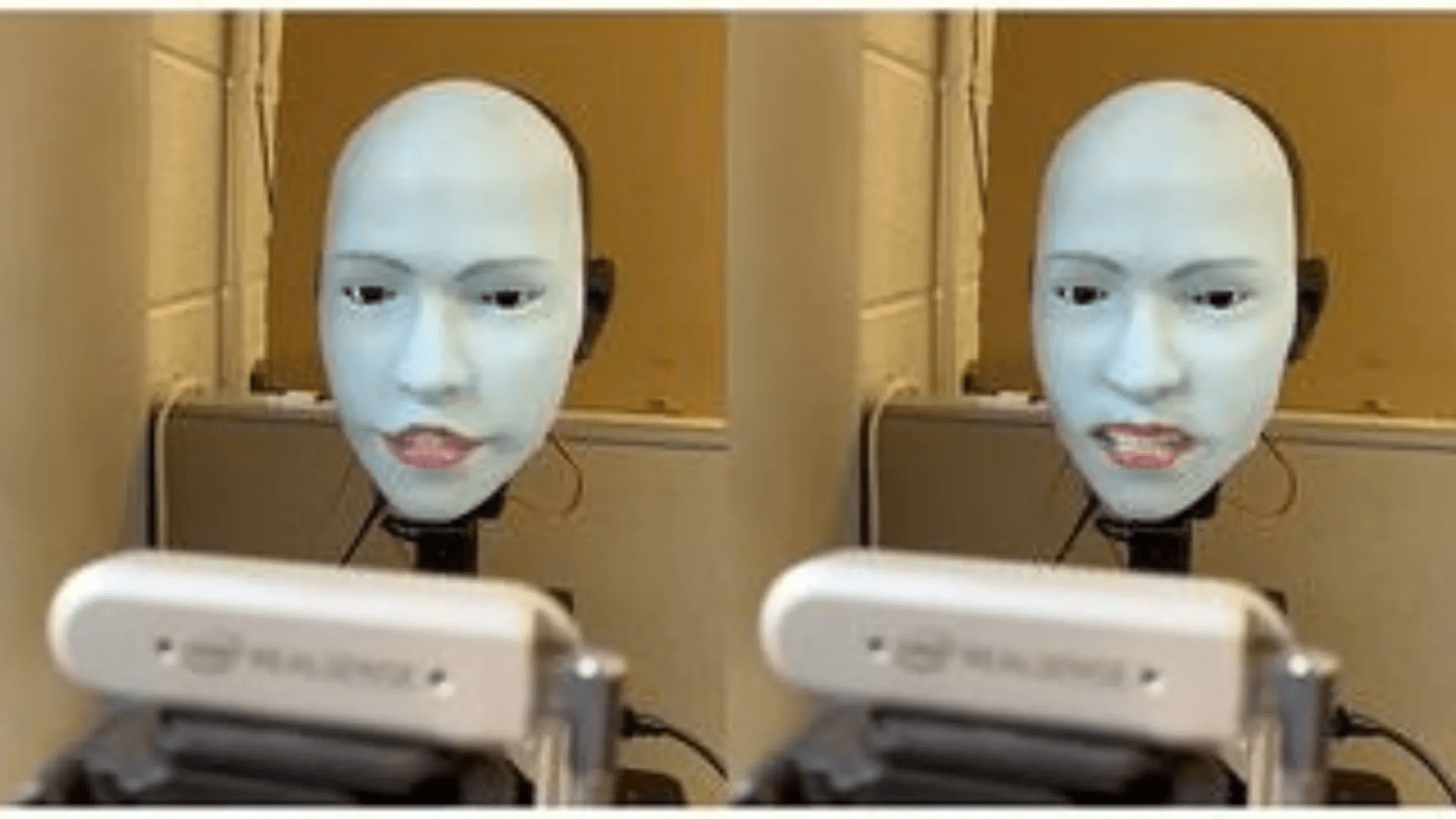

That led the team to develop Emo, an advanced anthropomorphic facial robot that surpasses its predecessor, Eva. Its 26 actuators allow asymmetrical expressions, and direct-attached magnets improve the precision of its movements. In addition, high-resolution cameras embedded in its eyes give it humanlike visual perception, which helps it predict a conversation partner’s facial expressions.

According to researchers, Emo employs a dual neural network framework to predict both its own and the conversant’s facial expressions, enabling real-time coexpression. With 23 facial expression motors and three for neck movement, Emo’s hardware upgrades significantly enhance interaction capabilities, and an upgraded inverse model allows for five times faster motor command generation.

Using mirror-generated facial representations, the first neural network learned to predict the robot’s own facial expressions from the motor commands sent to its hardware. The second network was trained to predict the facial expressions of a human interlocutor during a conversation.
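As a rough illustration of how two such models could be chained, here is a minimal Python sketch. It is not the researchers' implementation: the landmark representation, the frame count, the single random linear layers standing in for trained networks, and the names `predictive_model`, `inverse_model`, and `coexpress` are all assumptions made for this example. Only the figure of 26 motors comes from the reported hardware.

```python
import math
import random

# Hypothetical sizes for illustration; only N_MOTORS reflects the reported hardware.
N_LANDMARKS = 10   # assumed number of 2D facial landmarks per video frame
N_FRAMES = 5       # assumed number of recent frames fed to the predictor
N_MOTORS = 26      # 23 facial motors + 3 neck motors, as reported for Emo

random.seed(0)

def make_linear(n_in, n_out):
    """Return a random linear map standing in for a trained neural network."""
    weights = [[random.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]

    def forward(x):
        return [sum(w * v for w, v in zip(row, x)) for row in weights]

    return forward

# Stand-in for the network that predicts the human's upcoming expression.
predictive_model = make_linear(N_FRAMES * N_LANDMARKS * 2, N_LANDMARKS * 2)
# Stand-in for the inverse model that maps a target expression to motor commands.
inverse_model = make_linear(N_LANDMARKS * 2, N_MOTORS)

def coexpress(recent_frames):
    """Map recent human face frames to normalized motor commands in (0, 1)."""
    flat = [coord for frame in recent_frames for point in frame for coord in point]
    predicted_face = predictive_model(flat)   # anticipated human expression
    raw = inverse_model(predicted_face)       # commands that would reproduce it
    return [1.0 / (1.0 + math.exp(-r)) for r in raw]  # squash to a motor range

# Fake input: N_FRAMES frames of N_LANDMARKS random (x, y) points.
frames = [[(random.random(), random.random()) for _ in range(N_LANDMARKS)]
          for _ in range(N_FRAMES)]
commands = coexpress(frames)
print(len(commands))  # one command per motor
```

The point of the sketch is the ordering: the prediction of the human's expression comes first, and the inverse model then turns that predicted expression into commands for the robot's own face.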

The researchers ran the software on a widely available laptop computer (MacBook Pro 2019, Intel Core i9) and transmitted the resulting motor commands to Emo, which leaves processing resources free for future tasks like speaking, listening, and thinking. The results showed that the robot can anticipate a forthcoming human smile approximately 839 milliseconds before it occurs. Moreover, using a learned inverse kinematic facial self-model, the robot can synchronize its own smile with the human’s in real time. This capability was demonstrated on a robot face with 26 degrees of freedom.

According to the researchers, the results show that the predictive model can accurately predict a wide range of target human facial expressions, and can do so far enough in advance to give the mechanical hardware time to actuate.

“By pinpointing the specific features that facilitate or hinder human user engagement, such feedback could then be used to improve Emo’s social communication skills for both general use and bespoke applications, including cross-cultural interactions, thereby enhancing its utility, accessibility, and marketability,” said Rachael E Jack from the University of Glasgow, in a statement.

The researchers now propose to investigate how the robot’s ability to anticipate facial expressions is interpreted in different cultural contexts.