Deepmind researchers recently announced the release of Transframer, a new general-purpose framework for image modeling and vision applications based on probabilistic frame prediction. This new AI tool unites a variety of tasks, such as image segmentation, view synthesis, and video interpolation.

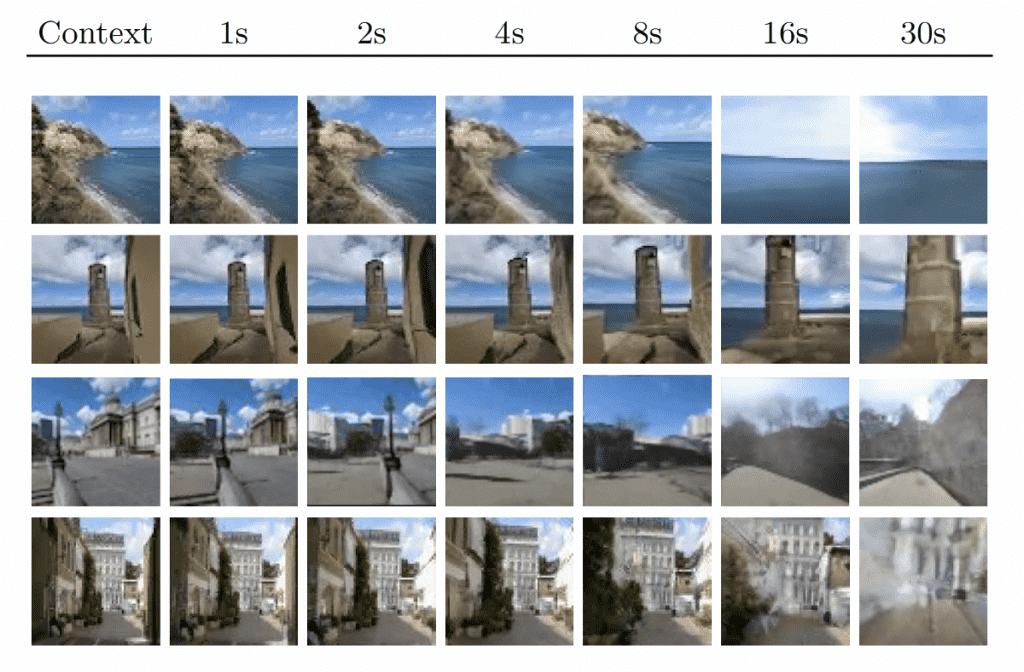

Transframer combines a variety of image modeling and vision tasks and may generate films or image characteristics based on a single image with one or more context frames. It operates on several video generation benchmarks. The study team says it is a cutting-edge model that can build coherent 30-second videos from a single image and is projected to be the strongest and most competitive on a few-shot view synthesis.

The potential for whole video games based on machine learning techniques rather than conventional rendering is explored under this model. It appears capable of using artificial depth perception and perspective to generate what the image would look like if someone moved around it.

Despite the lack of task-specific architectural components, the suggested model also performed well on eight other tasks, including semantic segmentation, picture classification, and optical flow prediction.

Transframer can also be utilized in various applications that involve learning conditional structure from text or a single image, such as video model prediction and generation, novel view synthesis, and multi-task vision.