Researchers at MIT have introduced a notable advance in hardware for artificial intelligence: “analog programmable resistors.” According to the press release, the new devices run about a million times faster than earlier versions of such devices. The study also reports that these analog synapses are about a million times faster than the synapses in the human brain. This is exciting, isn’t it?

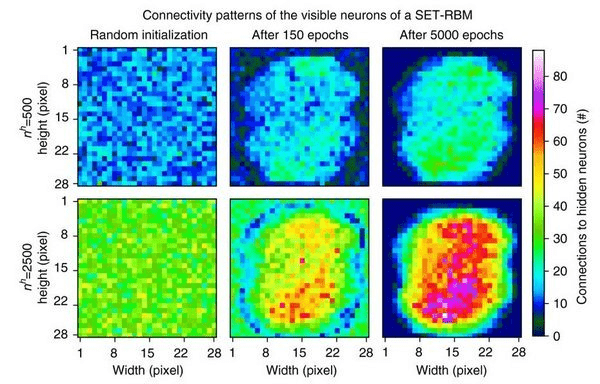

The approach rests on a simple idea: arrange analog resistors in the right configuration so they can be put to work for deep learning specifically. Digital processors build their networks out of transistors, but this system flips the script. It places resistors in precise positions to connect analog synapses and neurons into a network.

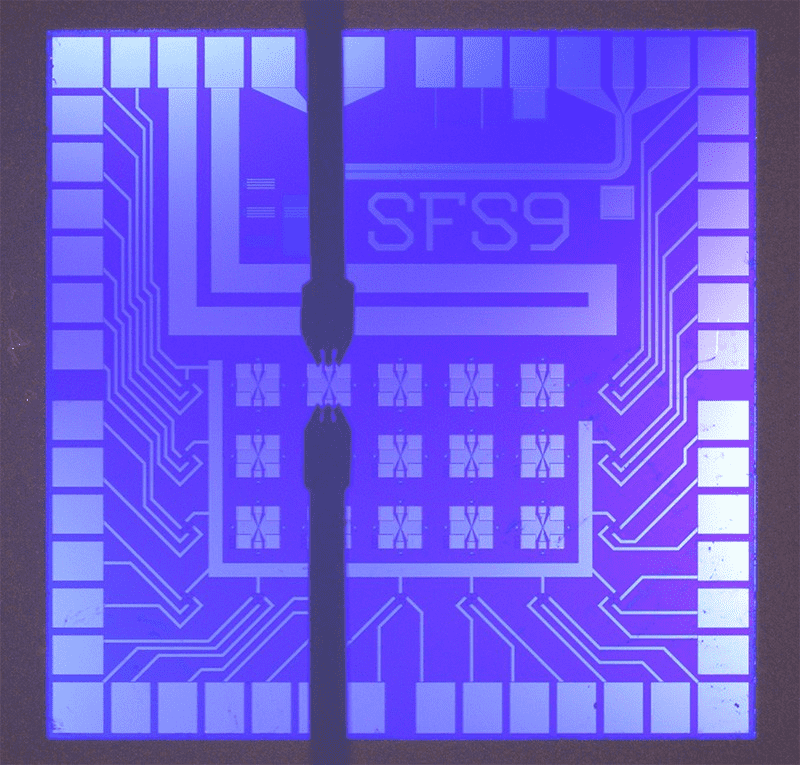

Besides being fast, these analog resistors are also energy-efficient, which is considered the most important milestone in moving neural networks beyond digital hardware. As the senior author, Ju Li, a professor of nuclear science, explained: “The action potential in biological cells rises and falls with a timescale of milliseconds, since the voltage difference of about 0.1 volts is constrained by the stability of water. Here we apply up to ten volts across a special solid glass film of nanoscale thickness that conducts protons, without permanently damaging it.”

He added, “And the stronger the field, the faster the ionic devices.” A standout feature of this work is that the researchers used an inorganic material, phosphosilicate glass (PSG), rather than the organic materials used in earlier devices of this kind. This glass is what allowed the devices to reach nanosecond-scale speeds.

The lead author, Murat Onen, put it this way: “Once you have an analog processor, you will no longer be training networks everyone else is working on. You will be training networks with unprecedented complexities that no one else can afford to, and therefore vastly outperform them all. In other words, this is not a faster car, this is a spacecraft.”