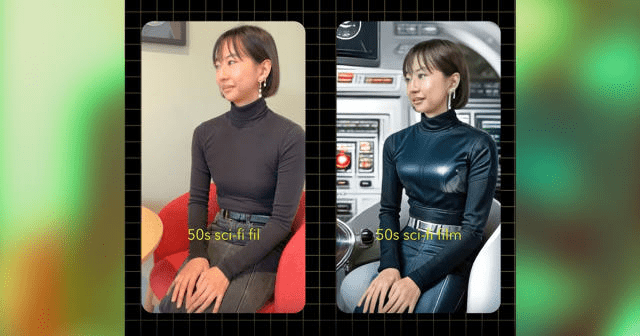

Snapchat filters are about to hit another level. The popular image-based messaging app has unveiled its upcoming AI model, intended to bring a trippy, augmented reality experience to its millions of users with tech that can transform footage from their smartphone cameras into pretty much whatever they want — so long as they’re okay with it looking more than a little wonky. As shown in an announcement demo, Snapchat’s new AI can transport its subjects into the world of a “50s sci-fi film” at the whim of a simple text prompt, and even updates their wardrobes to fit in.

In practice, the results look more like a jerky piece of stop motion than anything approaching a seamless video. But arguably, the real achievement here is not only that this is being rendered in real time, but that it’s being generated on the smartphones themselves, rather than on a remote cloud server.

Snapchat considers these real-time, local generative AI capabilities a “milestone,” and says they were made possible by its team’s “breakthroughs to optimize faster, more performant GenAI techniques.”

The app makers could be onto something: getting power-hungry AI models to run on small, popular devices is something that tech companies have been scrambling to achieve, and there’s perhaps no better way to endear people to this lucrative new possibility than by using it to make them look cooler.

Snapchat has been trying out AI features for at least a year now. In a rocky start, it released a chatbot called “My AI” last April, which pretty much immediately annoyed most of its users. Undeterred, it has since given paid users the option to send entirely AI-generated snaps, and also released a feature for AI-generated selfies called “Dreams.”

Taking those capabilities and applying them to video is a logical but steep progression, and doing it in real time is an even bolder leap. Unsurprisingly, the results are currently less impressive than what’s possible with still images. Coherent video generation is something that AI models continue to struggle with, even without time constraints.

There’s a lot of experimenting to be done, and Snapchat wants users to be part of the process. It will be releasing a new version of its Lens Studio that lets creators make AR Lenses (essentially filters) and even build their own tailor-made AI models to “supercharge” AR creation.

Regular users, meanwhile, will get a taste of these AI-powered AR features through Lenses in the coming months, according to TechCrunch. So prepare for a bunch of really, really weird videos, and perhaps a surge in what’s possible with generative AI on your smartphone.