The simple hand movements that we all take for granted are quite a feat to achieve in robotics. A robotic hand has now been given the ability to give a thumbs-up, scoop objects and pinch, and these motions are driven by the user's brain signals. Yes, the user thinks of a gesture and the robotic hand complies.

Jan Scheuermann, 55, from Pittsburgh, was paralyzed from the neck down in 2003 by a neurodegenerative condition. She signed up for a study conducted by the University of Pittsburgh in 2012. Over the last two years, she has managed to high-five the researchers and eat chocolate by herself, and she can now even give a thumbs-up simply by thinking of it.

After she signed up, she had two quarter-inch electrode grids implanted, each with 96 contact points, covering the area of her brain that controls her right hand and arm movements. The grids were then connected to a computer, creating a brain-machine interface in which the contact points pick up the pulses of electricity fired between neurons.

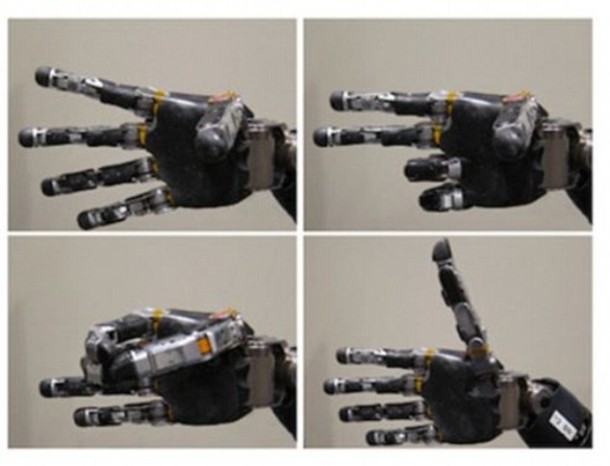

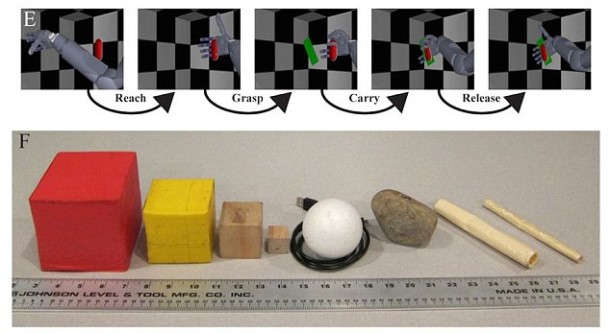

The recorded pulses are then decoded by computer algorithms that identify patterns linked to specific arm movements. A virtual reality program was used to calibrate the link between Ms. Scheuermann’s thoughts and the robotic arm. This training allowed her to make the arm reach for objects and move in different directions, and to flex and rotate the wrist merely by thinking about the action. The latest model is called 10D, while the previous one was known as 7D. The extra dimensions in 10D come from four types of hand movement: finger abduction, thumb extension, scoop and pinch.
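The article does not say which algorithms the researchers used, so the following is only a rough sketch of the general idea: learn a mapping from channel activity to intended movement during a calibration session, then apply it to new activity. It assumes a plain least-squares linear decoder and entirely synthetic data. The channel count (two grids of 96 contact points) and the four hand-shape names come from the text above; the six arm and wrist dimension names, the function names, and the simulated tuning model are hypothetical.

```python
import numpy as np

# Two grids with 96 contact points each, as described in the article.
N_CHANNELS = 2 * 96

# Ten control dimensions. The four hand shapes are named in the article;
# the translation/orientation labels are an assumption for illustration.
DIMS = [
    "move_x", "move_y", "move_z",
    "wrist_roll", "wrist_pitch", "wrist_yaw",
    "finger_abduction", "thumb_extension", "scoop", "pinch",
]


def simulate_session(n_samples, tuning, baseline, rng, noise=0.5):
    """Generate synthetic (activity, intent) pairs; not real neural data."""
    intent = rng.standard_normal((n_samples, len(DIMS)))
    activity = baseline + intent @ tuning.T
    activity += noise * rng.standard_normal((n_samples, N_CHANNELS))
    return activity, intent


def add_bias(activity):
    """Append a constant column so the decoder can absorb baseline activity."""
    return np.hstack([activity, np.ones((activity.shape[0], 1))])


def fit_decoder(activity, intent):
    """Least-squares fit of weights mapping channel activity to intent."""
    weights, *_ = np.linalg.lstsq(add_bias(activity), intent, rcond=None)
    return weights


def decode(activity, weights):
    """Apply the fitted linear map to new channel activity."""
    return add_bias(activity) @ weights


rng = np.random.default_rng(0)
tuning = rng.standard_normal((N_CHANNELS, len(DIMS)))  # hypothetical tuning
baseline = rng.uniform(5.0, 20.0, N_CHANNELS)          # hypothetical baseline

# "Calibration": fit on one synthetic session, evaluate on another.
train_activity, train_intent = simulate_session(2000, tuning, baseline, rng)
weights = fit_decoder(train_activity, train_intent)

test_activity, test_intent = simulate_session(500, tuning, baseline, rng)
decoded = decode(test_activity, weights)

corr = [
    float(np.corrcoef(decoded[:, i], test_intent[:, i])[0, 1])
    for i in range(len(DIMS))
]
for name, r in zip(DIMS, corr):
    print(f"{name:>17}: correlation {r:.3f}")
```

In this toy setup the calibration step plays the role the VR training plays in the article: it supplies examples of activity paired with a known intended movement. A real system would face noise, drift and non-linear effects that this sketch ignores.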

The developers hope that their findings can help build a better future for people with disabilities. Dr. Jennifer Collinger, co-author of the study, said, “10D control allowed Jan to interact with objects in different ways, just as people use their hands to pick up objects depending on their shapes and what they intend to do with them. We hope to repeat this level of control with additional participants and to make the system more robust, so that people who might benefit from it will one day be able to use brain-machine interfaces in daily life. We also plan to study whether the incorporation of sensory feedback, such as the touch and feel of an object, can improve neuroprosthetic control.”

Ms. Scheuermann happily remarked, “This has been a fantastic, thrilling, wild ride, and I am so glad I’ve done this. This study has enriched my life, given me new friends and co-workers, helped me contribute to research and taken my breath away. For the rest of my life, I will thank God every day for getting to be part of this team.”
