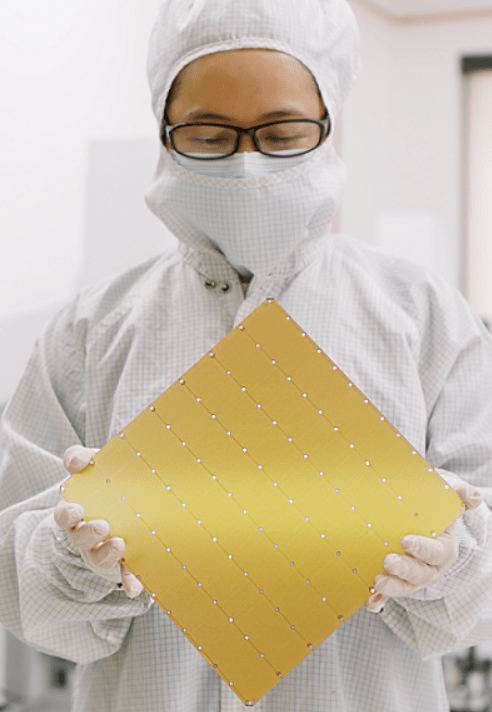

Cerebras Systems, a California-based company, has unveiled its latest artificial intelligence (AI) chip, the Wafer Scale Engine 3 (WSE-3). The chip packs four trillion transistors, marking a substantial leap forward in performance.

The WSE-3 offers double the speed of its predecessor, the WSE-2, which previously held the record for the fastest chip. According to a recent press release, the new chip enables systems to fine-tune models with 70 billion parameters in just one day.

Models such as GPT have seized the spotlight in the fast-moving field of AI thanks to their remarkable capabilities. Nevertheless, the industry widely recognizes that AI models are still in their infancy and need further development before they can substantially disrupt the market. Consequently, demand for training on larger datasets is growing, which in turn calls for more advanced infrastructure.

Chip giant Nvidia has dominated the market with powerful offerings such as the H200, which has 80 billion transistors and is used for AI model training. Cerebras aims to outperform Nvidia’s offering by a significant margin with the WSE-3, for which it claims 57 times the performance.

The CS-3, Cerebras’ AI supercomputer built around the WSE-3, uses a 5 nm process and features 900,000 cores optimized for AI data processing. The chip carries 44 GB of on-chip SRAM, and the system can store models of up to 24 trillion parameters in a single logical memory space, simplifying training workflows and improving developer productivity.

The CS-3’s external memory can scale up to 1.2 petabytes, enabling training of models ten times larger than GPT-4 or Gemini. Cerebras claims that training a one-trillion-parameter model on the CS-3 is as straightforward as training a one-billion-parameter model on GPUs.
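To put these memory figures side by side, here is a rough back-of-the-envelope sketch. It assumes 2 bytes per parameter (16-bit weights) and decimal units; the article states neither, and the helper `weight_bytes` is purely illustrative. It counts the weights alone, ignoring optimizer state and activations.

```python
# Back-of-the-envelope memory footprint for model weights.
# Assumption: decimal units (1 GB = 1e9 bytes, 1 PB = 1e15 bytes).
GB = 10**9
TB = 10**12

ON_CHIP_SRAM_BYTES = 44 * GB         # 44 GB, from the article
EXTERNAL_MEMORY_BYTES = 1200 * TB    # 1.2 PB, from the article
PARAMS = 24 * 10**12                 # 24 trillion parameters, from the article


def weight_bytes(num_params: int, bytes_per_param: int) -> int:
    """Bytes needed for the weights alone (hypothetical helper)."""
    return num_params * bytes_per_param


# Assumption: 2 bytes per parameter; the article does not state a precision.
fp16_bytes = weight_bytes(PARAMS, 2)

print(f"24T params at 2 bytes each: {fp16_bytes / TB:.0f} TB")
print(f"Multiple of on-chip SRAM:   {fp16_bytes / ON_CHIP_SRAM_BYTES:.0f}x")
print(f"External bytes per param:   {EXTERNAL_MEMORY_BYTES / PARAMS:.0f}")
```

Under these assumptions the weights of a 24-trillion-parameter model come to about 48 TB, roughly a thousand times the 44 GB of on-chip SRAM, so a model of that size would have to live in the external memory. At the full 1.2 petabytes, that memory works out to about 50 bytes per parameter.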

The CS-3 is adaptable to enterprise or hyperscale needs, offering configurations that can fine-tune 70-billion-parameter models in a day or, when set up in a 2,048-system configuration, train the 70-billion-parameter Llama model from scratch in just a day.

Cerebras plans to deploy the WSE-3 at esteemed institutions like the Argonne National Laboratory and the Mayo Clinic to enhance research capabilities. Additionally, in partnership with G42, Cerebras is constructing the Condor Galaxy-3 (CG-3), set to be one of the world’s largest AI supercomputers. Comprising 64 CS-3 units, the CG-3 will deliver eight exaFLOPS of AI computing power.
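The CG-3 figures imply a per-system throughput, which the short sketch below works out. The extrapolation to the 2,048-system configuration mentioned earlier assumes perfectly linear scaling, which is an illustrative assumption rather than a figure from the article.

```python
# Rough per-system compute implied by the article's Condor Galaxy-3 figures.
EXA = 10**18
PETA = 10**15

CG3_TOTAL_FLOPS = 8 * EXA     # eight exaFLOPS, from the article
CG3_SYSTEMS = 64              # CS-3 units in the CG-3, from the article
MAX_CLUSTER_SYSTEMS = 2048    # largest configuration mentioned in the article

# Throughput of a single CS-3 implied by the CG-3 totals.
per_system_flops = CG3_TOTAL_FLOPS // CG3_SYSTEMS

# Assumption: throughput scales linearly with system count (an idealization).
max_cluster_flops = per_system_flops * MAX_CLUSTER_SYSTEMS

print(f"Per CS-3: {per_system_flops / PETA:.0f} petaFLOPS")
print(f"2,048 systems, linear scaling: {max_cluster_flops / EXA:.0f} exaFLOPS")
```

This gives 125 petaFLOPS per CS-3 and, under the linear-scaling assumption, 256 exaFLOPS for a 2,048-system cluster. Real clusters rarely scale perfectly, so the second number is best read as an upper bound.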

Kiril Evtimov, Group CTO of G42, emphasized the significance of the partnership with Cerebras. “Our strategic partnership with Cerebras has been instrumental in propelling innovation at G42, and will contribute to the acceleration of the AI revolution on a global scale,” he said.