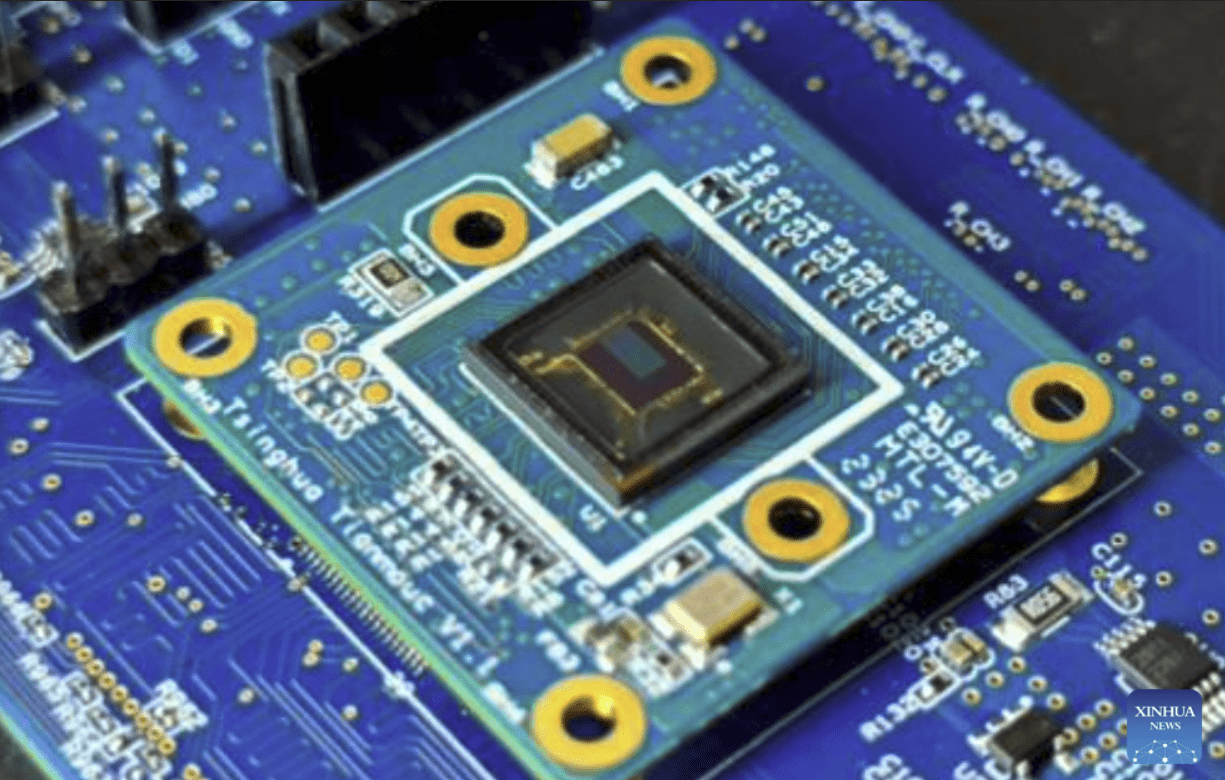

Scientists from Tsinghua University in China have unveiled Tianmouc, which they describe as the world's first brain-inspired vision chip, capable of capturing up to 10,000 frames per second. According to the team, the chip overcomes the performance bottlenecks of conventional visual sensing and copes with a range of extreme scenarios, helping keep systems stable and safe.

Developed by the Center for Brain-Inspired Computing Research (CBICR) at Tsinghua University, Tianmouc gives machines human-eye-like perception while cutting bandwidth usage by 90%. The chip is built on a new complementary sensing paradigm inspired by the human visual system. It decomposes visual information into primitive-based representations and then combines them into two complementary pathways: one for precise cognition and one for rapid response.

To implement this paradigm, the researchers built Tianmouc around a hybrid pixel array and a parallel, heterogeneous readout architecture. On top of the chip, the team developed high-performance software and algorithms and validated them on a vehicle-mounted perception platform operating in open environments. Even in extreme scenarios, the system delivered low-latency, high-performance real-time perception, demonstrating its potential for intelligent unmanned systems.

Experts have hailed Tianmouc as a major breakthrough in visual sensing chips, one that supports the wider shift toward intelligent technologies and advances autonomous driving and embodied intelligence. Together with brain-inspired computing chips that CBICR has already developed, such as "Tianjic," Tianmouc expands the brain-inspired intelligence ecosystem, which the team sees as a step toward artificial general intelligence.

“We demonstrate the integration of a Tianmouc chip into an autonomous driving system, showcasing its abilities to enable accurate, fast and robust perception, even in challenging corner cases on open roads,” the researchers said.

According to the researchers, the chip's primitive-based complementary sensing paradigm overcomes inherent limitations in developing vision systems for real-world applications and departs from traditional machine vision strategies.

“This is a perception chip, not a computational one, based on our original technical route,” said project leader Shi Luping, a professor at the university's Center for Brain-Inspired Computing Research, according to SCMP.

“Firstly, it balances speed and dynamic performance in vision chips and introduces a novel computational method that diverges from existing machine vision strategies. Secondly, this approach mimics the human visual system’s dual pathway, enabling decision-making without complete clarity.”

Shi's remarks highlight what sets Tianmouc apart: it is designed for perception rather than computation, and its dual-pathway design lets a system make decisions even when a scene is not fully clear. The chip could find uses in autonomous driving and defense and may enable new applications, moving machines a step closer to perceiving the world the way humans do.