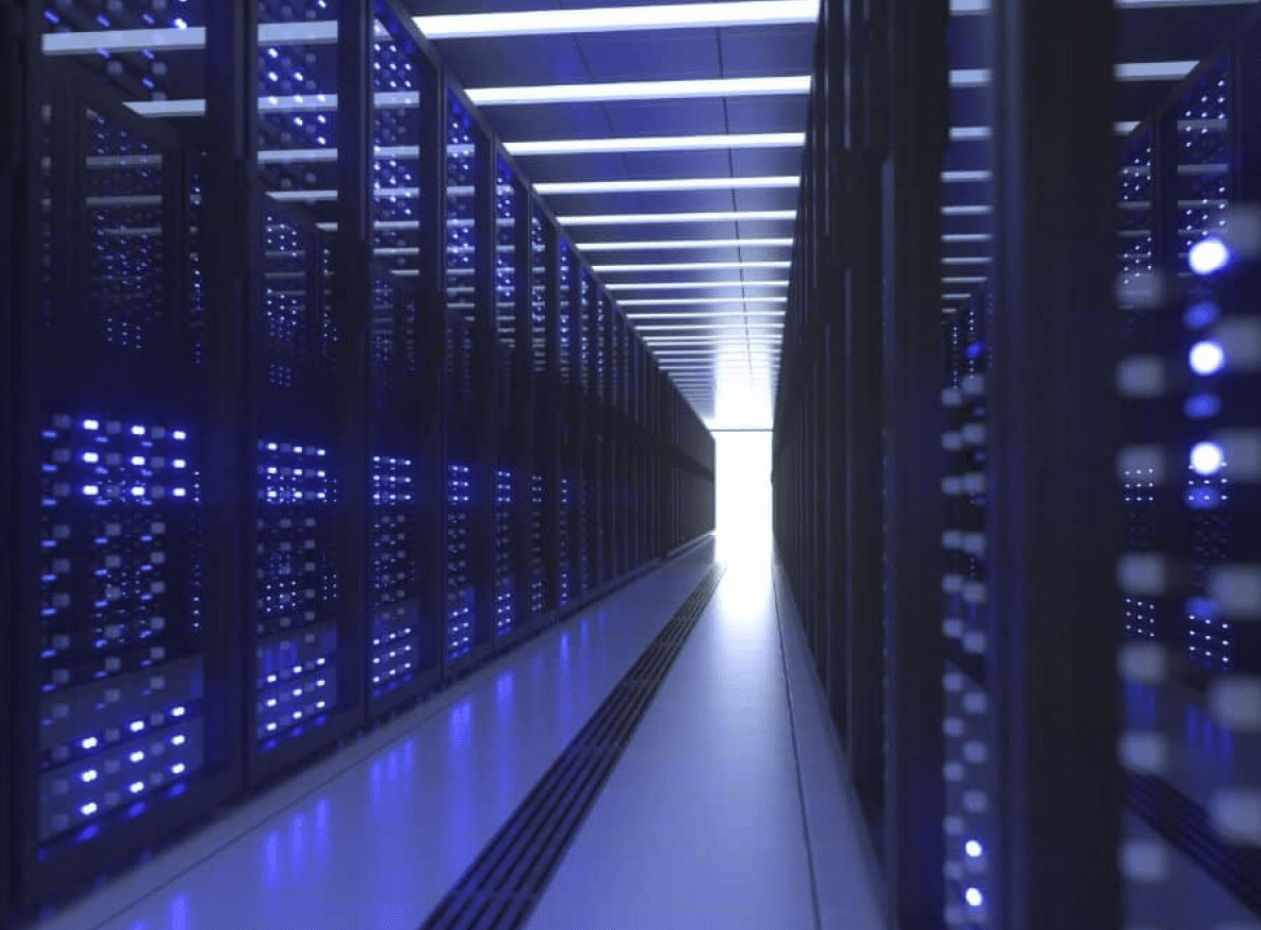

The rapid growth of generative AI is putting substantial pressure on the US power grid and water supplies, mainly because of the energy and cooling requirements of the data centers that support AI development. As AI technologies advance, new data centers are being built across the country to deliver the substantial computing power needed to train and run complex machine learning models. These facilities consume large amounts of electricity and water, raising concerns about whether the grid can keep up with rising demand.

Although AI has the potential to advance sustainability in many sectors, its own environmental footprint will remain a concern unless sustainability is built into its design. To address these challenges, companies are working to improve energy efficiency and investing in renewable energy sources.

For instance, major tech companies such as Google, Microsoft, Oracle, and Amazon are increasingly using Arm's low-power processors, which can cut data center energy use by as much as 15 percent. Nvidia's Grace Blackwell AI chip also uses Arm-based CPUs, and Nvidia reports that it can run generative AI models on 25 times less power than the previous generation.

"If we don't start thinking about this power problem differently now, we're never going to see this dream we have," Dipti Vachani, head of automotive at Arm, told CNBC.

However, it is far from clear that these measures will be enough. Generating a single AI image can use as much energy as fully charging a smartphone, and a single ChatGPT query uses almost ten times as much energy as a Google search. Recent reports highlight the environmental toll: despite claims of improved efficiency, Google's greenhouse gas emissions rose by nearly 50 percent between 2019 and 2023, partly because of data center energy consumption. Similarly, Microsoft's emissions rose by nearly 30 percent between 2020 and 2024, primarily as a result of data center energy consumption.

“Saving every last bit of power is going to be a fundamentally different design than when you’re trying to maximize the performance,” Vachani said.

Despite ongoing efforts to make AI more sustainable and energy-efficient, the technology's current trajectory raises serious concerns about its environmental impact, particularly the water and power consumed by data centers.