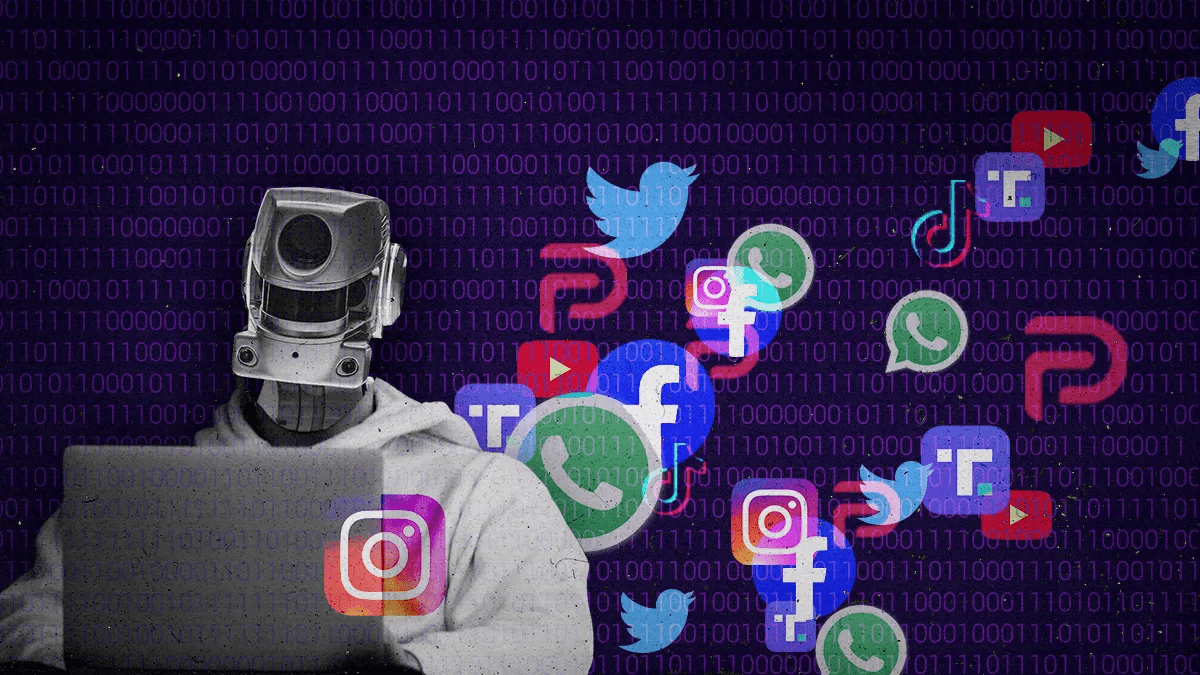

The Pentagon is exploring the use of generative AI to craft sophisticated fake personas on the internet, raising concerns about the effect this could have on an already complex landscape of online misinformation.

According to a report by The Intercept, the Joint Special Operations Command (JSOC), a clandestine counterterrorism division of the U.S. Department of Defense (DoD), is seeking AI technologies to create convincing online identities for intelligence and surveillance purposes.

This initiative, while geared toward improving digital intelligence operations, contradicts the U.S. government’s persistent warnings about the dangers of deepfakes and AI-generated misinformation. Experts fear the Pentagon’s interest in such tools could accelerate the global adoption of AI-driven deception, making it harder to distinguish fact from fiction in an already muddled information ecosystem.

In a procurement document, JSOC detailed its interest in AI capable of generating convincing online personas, complete with realistic facial and background imagery, video, and audio layers. These fake personas would be used by Special Operations Forces (SOF) to infiltrate online forums and social media platforms to gather intelligence.

The program’s goal is to allow SOF agents to create AI-generated identities indistinguishable from real people, giving them a digital advantage for intelligence-gathering operations. While intelligence agencies have long monitored online platforms, the incorporation of AI would take this surveillance to a new level, potentially enhancing their ability to manipulate and influence discussions undetected.

The use of AI-generated content has long been a contentious issue. As deepfake technology becomes more sophisticated, there are increasing concerns that it could exacerbate the spread of misinformation. The Pentagon’s interest in deepfakes for digital surveillance, which first surfaced in 2023 through a procurement document from the DoD’s Special Operations Command (SOCOM), suggests that U.S. military agencies are considering large-scale AI use to enhance influence operations and other intelligence efforts.

This approach has drawn warnings from experts such as Heidy Khlaaf, chief AI scientist at the AI Now Institute. “This will only embolden other militaries or adversaries to do the same,” Khlaaf said, emphasizing that it could lead to a world where distinguishing truth from fiction becomes increasingly challenging, with serious geopolitical implications.

The U.S. government’s interest in using AI for intelligence and influence campaigns may set a troubling precedent, leading other nations to develop similar capabilities for surveillance or even disinformation efforts. Experts fear that the deployment of such technologies could further erode trust in online information, creating an environment where deception is rampant and truth becomes more elusive than ever.