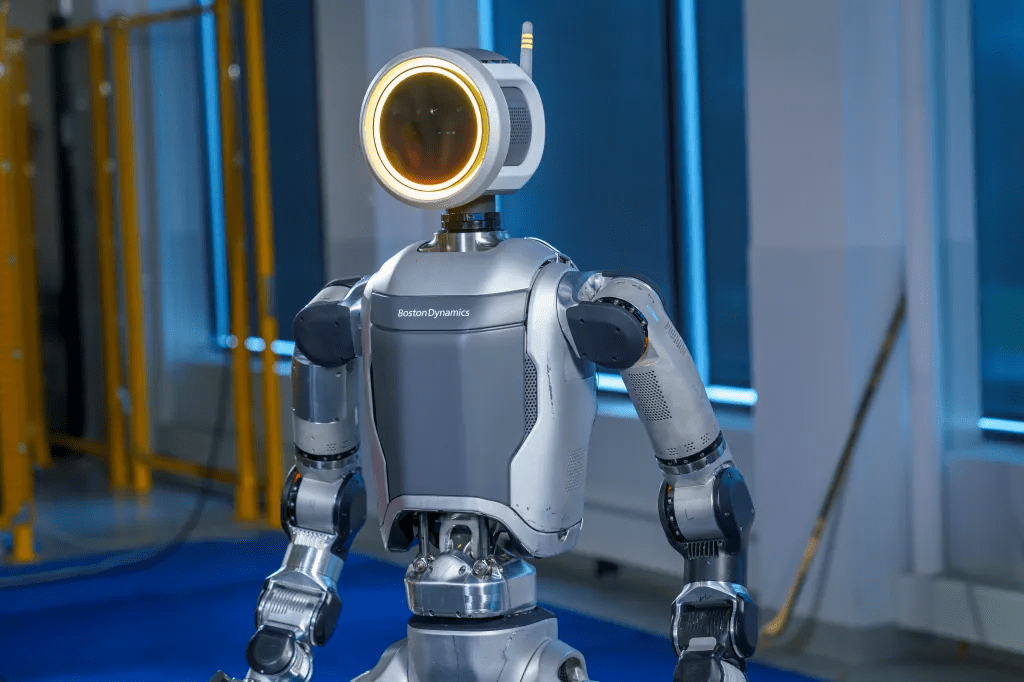

The Atlas robot from Boston Dynamics has taken a significant leap forward: it now performs complex tasks fully autonomously. The all-electric humanoid uses a machine learning (ML) vision model to detect and interpret its surroundings, and it generates all of its movements on its own, in real time. Unlike robots that depend on pre-programmed commands or human guidance, Atlas adapts to changes in its environment, such as obstacles in its path or objects that move, by combining data from vision, force, and proprioceptive sensors.

In a newly released video, Atlas navigates and carries out a series of pick-up and put-down tasks entirely on its own. Its human-like agility is astonishing and, frankly, a bit unnerving. Swivel joints let its torso, neck, and limbs rotate in ways reminiscent of horror-movie contortions, making Atlas as captivating as it is eerie. Released just before Halloween, the video has spooked viewers and drawn comparisons to scenes from *The Exorcist*, particularly when Atlas twists and repositions itself with uncanny smoothness.

Atlas’s design is more than a party trick, however; its unusual range of motion is functional rather than theatrical. Its ability to adapt autonomously points toward a future in which humanoid robots could take on a wide range of the physical tasks humans perform today. If Boston Dynamics keeps refining the robot’s vision and decision-making through machine learning and physical testing, Atlas could reshape labor as we know it.

Boston Dynamics has historically emphasized research over commercial production, but Atlas may be edging closer to real-world applications. With companies like Tesla, Agility Robotics, and Figure accelerating their efforts to produce humanoids at scale, Atlas might soon move from the research lab into industry, potentially transforming productivity worldwide and driving broad societal shifts.