In a traffic accident, human beings make decisions in the heat of the moment. They may swerve into an oncoming truck to save the child in front of them, or they may simply brake and hope the child is spared, because swerving would cause a much bigger disaster. Many of these decisions cannot be explained by reason, as we are human beings and not calculating machines. We do what comes naturally to us, even if it goes against our ethical principles.

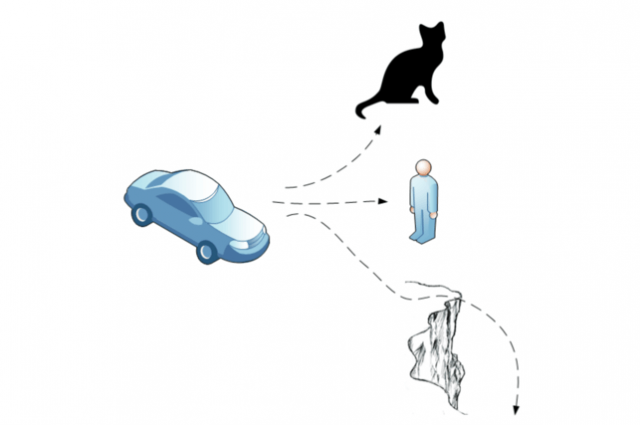

But once completely autonomous cars hit the roads, they will be programmed to handle such ethical dilemmas in a calculating manner, and their choices will have to be logically defensible. They will calculate the number of possible deaths, both animal and human, and act accordingly. If the people inside the car are taken into account too, there might be a situation where the car takes evasive action to save more lives around it and gets you killed instead. Would you still want to ride in that driverless car if it was programmed to do so?
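To make the idea concrete, here is a minimal sketch of what "calculating the number of possible deaths and acting accordingly" could look like. Everything in it is an assumption made for illustration: the `Maneuver` class, the `cost` and `choose_maneuver` functions, and the `occupant_weight` and `animal_weight` parameters are hypothetical and do not describe how any real car is programmed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Maneuver:
    """One possible action and its estimated outcome (all values hypothetical)."""
    name: str
    bystander_deaths: float  # expected deaths among people outside the car
    occupant_deaths: float   # expected deaths among people inside the car
    animal_deaths: float     # expected animal deaths


def cost(m: Maneuver, occupant_weight: float = 1.0, animal_weight: float = 0.1) -> float:
    """Weighted casualty count; a lower value means a 'better' outcome under this rule."""
    return (
        m.bystander_deaths
        + occupant_weight * m.occupant_deaths
        + animal_weight * m.animal_deaths
    )


def choose_maneuver(options, occupant_weight: float = 1.0, animal_weight: float = 0.1) -> Maneuver:
    """Pick the maneuver with the lowest weighted casualty count."""
    return min(options, key=lambda m: cost(m, occupant_weight, animal_weight))


options = [
    Maneuver("brake", bystander_deaths=3, occupant_deaths=0, animal_deaths=0),
    Maneuver("swerve", bystander_deaths=0, occupant_deaths=1, animal_deaths=0),
]

# Every life counts equally: the car sacrifices its occupant to save three bystanders.
impartial = choose_maneuver(options, occupant_weight=1.0)

# The occupant's life is weighted five times higher: the car brakes instead.
protective = choose_maneuver(options, occupant_weight=5.0)

print(impartial.name, protective.name)
```

The single `occupant_weight` number is the whole dilemma in miniature: set it to 1 and the car may kill you to save others, set it higher and it protects you at their expense.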

This algorithmic ethical dilemma is one of the main talking points around the new self-driving cars. It is not a question of whether they will hit the roads, but when. Cars like Tesla's Model S can already park themselves, change lanes, and cruise at a set speed. It wouldn't be far-fetched to assume that these cars will go completely autonomous in a few years. While statistics suggest that these automated cars will make road travel much safer, the ethical dilemmas associated with them are quite complex to resolve.

A recent study at Toulouse University concluded that there is no exact right or wrong answer to these questions. Everybody's life is precious, and making the same mistakes that humans make every day does not become an ethical dilemma just because the driver is automated. But as we enter the era of intelligent machines that are connected to each other, they may start using cold logic to explain their actions and motives. It means that sci-fi classics like Terminator, with their vision of machine logic, are all but set to become reality within a few years, especially when it comes to cars. Cloud computing and high-speed connectivity mean that data streaming in from around the world will allow intelligent systems to act for the greater good, even if that means killing one human to save three dogs, which we would never do. Electronics giant Intel claims that there will be over 152 million interconnected cars with driverless technology on the roads by 2020. They will stream 11 petabytes of data every year, enough to fill roughly 40,000 250 GB hard disks. In other words, Intel says that this system of cars can be almost as powerful as a single human brain and arrive at critical decisions with ease.
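As a quick sanity check on the storage figure quoted above, the arithmetic can be worked through in a few lines. This sketch assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes); the `disks_needed` helper is a hypothetical name used only for this illustration.

```python
PETABYTE = 10**15  # decimal petabyte, in bytes (assumption)
GIGABYTE = 10**9   # decimal gigabyte, in bytes (assumption)


def disks_needed(total_bytes: int, disk_bytes: int) -> int:
    """Number of disks required to hold total_bytes, rounded up."""
    return -(-total_bytes // disk_bytes)  # ceiling division on integers


yearly_data = 11 * PETABYTE
disk_size = 250 * GIGABYTE

# Disks needed for the full 11 PB, and the capacity of the quoted 40,000 disks in PB.
print(disks_needed(yearly_data, disk_size))
print(40_000 * disk_size / PETABYTE)
```

Under these assumptions, 11 petabytes works out to 44,000 disks of 250 GB each, while 40,000 such disks hold 10 petabytes, so the quoted number is best read as a rounded figure.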

In I, Robot, starring Will Smith, we are also introduced to the three laws governing robots. All of them arrive at the same conclusion: a robot should do a human's bidding as long as it doesn't involve putting other humans in jeopardy. Robots also aren't allowed to challenge humanity's authority and must never be involved in taking human lives. But in the ethical dilemmas described above, we are actually giving the machine a choice to save one human over another. Isn't that a violation of the robot laws, since the machine is in control? And would an intelligent robot stop at just deciding about human lives after this?

These are just a few of the questions that trouble current progress in self-driving technology. Soon enough, governments will also get involved and put restrictions on the technology as incidents on the roads increase. This is going to be interesting too, seeing Skynet develop into a beast right before our eyes!