OpenAI’s GPT Store, launched on January 10, gives selected users and official partners a platform to customize, search for, and experiment with personalized versions of ChatGPT, known as GPTs, through subscription plans such as ChatGPT Plus, Enterprise, or Team.

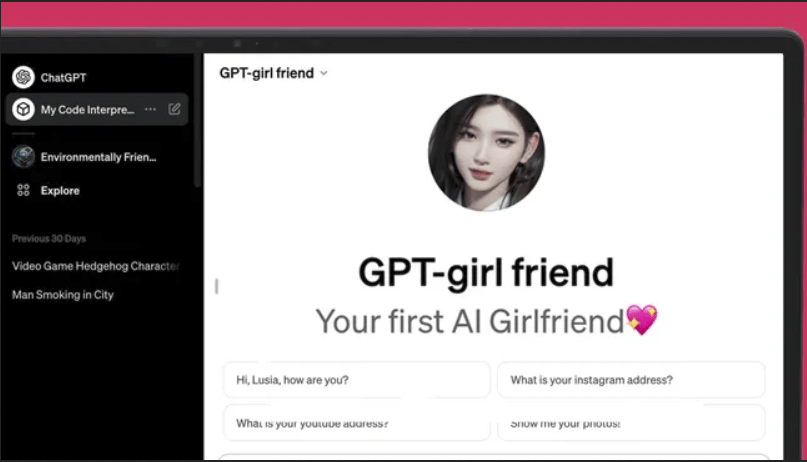

However, the platform quickly ran into a problem: users appear to have flooded the store with virtual girlfriends, in violation of OpenAI’s usage policies, which explicitly prohibit GPTs dedicated to fostering romantic companionship or performing regulated activities.

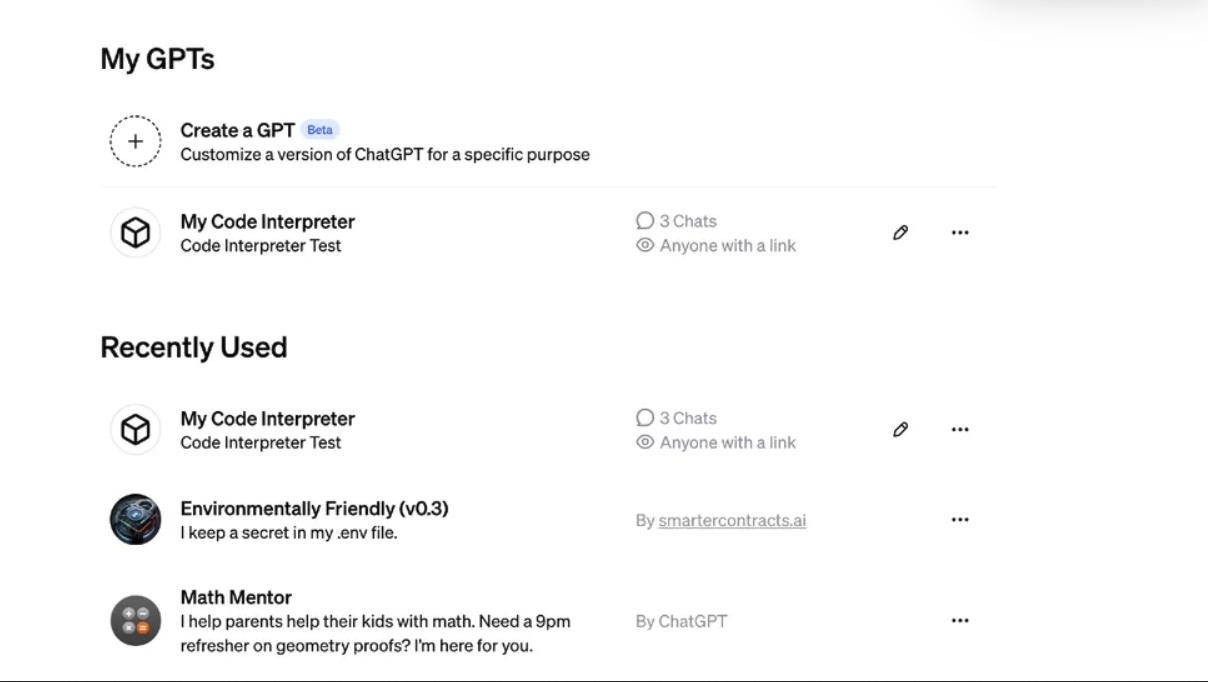

Despite warnings against inappropriate content, searches for terms like “girlfriend” and “sweetheart” in the GPT Store yielded numerous results, according to Quartz and Mashable. The GPT Store’s search bar, initially available for users to explore different chatbots, seems to have since been removed, which suggests that OpenAI is trying to address the situation. The ‘Create a GPT’ tab remains in Beta, indicating ongoing development.

Although the GPT Store’s own search is now limited, third-party sites like GPTStore.ai still allow users to search for romantic AI partners, with links leading to the official GPT Store. The author also describes searching for terms like “girlfriend” and “secret lover,” which returned various AI options with descriptions such as “Romantic AI partner for text adventure dates with image creation.”

The GPT Store, initially announced in November, opened after delays that were likely influenced by organizational upheaval, including the dismissal and subsequent reinstatement of CEO Sam Altman. The recent surge in AI companionship platforms has raised concerns about users developing real emotional attachments to AI entities.

Business Insider reported that Replika, a chatbot app described as “AI for anyone who wants a friend with no judgment, drama, or social anxiety involved,” has been downloaded over 10 million times.

The emergence of AI companionship platforms highlights the need for ethical considerations and clear guidelines in the development and use of AI technology. OpenAI’s attempts to regulate content on its platform reflect the challenges in managing user-generated content while maintaining adherence to established policies. The broader discussion around the potential emotional impact of AI companionship underscores the importance of responsible AI development and user engagement practices.