John Schulman, a co-founder of OpenAI, announced on Monday that he is leaving the Microsoft-backed company to join Anthropic, an AI startup funded by Amazon.

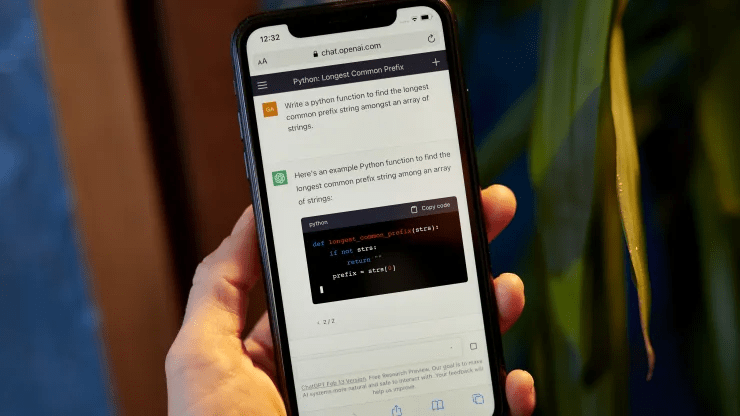

Schulman’s decision comes shortly after OpenAI dissolved its superalignment team, which was dedicated to ensuring that humans can keep control of highly capable AI systems. Schulman was previously a co-leader of OpenAI’s post-training team, which was responsible for refining the AI models used in the ChatGPT chatbot and in the programming interface offered to third-party developers. His biography notes that he has been with OpenAI since earning his Ph.D. in computer science from UC Berkeley in 2016. In June, OpenAI announced that Schulman would join a safety and security committee to advise the board.

In his social media post, Schulman expressed his desire to focus more intensely on AI alignment and return to hands-on technical work. He emphasized that his departure was not due to a lack of support from OpenAI’s leadership, who he said were committed to investing in AI alignment.

Notably, Jan Leike and Ilya Sutskever, leaders of the now-disbanded superalignment team, also left OpenAI this year. Leike joined Anthropic, while Sutskever is starting a new company, Safe Superintelligence Inc.

Founded in 2021 by former OpenAI staff, Anthropic has been in fierce competition with tech giants such as Amazon, Google, and Meta to develop leading generative AI models capable of producing human-like text. In a reply to Schulman’s announcement, Leike expressed enthusiasm about working with him again.

Sam Altman, OpenAI’s co-founder and CEO, acknowledged Schulman’s contributions to the company’s early strategy in a post of his own. The departures of Schulman and others follow the board’s temporary ouster of Altman as CEO last November, a move that prompted protests from employees and the resignation of several board members. Altman was eventually reinstated, and OpenAI expanded its board.

Helen Toner, one of the board members who resigned, said in a podcast that Altman had given the board inaccurate information about the company’s safety processes. An independent review by the law firm WilmerHale found that the board’s decision to oust Altman was not motivated by concerns about product safety.

Altman recently said that OpenAI has been collaborating with the US AI Safety Institute to give the institute early access to OpenAI’s next foundation model, reinforcing the company’s commitment to AI safety.

He added that OpenAI is committed to allocating at least 20% of its computing resources to safety efforts.