Nvidia CEO Jensen Huang says his company’s AI chips are advancing faster than Moore’s Law, the decades-old rule of thumb that has historically guided computing innovation. After his keynote at CES in Las Vegas, Huang told TechCrunch that Nvidia’s systems are “progressing way faster than Moore’s Law.”

Moore’s Law, introduced by Intel co-founder Gordon Moore in 1965, predicted that the number of transistors on a chip would double roughly every year, a pace Moore revised in 1975 to roughly every two years, yielding exponential performance gains. The prediction held for decades, but its pace has slowed in recent years. Nvidia’s AI chips tell a different story, Huang argues. The company’s latest data center superchip is 30 to 40 times faster at AI inference workloads than its previous chips, he said. He attributes this acceleration to innovating in parallel across the entire technology stack: architecture, chips, systems, libraries, and algorithms.

Huang’s claims come amid debate over whether AI progress is stalling. Leading labs such as OpenAI and Google use Nvidia’s AI chips to advance the capabilities of their models. Huang also described three “AI scaling laws” that he says are driving progress: pre-training, post-training, and test-time compute. Nvidia’s chip innovations are making test-time compute, an expensive phase in which AI models “think” during inference, more efficient, he said.

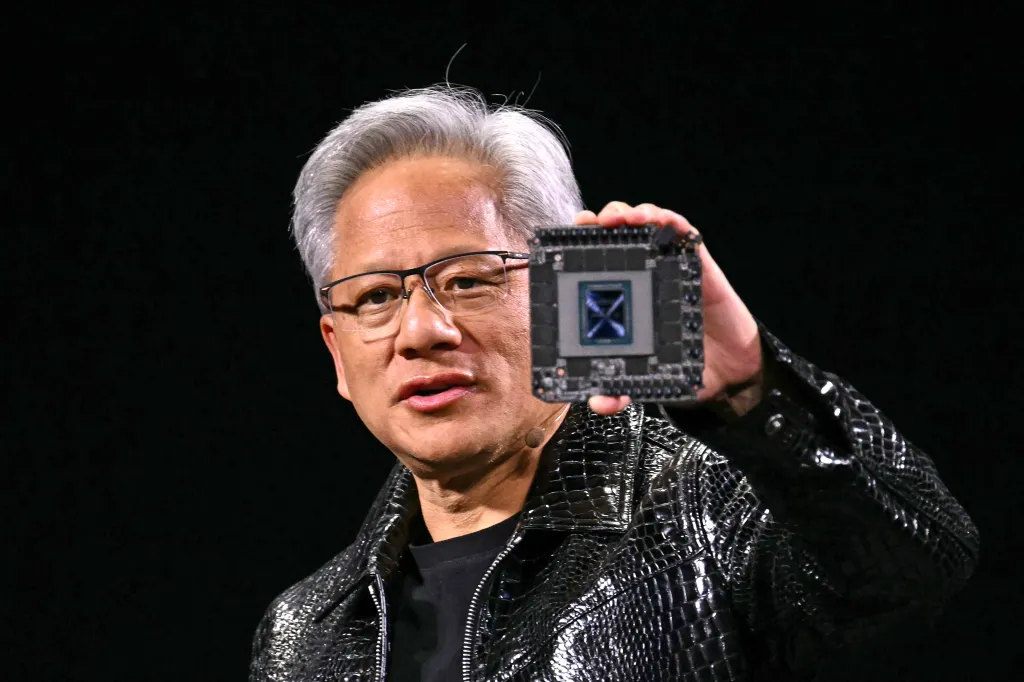

These advancements are exemplified by Nvidia’s latest chip, the GB200 NVL72, which Huang showcased at CES. He argued that the chip can dramatically cut the cost of running complex AI reasoning models. Such models are expensive to run at first, Huang says, but prices should fall over time as more powerful computing drives performance up.

Huang claims Nvidia’s AI chips have improved 1,000-fold over the past decade, a pace he says outstrips Moore’s Law. If that pace holds, AI computing could become both faster and more affordable as models continue to evolve and demand more sophisticated hardware.
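
To put the 1,000-fold figure in context, the short Python sketch below compares it with what a fixed doubling period would produce over ten years. It is an illustration only: the function names are hypothetical, and the two doubling periods used (one year, per the 1965 formulation, and two years, per Moore’s 1975 revision) are assumptions about which version of Moore’s Law is meant.

```python
import math


def growth_factor(years: float, doubling_period_years: float) -> float:
    """Total growth after `years` if a quantity doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def implied_doubling_period(factor: float, years: float) -> float:
    """Doubling period (in years) that would produce `factor` growth over `years`."""
    return years / math.log2(factor)


DECADE = 10

# Assumed doubling periods: 1 year (1965 formulation), 2 years (1975 revision).
yearly = growth_factor(DECADE, 1)
biennial = growth_factor(DECADE, 2)

# Huang's claimed improvement over the same decade.
claimed = 1000
period = implied_doubling_period(claimed, DECADE)

print(f"Doubling every year for a decade:      {yearly:,.0f}x")
print(f"Doubling every two years for a decade: {biennial:,.0f}x")
print(f"A {claimed:,}x gain implies doubling every {period:.2f} years")
```

Under the two-year doubling period, a decade yields about 32x, so a 1,000x gain would be far ahead of it. Under the one-year period, a decade yields 1,024x, so the same gain would be roughly on par.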