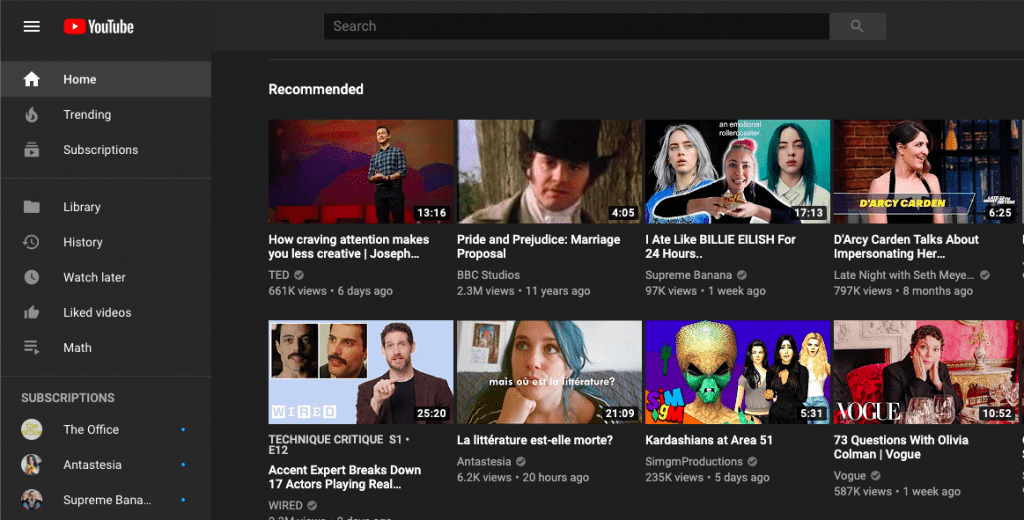

If you’ve been using YouTube for a while, you may have noticed that your home feed or explore feed sometimes just isn’t recommending things you like. Either it’s something completely different from what you usually watch, or it’s videos that serve some political purpose or agenda. Many have criticized YouTube’s recommendations and the AI algorithm behind them, saying that they’re routinely fed videos that promote hate speech, are designed to be eye-catching, and enrage people.

While some of the criticism may seem far-fetched, there is some truth to it. The “Up next” section at the end of any video is designed to keep you watching, and critics say it often recommends videos that incite hate or create controversy. Google has allegedly been trying to fix the problem. It has expanded the number of human moderators and continued to demonetize and remove hateful content. However, a new study says that YouTube’s recommender is still broken.

The new research was published by Mozilla, and it backs up the claim that YouTube’s AI continues to recommend content that promotes conflict and controversy among users. The folks at TechCrunch said that it continues to “puff up piles of bottom-feeding/low-grade/divisive/disinforming content”. Such content is designed to grab your attention, and it feeds YouTube’s main goal: making money by getting more views.

The thing is that most content creators have figured out how the algorithm works, so they keep pushing out videos that appease it. A few years back, YouTube faced severe backlash when lewd and violent videos started being shown to kids on YouTube Kids, its dedicated app for children. Mozilla’s study indicates that there is something systemic in the way the recommendation AI works.

According to the study, the AI is still behaving badly despite Google’s claims that it had made reforms to fix the problem. This suggests that many of those claims were overstated and were made mainly to quell the criticism. Mozilla’s approach to gathering data was unusual. It used a browser extension called RegretsReporter, which allowed users to self-report YouTube videos they “regretted” watching. Around 71% of the reported regrets came from videos that YouTube itself had recommended.

The study says that for Google to actually get a handle on things, what’s needed is “common sense transparency laws, better oversight, and consumer pressure”. This means laws that make it mandatory for AI systems to be transparent, so that users, or at least regulators, know how they work. The study also recommended protecting independent researchers so that they can continue to figure out such algorithmic systems through trial and error.