Artificial intelligence is advancing quickly, and the prospect of artificial general intelligence (AGI) is drawing closer attention. AGI is generally understood as machines that can reason and learn across tasks as flexibly as humans do. In the midst of this AI revolution, a crucial question looms large: can AGI, with all its potential, help address the paramount challenge of our times, climate change?

As the capabilities of AI technology continue to expand, it holds immense potential for mitigating climate change. Present-day AI models can monitor environmental variables and predict natural disasters, offering valuable insights into climate-related challenges. However, a serious concern arises when considering the environmental toll these energy-intensive AI systems exact. The carbon footprint of the data centers hosting these AI models depends on factors such as electricity consumption, water usage, and how often equipment is replaced.

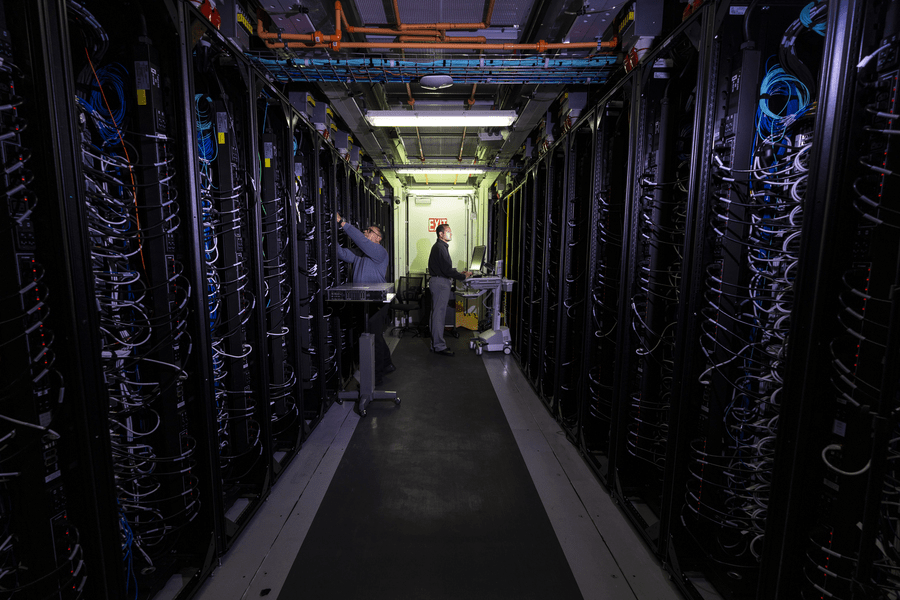

A report by Climatiq underscores the impact of cloud computing, estimating that it accounts for 2.5% to 3.7% of global greenhouse gas emissions, more than the 2.4% attributed to commercial aviation. This data is a few years old, and with the rapid advancement of AI, energy demands have likely grown further. A case in point is the Lincoln Laboratory Supercomputing Center (LLSC) at MIT, which has seen a surge in AI workloads in its data centers. This surge prompted its computer scientists to look for more energy-efficient ways of running them.

Vijay Gadepally, a senior staff member at LLSC who leads its energy-aware research efforts, points out that “energy-aware computing is not really a research area.” Nevertheless, LLSC has taken the initiative to cap the power draw of graphics processing units (GPUs), the hardware behind energy-hungry AI models. For context, training OpenAI’s GPT-3 large language model is estimated to have consumed about 1,300 megawatt-hours of electricity, roughly what 1,450 average US households use in a month. By capping GPU power, the researchers achieved a 12 to 15% reduction in energy use, at the cost of slightly longer training times.
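A quick calculation helps put the 1,300 megawatt-hour figure in household terms. The sketch below assumes an average US household uses roughly 900 kWh of electricity per month (a round figure chosen for illustration, not taken from the article), and the function names are hypothetical. The second helper shows what a 12 to 15% saving would mean for a job of a given size. Applying it to a GPT-3-scale run is purely illustrative, since the reported savings were measured on LLSC's own workloads.

```python
# Back-of-envelope arithmetic for the energy figures quoted above.
GPT3_TRAINING_MWH = 1_300        # figure quoted in the article
HOUSEHOLD_KWH_PER_MONTH = 900    # ASSUMPTION: rough average US household


def household_months(energy_mwh, kwh_per_month=HOUSEHOLD_KWH_PER_MONTH):
    """Return how many household-months of electricity energy_mwh equals."""
    return energy_mwh * 1_000 / kwh_per_month


def capped_energy_range(energy_mwh, low_saving=0.12, high_saving=0.15):
    """Return (min, max) energy in MWh after a saving in the given range."""
    return energy_mwh * (1 - high_saving), energy_mwh * (1 - low_saving)


if __name__ == "__main__":
    months = household_months(GPT3_TRAINING_MWH)
    print(f"About {months:,.0f} household-months of electricity")

    low, high = capped_energy_range(GPT3_TRAINING_MWH)
    print(f"Hypothetical capped run: {low:,.0f} to {high:,.0f} MWh")
```

Under that assumption the result lands in the mid-1,400s of household-months, which is far more than a single household's monthly use.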

To further bolster energy efficiency, LLSC developed software that empowers data center administrators to set power limits system-wide or on a per-job basis. The outcome? Lower GPU temperatures, reduced strain on cooling systems, and a potential extension of hardware lifespan.
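The article does not describe how LLSC's software works internally, so the following is only a sketch of the general idea: a policy with a system-wide default cap and optional per-job overrides, translated into `nvidia-smi` calls. The `-i` (GPU index) and `-pl` (power limit in watts) flags are real `nvidia-smi` options, but `PowerPolicy`, `commands_for_job`, the job identifiers, and the wattages are hypothetical. The code builds the commands without running them, since applying a limit normally requires administrator privileges and a value within the card's supported range.

```python
from dataclasses import dataclass, field


@dataclass
class PowerPolicy:
    """Hypothetical policy: one default cap plus per-job overrides."""
    default_watts: int
    per_job_watts: dict = field(default_factory=dict)  # job id -> watts

    def limit_for(self, job_id):
        # Fall back to the system-wide cap when a job has no override.
        return self.per_job_watts.get(job_id, self.default_watts)


def nvidia_smi_command(gpu_index, watts):
    """Build (but do not run) the command that sets one GPU's power limit."""
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def commands_for_job(policy, job_id, gpu_indices):
    """Return one power-limit command per GPU assigned to the job."""
    watts = policy.limit_for(job_id)
    return [nvidia_smi_command(i, watts) for i in gpu_indices]


if __name__ == "__main__":
    policy = PowerPolicy(default_watts=250, per_job_watts={"job-42": 150})
    for cmd in commands_for_job(policy, "job-42", [0, 1]):
        print(" ".join(cmd))
```

An administrator script could pass each command to `subprocess.run` once the values have been checked against the limits the hardware reports.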

The International Energy Agency (IEA) underscores the paramount importance of energy efficiency within the digital technology sector for the coming decade. Curtailing emissions growth necessitates advancements in energy efficiency, widespread adoption of zero-carbon electricity, and the decarbonization of supply chains. The IEA also stresses the indispensability of robust climate policies to ensure that digital technologies reduce emissions rather than exacerbate them.

MIT researchers did not stop at optimizing energy use within data centers. They also turned their attention to the energy-intensive training phase of AI models. Training consumes substantial data and energy, especially when many candidate configurations are explored. To address this, the team developed a predictive model that estimates how well each configuration is likely to perform, so that underperforming configurations can be terminated early. This approach resulted in an 80% reduction in the energy used for model training.
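The idea of stopping weak configurations early can be sketched in a few lines. The example below is not the MIT team's predictor, which the article does not describe. It swaps in a deliberately crude stand-in that linearly extrapolates each configuration's last two validation scores, and it assumes scores are accuracies between 0 and 1. All names and numbers are hypothetical.

```python
def predict_final_score(history, total_epochs):
    """Extrapolate the last two scores linearly to the final epoch.

    A crude stand-in for a learned performance predictor. Expects at
    least one recorded score and caps the prediction at 1.0.
    """
    if len(history) < 2:
        return history[-1]
    slope = history[-1] - history[-2]
    remaining = total_epochs - len(history)
    return min(1.0, history[-1] + slope * remaining)


def select_survivors(histories, total_epochs, keep=1):
    """Return the names of the `keep` configurations predicted to do best."""
    predicted = {
        name: predict_final_score(scores, total_epochs)
        for name, scores in histories.items()
    }
    ranked = sorted(predicted, key=predicted.get, reverse=True)
    return ranked[:keep]


def epochs_saved(histories, survivors, total_epochs):
    """Count the training epochs avoided by stopping the other configs."""
    return sum(
        total_epochs - len(scores)
        for name, scores in histories.items()
        if name not in survivors
    )


if __name__ == "__main__":
    # Validation accuracy after the first three of ten planned epochs.
    histories = {
        "config_a": [0.50, 0.60, 0.68],
        "config_b": [0.40, 0.42, 0.43],
        "config_c": [0.55, 0.56, 0.565],
    }
    survivors = select_survivors(histories, total_epochs=10, keep=1)
    print("Keep training:", survivors)
    print("Epochs avoided:", epochs_saved(histories, survivors, 10))
```

Epochs avoided are only a proxy for energy saved, and a real predictor would need to be validated so that promising configurations are not discarded too soon.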

As we ride the wave of AGI technology advancement, we must grapple with its substantial environmental footprint. Efforts to enhance energy efficiency within data centers and during AI model training represent pivotal steps towards ensuring that AGI technology not only empowers humanity but also plays a significant role in confronting the global challenge of climate change.

Striking a harmonious balance between technological innovation and environmental responsibility stands as an urgent imperative for our shared future.