When Bill Dally joined Nvidia’s research lab in 2009, it was a modest operation with a dozen researchers focusing mainly on ray tracing. Fast forward to today, and the lab has grown into a 400-strong team driving breakthroughs in AI, robotics, and simulation, all while helping propel Nvidia into a $4 trillion tech giant.

Dally, now Nvidia’s chief scientist, first connected with the company in 2003 while at Stanford. His initial plan to take a sabbatical after stepping down as chair of Stanford’s computer science department was quickly derailed by Nvidia’s leadership. CEO Jensen Huang and then-lab head David Kirk made a “full-court press” to bring him on board.

“It wound up being kind of a perfect fit for my interests and my talents,” Dally recalled. “I think everybody’s always searching for the place in life where they can make the biggest contribution to the world. And I think for me, it’s definitely Nvidia.”

When he took charge in 2009, Dally pushed for rapid expansion, moving beyond ray tracing into areas like circuit design and very large-scale integration (VLSI). Soon, the lab turned its attention to AI-powered GPUs. As early as 2010, the team recognized the transformative potential of AI.

“We said this is amazing, this is gonna completely change the world,” Dally said. “We have to start doubling down on this… long before it was clearly relevant.”

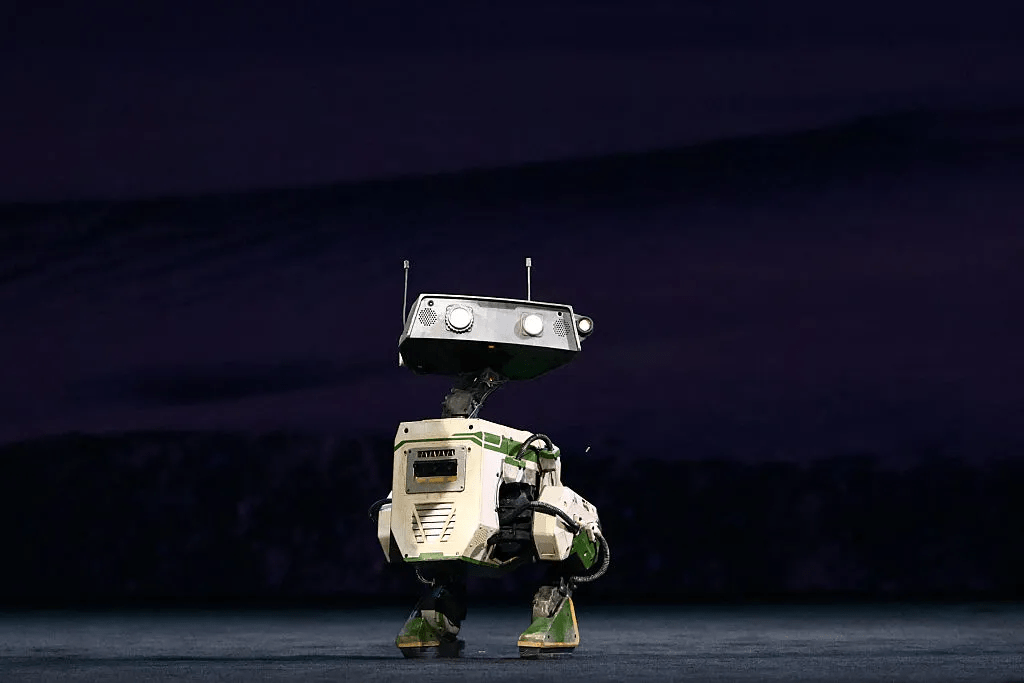

With Nvidia now dominating the AI GPU market, the lab is pursuing its next big target: physical AI and robotics.

“Eventually robots are going to be a huge player in the world and we want to basically be making the brains of all the robots,” Dally said.

That mission is shared by Sanja Fidler, Nvidia’s vice president of AI research, who joined in 2018. Already working on robot simulation models at the time, she was personally recruited by Huang.

“Jensen told me, come work with me, not with us, not for us,” Fidler said. “It’s just such a great topic fit and at the same time was also such a great culture fit.”

Fidler established a Toronto-based lab focused on Omniverse, Nvidia’s platform for simulating physical AI. A major early challenge was acquiring and processing vast amounts of 3D data. Her team invested in differentiable rendering, which lets AI reverse the traditional graphics pipeline and turn 2D images or video into 3D models.

The lab’s first milestone was GANverse3D, which launched in 2021 and converted images into 3D models. By 2022, Nvidia introduced its Neural Reconstruction Engine to handle video, using data from robots and self-driving cars. These technologies now underpin the company’s Cosmos world AI models, unveiled at CES earlier this year.

The focus now is on speed. “The robot doesn’t need to watch the world in the same time, in the same way as the world works,” Fidler explained. “It can watch it like 100x faster… they’re going to be tremendously useful for robotic or physical AI applications.”

At SIGGRAPH 2025, Nvidia announced a new generation of world AI models for creating synthetic training data, along with fresh libraries and infrastructure for robotics developers.

Still, both Dally and Fidler caution that humanoid robots in everyday life are years away. “We’re making huge progress and I think AI has really been the enabler here,” Dally said. “Starting with visual AI for perception, then generative AI for planning and manipulation… as we solve each problem and data grows, these robots are going to grow.”