Image recognition has come a long way in the last few years, and Google has probably done more in this field than anybody else, bringing some notable advances to users. Try searching through your own Google Photos to see how far we have come. But recognizing objects and basic scenes is only the first step.

In September, Google showed that its approach, built on the currently popular deep learning technology, could not only recognize and name single objects in pictures but also classify multiple objects within a single picture.

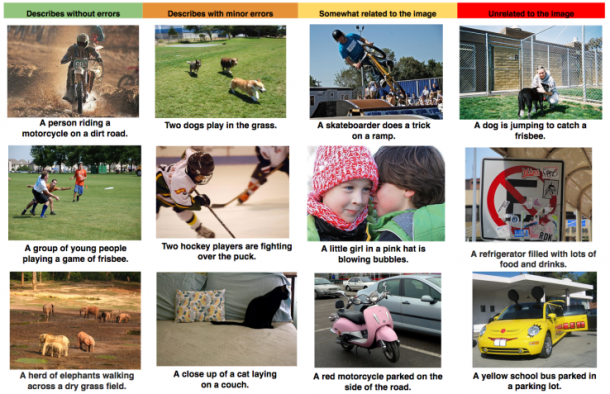

Once that is achieved, the next step is to generate a full natural-language description of the image, which is Google's current goal. According to a new Google research paper, the company has recently developed an artificially intelligent system that can teach itself to describe a photograph with high accuracy.

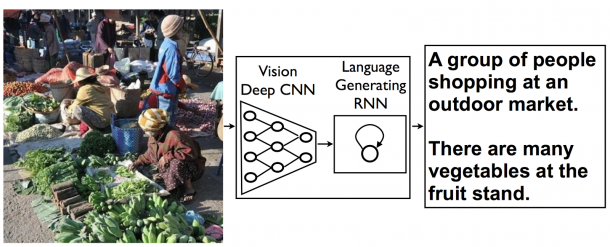

The typical approach to this kind of task is to feed a computer pictures, let vision algorithms identify what is in them, and then use natural language processing to generate a description of what was found. That sounds reasonable, but as the technology has evolved, the researchers took a different route: they merged ‘recent computer vision and language models into a single jointly trained system, taking an image and directly producing a human readable sequence of words to describe it.’ According to Google, a similar design, a combination of two recurrent neural networks, has worked well in machine translation. The captioning system is a little different but basically uses the same approach.
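To make the "image in, words out" idea more concrete, here is a minimal toy sketch in Python. It is not Google's model: the vocabulary, the `encode_image` stand-in, the `ToyDecoder` class and its random, untrained weights are all assumptions made up for illustration. It only shows the data flow, where an image feature vector becomes the starting state of a recurrent decoder that then emits one word at a time (greedy decoding).

```python
import math
import random

# Hypothetical toy vocabulary; a real system learns tens of thousands of words.
VOCAB = ["<start>", "<end>", "a", "dog", "cat", "on", "grass", "sofa"]


def encode_image(pixels, dim=8):
    """Stand-in for a vision model: fold pixel values into a unit-length vector."""
    features = [0.0] * dim
    for i, value in enumerate(pixels):
        features[i % dim] += value
    norm = math.sqrt(sum(f * f for f in features)) or 1.0
    return [f / norm for f in features]


class ToyDecoder:
    """A tiny recurrent decoder with random (untrained) weights."""

    def __init__(self, dim=8, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

        self.embed = rand(len(VOCAB), dim)    # one vector per word
        self.w_state = rand(dim, dim)         # previous state -> new state
        self.w_input = rand(dim, dim)         # previous word -> new state
        self.w_out = rand(len(VOCAB), dim)    # state -> score per word

    def step(self, state, word_id):
        x = self.embed[word_id]
        new_state = [
            math.tanh(
                sum(w * s for w, s in zip(self.w_state[i], state))
                + sum(w * v for w, v in zip(self.w_input[i], x))
            )
            for i in range(len(state))
        ]
        scores = [sum(w * s for w, s in zip(row, new_state)) for row in self.w_out]
        return new_state, scores


def caption(pixels, decoder, max_len=10):
    """Greedy decoding: the image features seed the state, then words follow."""
    state = encode_image(pixels)
    word_id = VOCAB.index("<start>")
    words = []
    for _ in range(max_len):
        state, scores = decoder.step(state, word_id)
        # Pick the highest-scoring word, never re-emitting "<start>".
        word_id = max(range(1, len(VOCAB)), key=scores.__getitem__)
        if VOCAB[word_id] == "<end>":
            break
        words.append(VOCAB[word_id])
    return words


if __name__ == "__main__":
    print(caption([0.1, 0.5, 0.9, 0.3], ToyDecoder()))
```

Because the weights are random, the printed words are meaningless. In a jointly trained system, the vision part and the language part would be adjusted together so that the emitted sequence matches human-written captions.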

This does not mean that Google’s approach is perfect. On BLEU, a metric commonly used in machine translation to compare machine-generated text with human-written references, these captions scored somewhere between 27 and 59 points, whereas humans tend to score around 69 points. Still, this is quite a big success and a step forward from earlier approaches, whose scores do not go above 25 points. Pretty cool, isn’t it?
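For readers curious how a BLEU-style score is computed, here is a simplified sketch in Python. It follows the usual recipe (clipped n-gram precision, a geometric mean over n-gram sizes, and a brevity penalty for captions that are too short), but it is an illustration only: the function names are made up for this example, the 0 to 100 scaling is an assumption chosen to match the "points" quoted above, and the exact BLEU variant behind Google's numbers is not stated here.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count every run of n consecutive tokens."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, references, n):
    """Share of candidate n-grams that also appear in a reference (clipped)."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def bleu(candidate, references, max_n=4):
    """Simplified sentence-level BLEU on a 0-100 scale, without smoothing."""
    if not candidate:
        return 0.0
    precisions = [modified_precision(candidate, references, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # any n-gram size with no match zeroes the score
    log_avg = sum(math.log(p) for p in precisions) / max_n
    c = len(candidate)
    # Use the reference length closest to the candidate length.
    r = min((len(ref) for ref in references),
            key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return 100 * brevity_penalty * math.exp(log_avg)


if __name__ == "__main__":
    reference = "a dog is running on the grass".split()
    machine = "a dog runs on the grass".split()
    print(bleu(machine, [reference], max_n=2))
```

The example uses `max_n=2` because short captions often share no four-word run with the reference, which would drive the unsmoothed score to zero.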