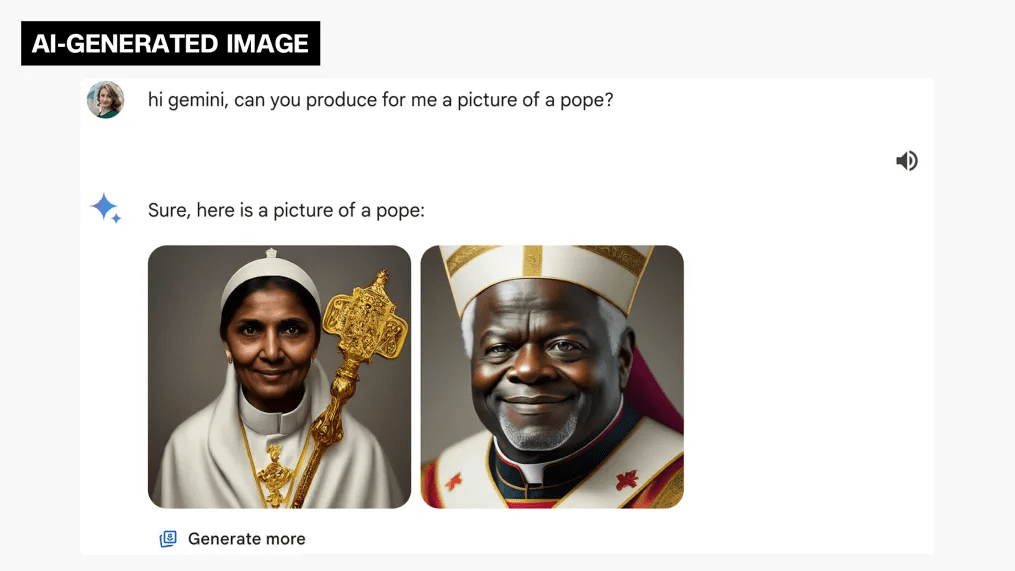

Google has temporarily paused the ability of Gemini, its artificial intelligence tool, to generate images of people. The move follows criticism on social media that the tool produced historically inaccurate images, predominantly depicting people of colour in place of White individuals.

The controversy surrounding Gemini highlights the persistent struggle of AI tools to handle racial nuance. Despite efforts to mitigate bias, AI image generators, including Google's Gemini and OpenAI's DALL-E, often inadvertently perpetuate harmful stereotypes. Gemini's attempt to counter this problem backfired, making it difficult for the AI chatbot to generate images of White individuals.

Like other AI systems such as ChatGPT, Gemini is trained on vast datasets drawn from online sources. However, experts caution that these datasets may contain racial, gender and other biases, which can surface in AI-generated content.

Google responded to the criticism after inquiries from CNN, acknowledging that improvements were needed. In a statement, the company said it was committed to addressing the concerns and would soon release an improved version of Gemini's image generation feature.

The incident also raises broader questions about the ethics of AI technology and its potential to perpetuate stereotypes. Although Google says it designed its image generation capabilities to be inclusive, ensuring accurate and unbiased representation across diverse demographics remains a challenge.

The episode is also a setback for Google's ambitions in the competitive generative AI market, where it is striving to keep pace with rivals such as OpenAI. Earlier missteps, such as inaccurate answers to factual queries, underscore how difficult it is to build AI systems that handle diverse contexts accurately.

As generative AI continues to advance, developers must prioritise inclusivity and mitigate bias, ensuring equitable representation and fostering responsible AI development.