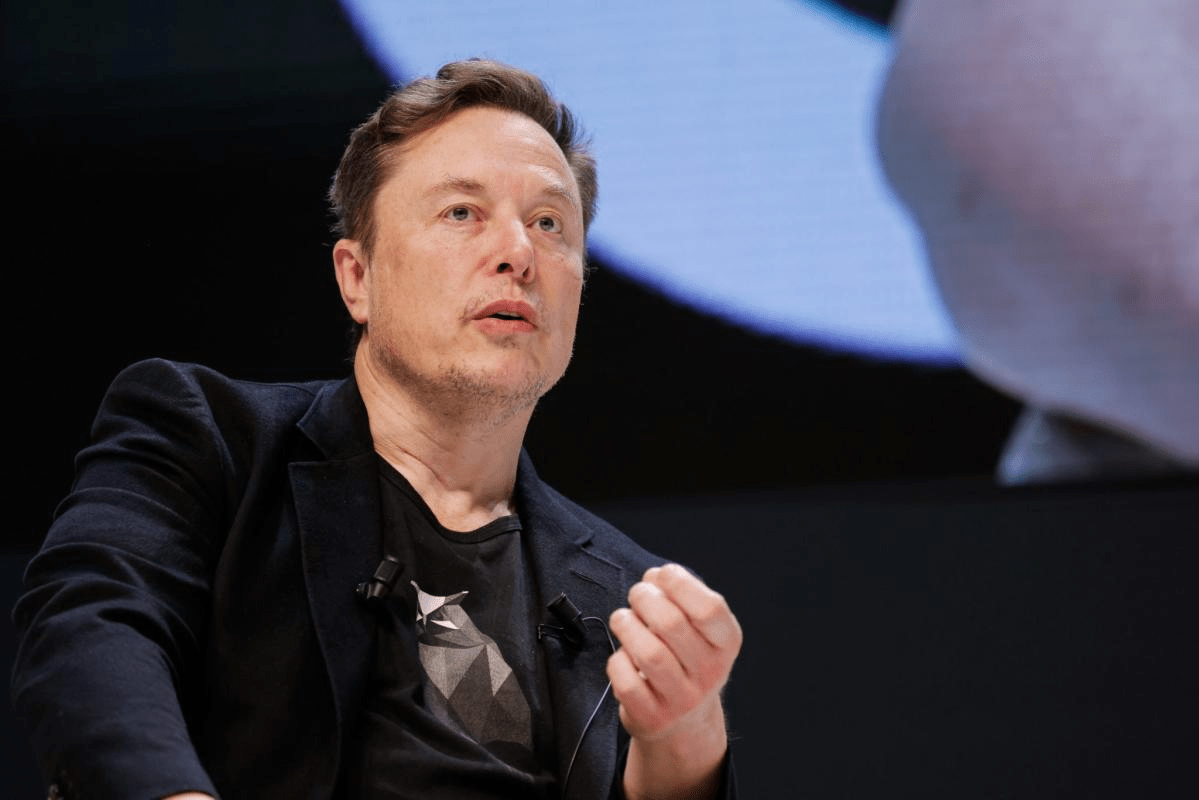

Elon Musk has done it again. His AI startup xAI has unveiled Colossus, which it calls the world's largest Nvidia GPU supercomputer. Built in just 122 days, the system shows the speed and scale with which Musk intends to lead AI development.

Colossus, built to train Grok, xAI's large language model (LLM), sits in a new data center in Memphis, Tennessee, and incorporates 100,000 Nvidia Hopper H100 GPUs. xAI bills it as the most powerful AI training system in the world. The startup, founded just last year, is positioning Grok, currently available to paying subscribers on Musk's X social media platform, as a rival to OpenAI's GPT-4. Some experts also believe Grok will eventually serve as the brain of Tesla's humanoid robot Optimus, a project Musk predicts will bring in $1 trillion annually.

The supercomputer's rapid construction and impressive specifications signal Musk's determination to dominate the AI landscape. He plans to double Colossus to 200,000 GPUs within months, including 50,000 of Nvidia's next-generation H200 processors, further accelerating xAI's work. Musk's partnership with Nvidia is central to these plans; he has pledged to spend up to $4 billion on AI hardware this year.

Despite the excitement, Colossus has raised concerns in Memphis. City officials worry about the strain on local resources, since the supercomputer needs massive amounts of water for cooling and electricity to run. Still, speed and ambition drive Musk's decision-making. In a conversation with podcaster Lex Fridman, he stressed the importance of accelerating every process, arguing that almost anything can be done faster than initially thought.

As xAI continues its meteoric rise, Musk’s strategic moves have placed him in a strong position in the race to develop artificial general intelligence, and Colossus is a testament to his ability to execute large-scale projects swiftly and effectively.