Chinese computer scientists report a nearly tenfold efficiency gain over an Nvidia-powered US supercomputer using domestically developed GPUs: their flood-simulation model matched the US system’s speedup with roughly one-ninth as many computing nodes.

According to a peer-reviewed study, researchers attributed their success to advanced software optimization techniques that enhanced the efficiency of Chinese-designed graphics processing units (GPUs). These optimizations enabled their system to outperform US supercomputers in specific scientific tasks.

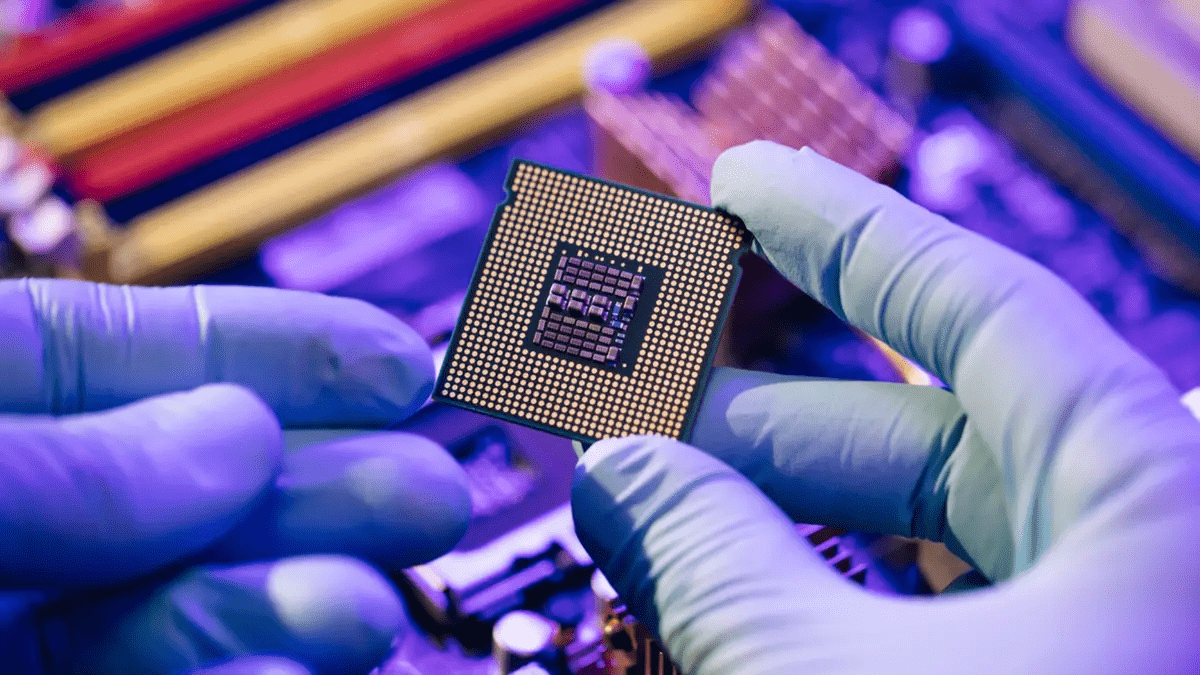

While software advances have temporarily bridged the performance gap, experts warn that hardware limitations remain a critical obstacle. This development aligns with Beijing’s broader strategy to reduce dependence on Western semiconductor technology, particularly as US sanctions continue to restrict China’s access to high-end chips such as Nvidia’s A100 and H100.

Scientific research requiring vast computational power, such as flood forecasting and urban waterlogging analysis, has long relied on advanced GPUs, which China has struggled to access because of US export controls. In addition, Nvidia’s CUDA software ecosystem runs only on Nvidia hardware, which has further hindered independent algorithm development.

To overcome these barriers, Professor Nan Tongchao from Hohai University’s State Key Laboratory of Hydrology-Water Resources and Hydraulic Engineering led a research initiative exploring a “multi-node, multi-GPU” parallel computing model using domestic processors and GPUs.
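
The article does not describe how the flood domain is divided among machines. As a minimal sketch, assuming a simple strip decomposition of a 2D grid (a common choice for grid-based flood solvers, not confirmed as Nan’s method), the hypothetical `assign_strips` function below gives each (node, GPU) pair a contiguous block of rows:

```python
from typing import List, Tuple


def assign_strips(n_rows: int, n_nodes: int, gpus_per_node: int) -> List[Tuple[int, int, int, int]]:
    """Hypothetical helper: return (node, gpu, first_row, end_row_exclusive) per GPU."""
    n_parts = n_nodes * gpus_per_node
    base, extra = divmod(n_rows, n_parts)
    plan = []
    start = 0
    for rank in range(n_parts):
        # The first `extra` ranks take one extra row so every grid row is covered.
        rows = base + (1 if rank < extra else 0)
        # Consecutive ranks are packed onto the same node before moving to the next.
        node, gpu = divmod(rank, gpus_per_node)
        plan.append((node, gpu, start, start + rows))
        start += rows
    return plan


if __name__ == "__main__":
    for node, gpu, lo, hi in assign_strips(n_rows=10, n_nodes=2, gpus_per_node=2):
        print(f"node {node} gpu {gpu}: rows {lo}..{hi - 1}")
```

Because consecutive ranks share a node, neighboring strips often sit on GPUs in the same machine, so their boundary data can move over intra-node links rather than the network. This placement is an illustrative assumption, not a detail reported in the study.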

Their findings emphasized the importance of efficient data transfer and task coordination in parallel computing. By refining data exchanges at the software level, Nan’s team substantially reduced the performance losses caused by the limitations of domestic hardware.
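
In grid-based parallel solvers, data exchange typically means subdomains sharing their boundary values. The sketch below is a single-process simulation under stated assumptions, not the team’s code: each hypothetical subdomain carries one ghost row above and below, `exchange_halos` fills those ghost rows from neighbors with one whole-row message in each direction, and `smooth_step` is a hypothetical stand-in for a real hydraulic update.

```python
from typing import List

Row = List[float]
Subdomain = List[Row]


def make_subdomain(interior: List[Row]) -> Subdomain:
    """Copy the interior rows and add one zero-filled ghost row above and below."""
    width = len(interior[0])
    return [[0.0] * width] + [row[:] for row in interior] + [[0.0] * width]


def exchange_halos(subdomains: List[Subdomain]) -> int:
    """Fill ghost rows from neighboring subdomains; return the number of messages."""
    messages = 0
    for upper, lower in zip(subdomains, subdomains[1:]):
        # One whole-row message each way instead of one message per cell.
        lower[0] = upper[-2][:]  # upper's last interior row -> lower's top ghost
        upper[-1] = lower[1][:]  # lower's first interior row -> upper's bottom ghost
        messages += 2
    return messages


def smooth_step(sub: Subdomain) -> Subdomain:
    """Hypothetical stand-in for a flow update: 3-point vertical average of interior rows."""
    out = [row[:] for row in sub]
    for i in range(1, len(sub) - 1):
        for j in range(len(sub[i])):
            out[i][j] = (sub[i - 1][j] + sub[i][j] + sub[i + 1][j]) / 3.0
    return out


if __name__ == "__main__":
    rows = [[float(3 * r + c) for c in range(3)] for r in range(6)]
    # Reference: update the whole grid at once.
    reference = smooth_step(make_subdomain(rows))[1:-1]
    # Decomposed: three subdomains of two rows each, exchange halos, then update.
    subs = [make_subdomain(rows[k:k + 2]) for k in range(0, 6, 2)]
    exchange_halos(subs)
    decomposed = [row for s in subs for row in smooth_step(s)[1:-1]]
    print(decomposed == reference)  # True
```

Checking that the decomposed update matches an undecomposed one is a quick way to confirm the exchange is correct. Sending whole rows rather than individual cells, which cuts per-message overhead, is one plausible form of the software-level refinement the study describes, though the article does not say which techniques were used.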

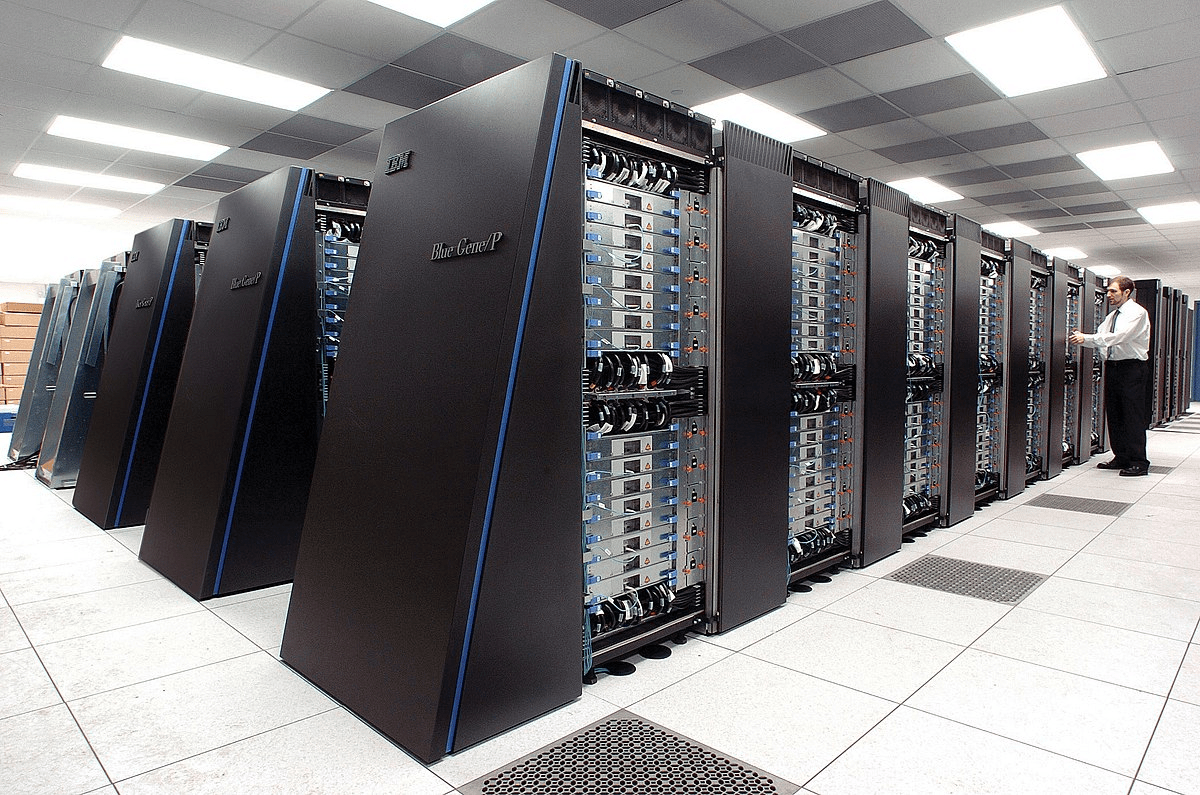

In 2021, researchers at Oak Ridge National Laboratory in the US developed a multi-GPU flood forecasting model, TRITON, using the Summit supercomputer. Despite utilizing 64 nodes, TRITON achieved only a sixfold speed increase.

In contrast, Nan’s model combines multiple GPUs within a single node to offset performance constraints. His team implemented the architecture on a domestic x86 platform using Hygon processors (32 cores, 64 threads, 2.5 GHz) and domestic GPUs, supported by 128 GB of memory and 200 Gb/s of network bandwidth. With just seven nodes, the model achieved a sixfold speedup, an 89% reduction in node usage compared with TRITON.
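
The node comparison can be checked directly. This worked example uses only the figures reported above (64 Summit nodes for TRITON, seven domestic nodes for Nan’s model, both reaching a sixfold speedup); the helper name `node_savings` is hypothetical.

```python
from typing import Tuple


def node_savings(baseline_nodes: int, new_nodes: int) -> Tuple[float, float]:
    """Return (fraction of nodes saved, how many times fewer nodes are used)."""
    reduction = 1 - new_nodes / baseline_nodes
    ratio = baseline_nodes / new_nodes
    return reduction, ratio


if __name__ == "__main__":
    # Figures from the article: TRITON on 64 Summit nodes, Nan's model on 7 nodes,
    # both reaching a sixfold speedup.
    reduction, ratio = node_savings(baseline_nodes=64, new_nodes=7)
    print(f"node reduction: {reduction:.1%}")  # node reduction: 89.1%
    print(f"node ratio: {ratio:.2f}x")         # node ratio: 9.14x
```

A ratio of about 9.1 is consistent with the "nearly tenfold" figure. It compares node counts only: the two systems use different hardware, and the article does not say what baseline each sixfold speedup is measured against.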

To validate the model, the team simulated the evolution of a flood at Zhuangli Reservoir in China’s Shandong province. Using 200 compute nodes and 800 GPUs, they completed the simulation in just three minutes, a speedup of more than 160 times.
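
Speedup is conventionally defined as baseline runtime divided by parallel runtime, so the reported figures imply a rough baseline runtime. The article does not say what the baseline hardware was, so this sketch (with hypothetical helper names) only derives what follows from the numbers: GPUs per node and an approximate lower bound on the baseline time.

```python
def gpus_per_node(total_gpus: int, nodes: int) -> float:
    """Average number of GPUs attached to each compute node."""
    return total_gpus / nodes


def implied_baseline_minutes(parallel_minutes: float, speedup: float) -> float:
    """speedup = baseline_time / parallel_time, so baseline_time = speedup * parallel_time."""
    return speedup * parallel_minutes


if __name__ == "__main__":
    print(gpus_per_node(800, 200))                # 4.0
    print(implied_baseline_minutes(3, 160) / 60)  # 8.0 (hours)
```

With a speedup above 160 and a three-minute run, the baseline would have taken roughly eight hours or more. Because both inputs are rounded, treat this as an order-of-magnitude check rather than a reported result.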

“Simulating floods at a river basin scale in just minutes means real-time simulations of flood evolution and various rainfall-runoff scenarios can now be conducted more quickly and in greater detail,” Nan explained. “This can enhance flood control and disaster prevention efforts, improve real-time reservoir management, and ultimately reduce loss of life and property.”

The research code has been made available on an open-source platform, and Nan emphasized that the model’s applications could extend beyond flood simulations to areas such as hydrometeorology, sedimentation, and surface-water-groundwater interactions.

“Future work will expand its applications and further test its stability in engineering practices,” he added.

The study was published in the Chinese Journal of Hydraulic Engineering.